Edge cloud strategies for SaaS

SaaS must scale infrastructure, ensure security, and comply with regulations while innovating. Strategies include on-prem solutions for control, enhancing observability, and using edge computing to reduce latency and improve performance.

Software is eating the world, and Software as a Service (SaaS) is a big part of that. But being a SaaS organization isn’t all rainbows and high profit margins – SaaS companies must navigate a landscape of constant turmoil. Despite the widespread adoption and undeniable benefits of cloud-based software delivery, the industry grapples with relentless challenges that eat away at their performance, reliability, and productivity.

Key challenges include scaling infrastructure to meet growing demand, ensuring robust security against sophisticated cyber threats, and maintaining compliance with an ever-evolving regulatory environment. In addition, fierce competition and rapidly changing customer expectations compel SaaS providers to continually innovate, often disrupting their own business models to stay ahead. This dynamic environment highlights the paradox of a mature industry that remains in a state of perpetual flux, driven by its own success and external pressures.

Scaling, performance, and cost

Providers often struggle to scale their infrastructure efficiently and cost-effectively without sacrificing performance. This can result in latency issues, service disruptions, and a potential loss of customer loyalty. Managing hybrid and multi-cloud environments adds complexity, making it hard to maintain seamless data synchronization and consistent user experiences across different platforms.

While this report covers various challenges, we will specifically address the following three areas and their impact on observability, performance, and flexibility:

1. Less cloud, more control

SaaS companies continue to shift more of their stack off of the big cloud providers and back to on-premises and hybrid solutions due to a growing desire to manage their infrastructure. This move helps address strategic and operational challenges because on-premises infrastructures give these companies greater control over their data environments.

2. Better visibility for better decisions

Observability is crucial for real-time system insights and performance monitoring, letting companies react before an issue becomes a problem or starts to impact other systems. For SaaS companies, real-time visibility enhances reliability and user satisfaction while making internal operations roles easier and less stressful.

3. Benefitting by moving more to the edge

Caching isn’t just for static content anymore. Even SaaS companies can greatly benefit from implementing more advanced caching at the network edge. Embracing edge computing can enhance the user experience and significantly improve application performance. Doing more at the edge reduces latency by storing frequently accessed data and workloads closer to end-users, resulting in faster load times, greater origin offload, and reduced bandwidth usage.

Architecture flexibility gives SaaS providers more control

Hybrid cloud and on-premises solutions are growing in popularity in SaaS organizations for several compelling reasons.

1. Greater control over data security and compliance

Many industries, particularly those handling sensitive information like finance and healthcare, are subject to stringent regulatory requirements that mandate where and how data must be stored and processed. Hybrid cloud solutions offer the flexibility to keep sensitive data on-premises or in private clouds while leveraging the scalability and cost-effectiveness of public clouds for less sensitive workloads. This balance helps SaaS providers meet compliance requirements while benefiting from cloud technologies' efficiencies.

2. Ability to optimize performance and reduce latency

By keeping critical applications and data closer to end-users, providers can significantly enhance the user experience. This is particularly important for applications requiring real-time processing or high transaction volumes. Furthermore, the hybrid model allows for more resilient disaster recovery and business continuity planning. In a public cloud outage, on-premises or private cloud resources can ensure critical services remain operational. Additionally, some SaaS providers are looking to reduce their dependency on a single cloud provider to mitigate risks associated with vendor lock-in, ensuring greater flexibility and negotiating power. The hybrid and on-premises approaches enable SaaS providers to tailor their infrastructure more precisely to their specific operational needs and strategic goals.

3. Customization, security, and compliance

On-prem solutions provide SaaS providers complete control over their hardware and software environments, enabling them to customize their infrastructure to meet specific performance and security requirements. This level of control can help with management of sensitive data and regulatory compliance. On-prem infrastructure can be optimized for high performance and low latency, ensuring critical applications run smoothly and efficiently. Additionally, on-prem and hybrid deployments often allow for greater customization and integration with existing enterprise systems, facilitating seamless operations and enhanced service delivery.

4. Greater flexibility

SaaS providers can achieve unparalleled flexibility by integrating public and private clouds with their on-prem infrastructure. They can dynamically allocate resources based on demand, scaling up during peak times using the public cloud and relying on private cloud or on-prem solutions for sensitive or critical workloads. This approach optimizes cost efficiency and enhances resilience and disaster recovery capabilities. Hybrid cloud environments enable SaaS companies to store and process data in the most appropriate setting, balancing performance, security, and compliance needs. This strategic mix allows SaaS providers to deliver robust, reliable, high-performing services while remaining agile and responsive to changing market conditions and customer requirements.

Avoiding the next “rip-and-replace”

The "rip and replace" strategy, which involves completely discarding an existing system or technology in favor of a new one, presents several significant risks for enterprise companies. While the promise of modernized systems and improved efficiencies may be enticing, this approach often comes with considerable challenges and dangers. One of the most immediate risks is the high upfront cost. Replacing an entire system requires a substantial financial investment in new hardware and software, as well as the necessary consulting, training, and support services. Enterprises might underestimate the full scope of these costs, leading to budget overruns. Additionally, unexpected expenses can arise from unforeseen complications during implementation, further straining financial resources. Implementing a new system can also cause significant disruptions to business operations, with potential downtime, reduced productivity, heavy burdens on customer service and support, and a negative impact on revenue. Employees may face a steep learning curve with the new technology, leading to further declines in efficiency and potential errors as they adapt to new processes.

SaaS organizations that are reducing their overdependence on big cloud providers through hybrid and multi-cloud strategies don’t want to immediately repeat the same mistakes in other parts of their architecture. Edge cloud providers are a key place to be wary of overdependence on a technology vendor that is more interested in lock-in and less interested in providing flexibility. To avoid the next painful rip-and-replace, it’s important to evaluate edge cloud providers based on how they enable more flexibility and make things like multi-CDN approaches easier. If their products are designed to box you in, that’s just a painful fix looming in your future.

Multi-CDN for even more flexibility

Implementing a multi-CDN strategy can significantly enhance SaaS companies' resilience, redundancy, and failover capabilities. By distributing content delivery across multiple CDN providers, companies can maintain availability and performance even if one provider experiences outages or performance issues. This redundancy minimizes the risk of downtime and helps end-users have a seamless experience, regardless of geographic location or network conditions. Additionally, a multi-CDN setup enables intelligent traffic routing, automatically diverting traffic to the best-performing or most reliable CDN at any given time. This improves load balancing and can enhance security by helping to mitigate DDoS attacks and other network threats. Overall, a multi-CDN approach provides a comprehensive solution for optimizing content delivery.
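As a rough illustration of the traffic-routing idea above, the sketch below picks the healthy CDN with the lowest recent latency. The `CdnHealth` record, the provider names, and the use of 95th-percentile latency as the selection signal are all assumptions made for this example; real traffic steering usually happens in DNS or at a load-balancing layer and weighs more signals than this.

```python
from dataclasses import dataclass


@dataclass
class CdnHealth:
    """Hypothetical snapshot of one CDN's latest health data."""
    name: str
    healthy: bool          # result of the most recent health check
    p95_latency_ms: float  # recent 95th-percentile latency


def pick_cdn(candidates: list[CdnHealth]) -> str:
    """Return the name of the healthy CDN with the lowest recent latency."""
    healthy = [c for c in candidates if c.healthy]
    if not healthy:
        raise RuntimeError("no healthy CDN available")
    return min(healthy, key=lambda c: c.p95_latency_ms).name


cdns = [
    CdnHealth("cdn-a", healthy=True, p95_latency_ms=48.0),
    CdnHealth("cdn-b", healthy=False, p95_latency_ms=31.0),  # fastest, but failing checks
    CdnHealth("cdn-c", healthy=True, p95_latency_ms=62.0),
]
print(pick_cdn(cdns))  # cdn-a
```

The unhealthy provider is skipped even though it reports the lowest latency, which is the failover behavior a multi-CDN setup is meant to provide.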

It's important not to let the tail wag the dog when making critical architectural decisions. Your Content Delivery Network (CDN) and security vendors should not dictate or hinder your strategic choices. While these vendors are essential for enhancing performance and ensuring security, they should support and complement your broader architectural vision rather than constrain it. Allowing them to unduly influence key decisions can lead to suboptimal outcomes, where the infrastructure is shaped more by vendor limitations and preferences than by your organization's needs.

When implementing multi-CDN strategies, not all CDNs know how to play nicely together. Some help you preserve features across your implementation, while others force you to work in a stripped-down product. Look for the CDN and edge cloud option that can help you implement a more feature-rich multi-CDN strategy and avoid vendors that make you strip out too many features to get the flexibility you need. It's important to focus on your overarching goals and ensure that external services integrate seamlessly into your system without compromising its integrity, scalability, or functionality. Balancing vendor capabilities with your strategic objectives will help you build a robust, flexible, and future-proof architecture.

Purchasing efficiency on cloud marketplaces

When expanding or upgrading your infrastructure, if hyperscaler cloud environments are still part of your plan, you should consider using vendors whose products can be purchased through online cloud marketplaces like AWS Marketplace, Google Cloud Marketplace, and Microsoft Azure Marketplace. This integration reduces deployment time and operational complexity. Additionally, you can use the credits you already have locked into those environments to purchase something that would otherwise have come out of cash reserves. Sometimes you can even get a discounted rate depending on the terms of the marketplace, while receiving the exact same product and features as if you had purchased from the vendor directly.

Visibility and informed decisions

In edge computing, observability refers to the capability to gain comprehensive insights into the internal state and performance of edge systems and applications by collecting, analyzing, and interpreting data from various sources, including logs, metrics, and traces. This process is vital for monitoring and understanding the behavior of these systems in real time to ensure they operate correctly, efficiently, and securely.

Achieving effective observability in edge computing presents a unique set of challenges. The sheer volume of data generated can be overwhelming, making it difficult to collect, process, and analyze efficiently. The decentralized nature of some edge environments complicates obtaining a unified view of the system's state. Additionally, low-end edge architectures have limited processing power and storage, which can restrict observability capabilities.

Another issue SaaS companies face is the delay in data collection and processing. Traditional monitoring tools might provide metrics and logs, but these are often siloed and lack the real-time capabilities needed for swift decision-making. As a result, when the data is compiled and analyzed, the information may already be outdated, leading to missed opportunities or delayed responses to critical issues. It’s important to note that observability software systems vary across tools and implementations, leading to differences in their insights. Some observability solutions use sampled data, collecting only a subset of available telemetry data to reduce storage and processing costs. However, this may fail to capture the complete system behavior, potentially missing critical anomalies or performance issues. In contrast, comprehensive observability tools collect and analyze full-fidelity data, ensuring a more accurate understanding of the system's health and performance. Furthermore, the complexity of modern SaaS architectures, which often involve microservices and distributed deployments, adds another layer of difficulty in correlating data from different sources to get a comprehensive view of system performance and user experience. Organizations must carefully assess their observability needs and choose tools that offer the depth and granularity required to maintain robust and reliable systems.
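The trade-off between sampled and full-fidelity telemetry can be seen in a small synthetic example. The latency values, the position of the outlier, and the fixed 1-in-10 sampling interval are all invented for illustration; real tools use a variety of sampling strategies, and some are designed to retain outliers.

```python
# Synthetic latencies in milliseconds: 1,000 normal requests and one slow outlier.
latencies_ms = [20] * 1000
latencies_ms[437] = 5000  # the anomaly we would like to notice

# Fixed-interval sampling: keep every 10th record (an assumed 10% sample rate).
sampled_ms = latencies_ms[::10]

print(max(latencies_ms))  # 5000: the full-fidelity data contains the outlier
print(max(sampled_ms))    # 20: this particular sample never saw it
```

In this run the sampled view reports a perfectly healthy system, while the complete data shows a request that took five seconds.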

Observability is crucial for maintaining the health, security, and efficiency of edge systems. It combines metrics, logs, and traces to provide comprehensive real-time insights into system operation. Despite challenges related to data volume, distribution, and resource constraints, using specialized tools and advanced techniques ensures effective monitoring and management of edge computing environments.

The significance of observability in edge computing cannot be overstated. It enables real-time monitoring, which is crucial because edge environments often operate in dynamic and distributed contexts, necessitating continuous oversight to ensure optimal performance. Observability also facilitates the rapid detection and resolution of issues, minimizing downtime and service disruptions. Furthermore, it enhances security by enabling the detection and analysis of anomalies and potential threats, allowing for proactive responses. Observability insights can also be leveraged to optimize resource usage and improve system efficiency. Observability can significantly help SaaS companies overcome these challenges by providing a more integrated and real-time view of their systems. Unlike traditional monitoring, which typically focuses on individual components, observability aims to offer end-to-end visibility into the entire application stack. This includes collecting and analyzing data from logs, metrics, and traces, providing a holistic view of how applications perform and how users interact with them.

Better observability benefits SaaS companies in two critical ways:

Real-time monitoring and analysis enable faster detection and resolution of issues, minimizing downtime and improving the reliability of the service. This is crucial in maintaining customer trust and satisfaction, as even minor disruptions can lead to significant customer churn.

Observability provides deeper insights into user behavior and system performance, helping companies identify patterns and trends that inform strategic decisions. For instance, understanding how users interact with certain features can guide product development and prioritize enhancements that deliver the most value.

Using Web Application Firewalls to Provide Actionable Insight

Web Application Firewalls (WAFs) can enhance observability by acting as an ingestion log. They record requests in real time, before those requests are handled or arrive at origin servers. In contrast, traditional data analytics tools serve as lagging indicators where you only see the data after it has hit your origin. WAFs are designed to monitor, filter, and block HTTP traffic to and from a web application, providing a rich data source on web traffic, user behavior, and potential security threats.

Utilizing WAFs as an ingestion log can offer several advantages over traditional data analytics tools:

1. WAFs see it first

WAFs operate inline, inspecting traffic in real time. This allows them to capture and log data as it happens, providing immediate insights into users' behavior and potential threats. Traditional data analytics tools, in contrast, often work on data that has been collected, stored, and then processed, introducing a delay between events and their analysis. This delay can result in missed opportunities for real-time decision-making and threat mitigation.

2. Detailed data, and lots of it

Additionally, as an ingestion log, WAFs provide detailed information about the requests and responses that pass through them. This includes metadata such as IP addresses, request headers, payloads, and response codes, which can be invaluable for understanding user behavior and identifying anomalies. Traditional analytics tools aggregate data to identify trends, but they may not capture the granularity needed to diagnose specific issues or threats as they arise.

3. Fast insights power fast responses

By leveraging the logging capabilities of WAFs, organizations can gain immediate insights into security events, such as attempted SQL injection attacks, cross-site scripting (XSS), and other common threats. Traditional analytics tools might flag such events only after they have been processed and analyzed, potentially allowing threats to go unaddressed for critical periods.

4. Easy integrations let your teams do more

Organizations can integrate WAF logs with their broader observability platforms to maximize the benefits of using WAFs as ingestion logs. By doing so, they can correlate real-time WAF data with other metrics and logs from their applications and infrastructure, gaining a comprehensive view of their system’s health and performance. By integrating WAF logs with other observability tools, organizations can achieve a more responsive, secure, and optimized web application environment. This integrated approach enhances the ability to detect, diagnose, and respond to issues swiftly and effectively.

Fastly Next-Gen WAF is GitGuardian’s top secret-keeping tool

GitGuardian secures applications with Fastly Next-Gen WAF, eliminating false positives.

A better CDN can boost SaaS performance

SaaS companies can benefit significantly from caching data, even when dealing with high volumes of unique data. With SaaS, much more of the data is distinctive compared to a digital publisher who is sending out the same article or image to every online reader. However, there is still a lot that is possible with more advanced caching techniques, and many queries and requests are still repetitive. Caching can temporarily store the results of frequent queries, reducing the need to query the database repeatedly. This can save database processing time and resources, improving overall performance. By strategically caching non-unique or frequently accessed data, SaaS applications can significantly reduce the time and cost required to respond to end users. For example, while individual user data may be unique, certain types of data, such as configuration settings, static assets, and commonly accessed resources, can be cached to provide faster access. Even user data could be stored at the edge after a first query if it’s likely to be queried multiple times over the rest of a session.

Caching data offers several benefits, including reducing the load on your origin servers, which leads to cost savings in infrastructure and egress traffic. When switching to Fastly, customers have seen increases in offload of 10% or more. While this might seem like a relatively small improvement, it can have a significant impact. For example, increasing origin offload from 80% to 90% halves the traffic directed to the origin servers: instead of 20% of the traffic being handled by the origin, only 10% is. To put it in perspective, if the original traffic is 100 units, the origin servers process 20 units at 80% offload but only 10 units at 90% offload. This 50% reduction in origin load leads to improved performance, reduced latency, easier handling of traffic spikes, and fewer potential bottlenecks.
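The offload arithmetic above can be checked in a few lines. The helper name `origin_units` is made up for this sketch, and integer math is used so the figures come out exact.

```python
def origin_units(total_units: int, offload_pct: int) -> int:
    """Traffic units that still reach origin at a given cache offload percentage."""
    return total_units * (100 - offload_pct) // 100


before = origin_units(100, 80)  # origin load at 80% offload
after = origin_units(100, 90)   # origin load at 90% offload
reduction_pct = (before - after) * 100 // before

print(before, after, reduction_pct)  # 20 10 50
```

The same function shows why gains near the top of the range matter most: moving from 90% to 95% offload halves origin traffic again.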

Better caching helps during peak usage times as well by reducing the maximum spikes in traffic to origin as more is served from cache. This can improve performance and availability during periods of high demand. Caching can also enhance user experience by reducing load times. For instance, even if each user has unique data, aspects of the user interface and shared resources can be cached, ensuring that users experience minimal delays when interacting with the application. Additionally, certain computations or data transformations can be cached after the initial computation. For example, generating a complex report or performing a resource-intensive calculation once and caching the result can prevent repeating the same heavy processing for subsequent similar requests. By caching frequently accessed yet unique data for short periods, the load on backend systems can be significantly reduced, with critical benefits for high-traffic applications where even a small reduction in database queries can greatly ease the backend load.

Caching can also improve the scalability of SaaS applications. By delegating repetitive data retrieval tasks to a caching layer, backend systems can accommodate more users and complex operations without sacrificing performance. Effective implementation strategies include developing intelligent cache key strategies, using short-lived caching, employing a multi-layered caching approach, and ensuring proper cache invalidation strategies.

How API caching works:

API caching temporarily stores the responses to API requests in a cache, such as an in-memory store, a database, or an external caching service. When a client makes a request to the API, the caching layer first checks if there's a cached response available for that specific request. This check typically involves examining parameters, headers, and the request URL to generate a cache key. If a cached response is found and is still considered valid according to caching policies, it's immediately returned to the client without querying the backend server. This bypassing of the backend server saves time and resources, leading to quicker response times.

If no cached response is available or the cached response is considered outdated (for example, expired), the request is sent to the backend server. The backend server then processes the request and creates a new response, which is stored in the cache for future use. This ensures that future requests with the same parameters can be served from the cache, improving efficiency. API caching can be implemented at different levels, such as client-side caching within the client application, server-side caching using in-memory caches, or edge caching closer to the client using CDNs or reverse proxy servers.
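The lookup flow described above can be sketched as a small in-memory cache. This is a minimal illustration under stated assumptions, not any particular product's implementation: the class and function names are hypothetical, the cache key is a SHA-256 hash of the method, URL, sorted parameters, and selected headers, and validity is a simple time-to-live. The clock is injectable so that expiry can be exercised without waiting.

```python
import hashlib
import time
from typing import Callable, Optional


class ApiCache:
    """Hypothetical in-memory response cache with a fixed time-to-live."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}  # key -> (expires_at, body)

    @staticmethod
    def make_key(method: str, url: str, params: dict[str, str],
                 vary_headers: dict[str, str]) -> str:
        """Build a cache key from the method, URL, parameters, and chosen headers."""
        parts = [method.upper(), url]
        parts += [f"{k}={v}" for k, v in sorted(params.items())]
        parts += [f"{k}:{v}" for k, v in sorted((k.lower(), v) for k, v in vary_headers.items())]
        return hashlib.sha256("\n".join(parts).encode()).hexdigest()

    def get(self, key: str) -> Optional[str]:
        """Return the cached body, or None if it is missing or expired."""
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, body = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: treat as a miss
            return None
        return body

    def put(self, key: str, body: str) -> None:
        self._store[key] = (self.clock() + self.ttl, body)


def fetch(cache: ApiCache, backend: Callable[[str, dict[str, str]], str], method: str,
          url: str, params: dict[str, str], headers: dict[str, str]) -> tuple[str, str]:
    """Serve from cache when possible; otherwise call the backend and store the result."""
    key = cache.make_key(method, url, params, headers)
    cached = cache.get(key)
    if cached is not None:
        return cached, "HIT"
    body = backend(url, params)
    cache.put(key, body)
    return body, "MISS"
```

Sorting the parameters and lowercasing header names means that two requests differing only in ordering or header case share one cache entry, which is one small example of the "intelligent cache key" idea.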

Strategically caching API responses can greatly enhance the performance, scalability, and reliability of organizations' APIs. This results in faster response times, reduced server load, and an improved user experience. Additionally, API caching is essential for managing traffic spikes and lessening the strain on backend servers, making it a vital element of modern web applications and services.

Increasing developer performance and customer trust

SaaS companies can benefit greatly from integrating Continuous Integration and Continuous Deployment (CI/CD) pipelines with a CDN that supports CI/CD and offers instant purging capabilities. CI/CD automates and streamlines the process of code integration, testing, and deployment, enabling rapid and reliable updates to software. When combined with a CDN capable of instant purging, SaaS providers can ensure that the latest code changes and content updates are swiftly and efficiently propagated across all edge servers worldwide. This integration minimizes the latency between deploying new features or bug fixes and their availability to end users, enhancing the overall user experience. Instant purging removes outdated or erroneous content immediately, reducing the risk of serving stale or incorrect data. Consequently, this synergy between CI/CD and a responsive CDN infrastructure boosts operational agility, improves site performance, and helps maintain a high standard of service reliability and security.

SaaS companies can and should rely on the ability to revert to previous configurations when making changes to their infrastructure or technology stack. This is essential for maintaining service reliability and minimizing disruptions. Changes in architecture or technology can lead to unexpected issues, affecting performance and user experience. The capability to roll back to a stable state quickly ensures uninterrupted service and builds customer trust. Robust rollback procedures also allow for more confident experimentation and innovation, as developers and engineers can test new solutions knowing they can easily revert changes if needed. Rollback capabilities are crucial for risk management, operational resilience, and promoting agile development.
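One common way to make rollback cheap is to treat every configuration change as a new immutable version and keep the old ones around. The sketch below is a hypothetical in-memory model of that idea; the `VersionedConfig` class and the `cache_ttl` setting are invented for illustration and do not represent any vendor's API.

```python
class VersionedConfig:
    """Hypothetical store that keeps every deployed configuration version."""

    def __init__(self, initial: dict):
        self._versions = [dict(initial)]  # version 0 is the starting state
        self.active = 0

    def deploy(self, changes: dict) -> int:
        """Create a new version from the active one plus changes, and activate it."""
        new_version = {**self._versions[self.active], **changes}
        self._versions.append(new_version)
        self.active = len(self._versions) - 1
        return self.active

    def rollback(self, version: int) -> None:
        """Re-activate an earlier version without deleting anything."""
        if not 0 <= version < len(self._versions):
            raise ValueError(f"unknown version {version}")
        self.active = version

    @property
    def current(self) -> dict:
        return self._versions[self.active]


config = VersionedConfig({"cache_ttl": 60})
config.deploy({"cache_ttl": 5})  # a change that turns out to hurt performance
config.rollback(0)               # return to the last known-good version
print(config.current)            # {'cache_ttl': 60}
```

Because nothing is overwritten, reverting is a pointer change rather than a redeployment, which is what makes experimentation low-risk.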

Better site performance and resilience with better caching

The ability to serve stale data from cache during an outage significantly benefits SaaS companies, primarily in maintaining service continuity and user satisfaction. When real-time data access is disrupted, having a cache of slightly outdated but still functional data ensures that users can continue operating without interruption. Some functionality is far better than no functionality. This capability is crucial for applications where constant availability is essential, such as in productivity tools, financial services, or customer support platforms. By providing stale data, SaaS companies can mitigate the impact of server downtimes, network issues, or backend failures, thereby preserving the user experience and trust. Additionally, this approach reduces the strain on recovery systems and allows for more controlled and efficient troubleshooting and restoration processes. Ultimately, serving stale data during outages enhances overall service resilience and reliability, ensuring critical operations persist despite technical challenges.
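A minimal sketch of serve-stale-on-error follows, loosely modeled on the `stale-if-error` idea from HTTP caching. The class name, the two time windows, and the status labels are assumptions made for this example; the point is only that an expired entry is kept around and returned when the origin call fails.

```python
import time
from typing import Callable


class StaleCache:
    """Hypothetical cache that serves expired entries when the origin fails."""

    def __init__(self, ttl: float, stale_if_error: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl                        # seconds an entry counts as fresh
        self.stale_if_error = stale_if_error  # extra seconds it may be served on error
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}  # key -> (stored_at, body)

    def fetch(self, key: str, origin: Callable[[str], str]) -> tuple[str, str]:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            return entry[1], "fresh"
        try:
            body = origin(key)
        except Exception:
            # Origin is failing: fall back to the stale copy if it is not too old.
            if entry is not None and now - entry[0] < self.ttl + self.stale_if_error:
                return entry[1], "stale"
            raise
        self._store[key] = (now, body)
        return body, "miss"
```

With `ttl=10` and `stale_if_error=300`, a response stored at time 0 is served as fresh for 10 seconds, can still be served as stale during an origin outage until 310 seconds, and after that the error is passed through to the caller.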

Flexible security solutions are critical

With the increasing prevalence of cyber threats, ensuring the security of customer data is paramount. This involves implementing strong encryption and access controls and continually updating security measures to counteract evolving threats. Many providers struggle to maintain compliance with diverse regulatory requirements across different regions, which can necessitate significant adjustments to their infrastructure. Additionally, the need for continuous monitoring and rapid incident response constantly strains resources, requiring sophisticated tools and skilled personnel. Balancing these security demands while maintaining cost-effective and efficient operations remains a persistent challenge for many SaaS providers.

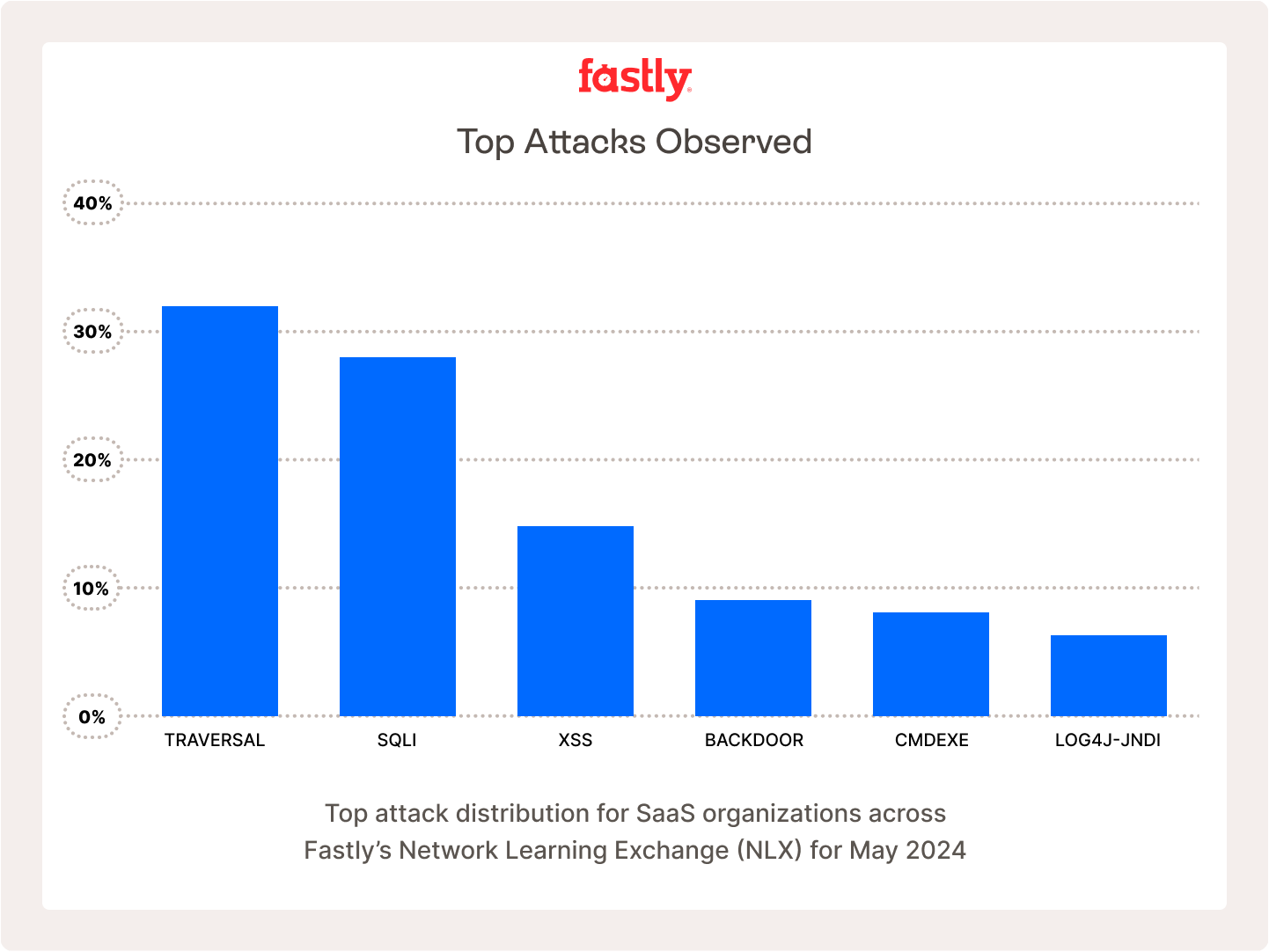

That would be hard enough to handle, but attack types and strategies are changing constantly, requiring security teams to continually evolve their responses. The accompanying chart shows the top attacks against SaaS organizations in May of 2024, but there’s no guarantee that June will look the same. The challenge for teams is to adapt efficiently as the attacks change and shift every day.

Account takeovers are another significant concern. Many bot management solutions make claims, but it’s important to select one that actually prevents account takeovers, both to protect end users and to prevent unauthorized access that may put even more user data at risk. Effective solutions can detect and block credential stuffing attacks, where attackers use stolen usernames and passwords to gain unauthorized access to user accounts. By analyzing login attempts for patterns indicative of automated attacks, such as rapid login attempts from multiple IP addresses, they can stop account takeovers before they succeed.

Preventing unauthorized account creation is a huge money-saver for many SaaS organizations, as it immediately removes the costs associated with fraudulent or fake accounts spun up by bots.

There are many bot solutions on the market, but selecting an edge cloud platform with a built-in bot capability can save you time, money, and energy by integrating bot mitigation with your other security operations and observability. Additional efficiency from vendor consolidation and unified customer support can make it a no-brainer. When evaluating bot solutions, ensure they cover all of the following capabilities.

Good bot solutions utilize behavioral analysis to identify suspicious activity during the login process. For example, they can detect anomalies in login behavior, such as unusual login times or locations, multiple failed login attempts, or deviations from typical user behavior. By flagging such activity, bot management solutions help prevent unauthorized access to user accounts.

Additionally, bot management solutions can incorporate CAPTCHA challenges or require users to undergo multi-factor authentication (MFA) if they exhibit suspicious behavior during the login process. These additional verification steps help ensure that only legitimate users can access their accounts, thus minimizing the risk of automated bot takeovers.

Bot management solutions can also enforce rate limits on login attempts to prevent brute-force attacks, in which attackers systematically try multiple username/password combinations to guess valid credentials. By limiting the number of login attempts within a certain time period, these solutions thwart brute-force attacks and protect user accounts from unauthorized access.
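Rate limiting on login attempts is often implemented as a sliding window per client. The sketch below is one simple way to do that, assuming the client is identified by a string such as an IP address or account name; the class name and the thresholds are illustrative, and timestamps are passed in explicitly so the behavior is easy to follow.

```python
from collections import defaultdict, deque


class LoginRateLimiter:
    """Allow at most max_attempts login attempts per client in a sliding window."""

    def __init__(self, max_attempts: int, window_seconds: float):
        self.max_attempts = max_attempts
        self.window = window_seconds
        self._attempts = defaultdict(deque)  # client id -> timestamps of recent attempts

    def allow(self, client_id: str, now: float) -> bool:
        attempts = self._attempts[client_id]
        # Forget attempts that have aged out of the window.
        while attempts and now - attempts[0] >= self.window:
            attempts.popleft()
        if len(attempts) >= self.max_attempts:
            return False  # over the limit: reject, or step up to CAPTCHA/MFA
        attempts.append(now)
        return True


limiter = LoginRateLimiter(max_attempts=3, window_seconds=60)
results = [limiter.allow("203.0.113.7", t) for t in (0, 1, 2, 3, 61)]
print(results)  # [True, True, True, False, True]
```

The fourth attempt is refused because three attempts already fall inside the 60-second window; by second 61 the two oldest attempts have aged out, so the client is allowed again.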

In addition, modern bot management solutions can utilize device fingerprinting techniques to identify and recognize devices used for login attempts. By analyzing device attributes such as IP address, browser type, user agent, and cookies, these solutions can identify suspicious login attempts coming from unfamiliar or previously unseen devices, thus helping to prevent unauthorized access to accounts.

Bot management solutions can also help prevent fraudulent account creation by analyzing registration patterns and detecting anomalies that indicate automated bot activity. For instance, they can spot patterns like rapid registration attempts from a single IP address, the use of disposable email addresses, or registration with known malicious domains. Moreover, bot management solutions can integrate with threat intelligence feeds to identify known malicious IPs, domains, or user agents associated with account takeover attempts or fraudulent account creation. By cross-referencing incoming login attempts or registration requests against these threat intelligence feeds, these solutions can proactively block malicious activity and protect user accounts.
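Two of the registration signals mentioned above, bursts of sign-ups from one IP address and disposable email domains, can be sketched as simple rules. Everything specific here is assumed for illustration: the domain list, the per-IP threshold, and the reason labels are placeholders, and a production system would draw on maintained threat intelligence feeds and many more signals.

```python
from collections import Counter

# Placeholder values for illustration only.
DISPOSABLE_DOMAINS = {"tempmail.example", "throwaway.example"}
MAX_SIGNUPS_PER_IP = 3  # assumed limit within one observation window


def flag_registrations(signups: list[tuple[str, str]]) -> list[tuple[str, str, list[str]]]:
    """Return (ip, email, reasons) for sign-ups in one window that look automated."""
    per_ip = Counter(ip for ip, _ in signups)
    flagged = []
    for ip, email in signups:
        reasons = []
        if per_ip[ip] > MAX_SIGNUPS_PER_IP:
            reasons.append("rapid-signups-from-ip")
        domain = email.rsplit("@", 1)[-1].lower()
        if domain in DISPOSABLE_DOMAINS:
            reasons.append("disposable-email")
        if reasons:
            flagged.append((ip, email, reasons))
    return flagged


window = [
    ("198.51.100.4", "a1@corp.example"),
    ("198.51.100.4", "a2@corp.example"),
    ("198.51.100.4", "a3@corp.example"),
    ("198.51.100.4", "a4@tempmail.example"),
    ("192.0.2.10", "jo@corp.example"),
]
for row in flag_registrations(window):
    print(row)
```

All four sign-ups from the busy address are flagged for volume, the last of them is flagged for its email domain as well, and the single sign-up from the other address passes.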

Finally, bot management solutions enable swift action to prevent account takeovers or unauthorized account creation by providing real-time monitoring of login and registration activities and sending alerts or notifications to security teams or administrators when anomalous activity is detected. Through these capabilities, bot management solutions help SaaS providers enhance the security of their platforms, protect user accounts from unauthorized access, and prevent fraudulent account creation attempts.

Recommendations and recap

The Software as a Service (SaaS) industry has big challenges, but finding efficiencies in these areas can present huge wins. Moving more to the network edge can help with scaling infrastructure efficiently, ensuring robust security, and maintaining compliance amidst evolving regulations. The competitive landscape and changing customer expectations drive constant innovation and business model disruption, so the choice of an edge cloud platform must account for future flexibility to avoid the kind of costly lock-in that SaaS companies have experienced as they move away from their overreliance on cloud architectures.

Key strategies for overcoming these challenges include:

Regaining Control: Some SaaS providers are returning to on-premises solutions to better manage their data environments and address performance and security needs.

Flexible Development: Embracing faster and more flexible development practices allows SaaS companies to quickly deliver new features and stay competitive.

Edge Computing: Utilizing edge caching reduces latency by storing data closer to users, improving application performance and user experience.

Infrastructure and Performance

On-premises infrastructure offers enhanced control, security, and compliance.

Hybrid cloud strategies combine public and private cloud benefits, optimizing cost-efficiency and resilience, and leave you free to move workloads as trends change.

A multi-CDN approach improves content delivery reliability and performance.

A gradual transition minimizes risk and keeps you from ending up in a situation where change is painful.

Favor infrastructure that is flexible, software-defined, and programmable.

Choose a partner that is tightly integrated with online marketplaces such as GCP or AWS.

Security

Cybersecurity remains a top priority, with solutions like bot management helping to prevent account takeovers and unauthorized account creation.

Observability in edge computing provides real-time insights, improving system performance and security.

Consider upgrading to security solutions that can run both on-prem and in the cloud to accommodate infrastructure changes.

Caching

Caching can significantly improve performance by storing frequently accessed data closer to end-users.

API caching reduces server load and response times, enhancing user experience and handling traffic spikes effectively.
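To make the API caching point concrete, here is a minimal sketch of a time-to-live (TTL) cache placed in front of an origin call. The `TTLCache` class and its method names are assumptions for illustration; an edge platform would implement this at the network layer rather than in application code, but the hit/miss logic is the same in spirit.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Hypothetical in-memory cache that serves a response until it expires."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        # Maps cache key -> (expiry time, cached value).
        self._store: dict[str, tuple[float, Any]] = {}

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        """Return the cached value, calling the origin only on a miss or expiry."""
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the origin is not contacted
        value = fetch()  # cache miss: one request reaches the origin
        self._store[key] = (now + self.ttl_seconds, value)
        return value

    def purge(self, key: str) -> None:
        """Invalidate one entry so the next request goes back to the origin."""
        self._store.pop(key, None)
```

During a traffic spike, every request for the same key inside the TTL window is answered from the cache, so the origin sees one request per key per window instead of one per user.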

CI/CD Integration

Integrating CI/CD pipelines with CDNs ensures rapid and efficient updates, enhancing service reliability and performance.

When combined with instant purge, development teams can quickly revert to a stable state if a deployment causes issues.
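One way to picture this in a pipeline is a deploy step that purges the cache after each release change and rolls back when a health check fails. The function below is a sketch only: `deploy`, `health_check`, `rollback`, and `purge` are hypothetical callables supplied by the pipeline, and how the purge is actually issued depends on the CDN in use.

```python
from typing import Callable


def deploy_with_rollback(
    deploy: Callable[[], None],
    health_check: Callable[[], bool],
    rollback: Callable[[], None],
    purge: Callable[[], None],
) -> bool:
    """Ship a release, then revert and re-purge if it is unhealthy.

    Returns True if the new release stays live, False if it was rolled back.
    """
    deploy()
    purge()  # drop cached copies of the previous release
    if health_check():
        return True
    rollback()
    purge()  # drop cached copies of the failed release
    return False
```

The second purge is what makes the rollback effective for users: without it, the edge could keep serving responses generated by the failed release until they expire.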

Conclusion

The SaaS industry faces ongoing challenges such as scaling infrastructure, ensuring security, complying with regulations, and meeting evolving customer expectations. This dynamic environment requires constant innovation and adaptation. Strategies like embracing edge computing, utilizing hybrid cloud models, and implementing observability and caching are crucial for maintaining performance, flexibility, and security. The industry's ability to balance these demands while optimizing performance and ensuring user satisfaction highlights its resilience and forward-thinking nature. As SaaS providers navigate these complexities, their strategic decisions and technological advancements will define their success in an ever-changing market landscape.

Related resources

Using Fastly’s edge cloud platform, SaaS companies can build and deliver performant and safe experiences.

Want to improve your performance or security? Check out this guide to discover the best solutions.

Fastly’s Next-Gen WAF delivers broad, highly accurate protection without required tuning.

With Fastly Origin Inspector and Domain Inspector, customers can easily monitor every origin and domain.

Meet a more powerful global network.

Our network is all about greater efficiency. With our strategically placed points of presence (POPs), you can scale on-demand and deliver seamlessly during major events and traffic spikes. Get the peace of mind that comes with truly reliable performance — wherever users may be browsing, watching, shopping, or doing business.

410 Tbps

Edge network capacity (as of December 31, 2024)

150 ms

Mean purge time with Instant Purge™ (as of December 31, 2019)

>1.8 trillion

Daily requests served (as of July 31, 2023)

~90% of customers

Run Next-Gen WAF in blocking mode (as of March, 2023)

Support plans

Fastly offers several support plans to meet your needs: standard, gold and enterprise.

Standard

Free of charge and available as soon as you sign up with Fastly.

Gold

Proactive alerts for high-impact events, expedited 24/7 incident response times, and a 100% uptime Service Level Agreement (SLA) guarantee.

Enterprise

Gives you the added benefits of emergency escalation for support cases and 24/7 responses for inquiries (not just incidents).