What is caching?

A cache is a place where data is stored temporarily so that it can be retrieved faster when it is needed. Caching is the process of storing that data. The word cache is also used more generally for a place where things are stored for convenience: campers or hikers, for example, may "cache" food supplies along a trail. Here, though, we look more closely at what caching means online.

In a perfect world, a user visiting your website would have all the data needed to display the site available locally. In practice, that data lives somewhere else, perhaps in a data center or a cloud service, and retrieving it has a cost. For the user, the cost is latency (the time needed to generate and load the data); for you, it is money. That bill includes the cost of hosting your content as well as what are known as egress costs, that is, the cost of moving data out of storage when visitors request it. The more traffic your website receives, the more you pay to serve content in response to requests, many of which may be identical.

This is where caching helps: it lets you store copies of your content so that it can be delivered faster while using fewer resources.

How caching works

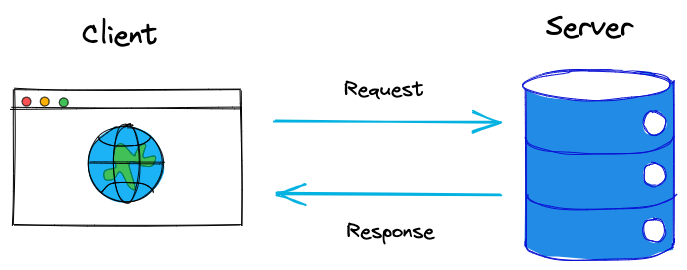

To understand how caching works, you first need to understand how HTTP requests and responses work. When a user clicks a link or types your website's URL into the address bar, the browser (the client) sends a request to the place where the content is stored (your origin server). The origin server then processes the request and sends a response back to the client.

This may seem instantaneous, but the origin server actually needs time to process the request, generate the response, and send it to the client. In addition, displaying your website requires many different assets. That means sending several requests for the various pieces of data that make up your site, including images, HTML pages, and CSS files.

This is where the user's local cache comes in. The browser can store some static assets on the user's device, such as the header image with your logo and your site's stylesheet. Performance is then better the next time the user visits your site. However, this kind of cache only benefits a single user.

If you want to cache assets for every user who visits your site, you should consider a remote cache, such as a reverse proxy.
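To make the difference with a per-user browser cache concrete, here is a minimal sketch of a shared cache sitting in front of an origin. The names `SharedCache` and `fake_origin` are hypothetical, and the "origin" is a plain function standing in for a real server; an actual reverse proxy also deals with headers, expiry, and concurrency.

```python
from typing import Callable, Dict


class SharedCache:
    """Toy shared cache placed between all clients and one origin server."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch_from_origin = fetch_from_origin
        self._store: Dict[str, bytes] = {}
        self.origin_requests = 0  # how many requests reached the origin

    def get(self, path: str) -> bytes:
        if path not in self._store:
            # Not cached yet: forward the request to the origin once.
            self.origin_requests += 1
            self._store[path] = self._fetch_from_origin(path)
        return self._store[path]


def fake_origin(path: str) -> bytes:
    # Hypothetical stand-in for a real origin server.
    return f"content of {path}".encode()


cache = SharedCache(fake_origin)
for _user in range(3):
    # Three different users ask for the same asset.
    cache.get("/logo.png")
print(cache.origin_requests)  # 1
```

Only the first of the three requests reaches the origin; the other two are answered from the shared copy, which a browser cache on a single device cannot do for other users.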

What are the different types of caching?

Several types of caching are used at different levels of Internet infrastructure, each with a specific purpose. Let's look at the different kinds of caching and how they contribute to a smoother browsing experience.

1. CDN caching

Caching on a content delivery network (CDN) reduces latency by letting you deliver your content from a location closer to the user, which significantly shortens load times. It works by storing copies of the content on edge servers distributed around the world. When a user requests content, the CDN serves it from the nearest edge server instead of waiting for a response from your origin server. This reduces the load on the origin server and makes it possible to handle more traffic by spreading requests across many servers.

2. Server-side caching

Unlike CDN caching, server-side caching stores ready-to-use copies of data on the origin server itself, so that the server does not have to regenerate each piece of content every time a page is requested. Being able to store reusable data objects reduces the number of database queries and improves your site's performance. Server-side caching comes in several forms, including:

Page caching: keeping a copy of HTML pages

Object caching: keeping reusable data objects

Opcode caching: storing precompiled PHP code

Database caching: keeping the results of database queries

Server-side caching is therefore used for more than just content: the cached data ranges from complete web pages to individual database query results.
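As an illustration of caching database query results, the sketch below memoizes a lookup with Python's standard `functools.lru_cache`. The `run_query` function and its data are hypothetical placeholders for a real database call; a production setup would also need a way to invalidate entries when the underlying rows change.

```python
from functools import lru_cache

query_count = 0  # counts how often the "database" is actually queried


def run_query(user_id: int) -> dict:
    """Hypothetical stand-in for a slow database query."""
    global query_count
    query_count += 1
    return {"id": user_id, "name": f"user-{user_id}"}


@lru_cache(maxsize=128)
def get_user_name(user_id: int) -> str:
    # The result is stored after the first call for a given user_id.
    return run_query(user_id)["name"]


get_user_name(7)
get_user_name(7)  # served from the cache, no query issued
get_user_name(8)
print(query_count)                      # 2
print(get_user_name.cache_info().hits)  # 1
```

Three lookups trigger only two queries, because the repeated request for user 7 is answered from memory.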

3. DNS caching

DNS (Domain Name System) resolution is the process web browsers use to translate a domain name, such as www.Fastly.com, into the specific IP address of the server that hosts the website. Web browsers use DNS caching to speed up this process: the results of DNS queries are stored locally, so they can be resolved faster on future visits. In short, before querying the global DNS servers, your browser checks its own DNS cache to see whether it already knows the correct IP address. This considerably reduces the time needed to resolve domain names and makes access to your website faster.

Unlike the two previous types of caching, DNS caching is local to the user rather than server-based. It also serves specifically to resolve domain names, not to deliver content or return database query results.
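The "check the local cache first, then ask the DNS servers" behavior can be sketched as follows. This is a simplified model, not how any particular browser implements it: `DnsCache` and `fake_resolver` are hypothetical names, the resolver is injected so the example runs without network access, and a single fixed lifetime of 300 seconds is an arbitrary assumption (real DNS records carry their own TTL).

```python
import time
from typing import Callable, Dict, Tuple


class DnsCache:
    """Toy local DNS cache: hostname -> (address, expiry time)."""

    def __init__(
        self,
        resolve: Callable[[str], str],
        ttl_seconds: float = 300.0,  # assumed fixed lifetime for every entry
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._resolve = resolve
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}
        self.lookups = 0  # number of real resolutions performed

    def lookup(self, hostname: str) -> str:
        now = self._clock()
        entry = self._entries.get(hostname)
        if entry is not None and entry[1] > now:
            return entry[0]  # answer still fresh: no DNS query needed
        # Unknown or expired: ask the resolver and remember the answer.
        self.lookups += 1
        address = self._resolve(hostname)
        self._entries[hostname] = (address, now + self._ttl)
        return address


def fake_resolver(hostname: str) -> str:
    # Hypothetical resolver returning an address from the documentation range.
    return "192.0.2.10"


dns = DnsCache(fake_resolver)
dns.lookup("www.example.com")
dns.lookup("www.example.com")  # answered from the local cache
print(dns.lookups)  # 1
```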

4. Content caching

Content caching is a broader category that covers duplicating and storing many types of web content in order to respond to user requests more efficiently. Content caching happens at the server level (as with server-side caching and CDN caching), but it can also take place at several other levels, including:

Browser caching: web content is stored locally on the user's device so that pages load (or reload) faster on a return visit.

Proxy caching: web content is stored on intermediate servers (proxies) located on the network between the user and the origin server.

Gateway caching: a copy of the data is stored on gateways within a company's network.

Content caching varies enormously: it can occur at several levels and cover a wide range of content, including images, videos, and complete HTML pages. By making copies of content easier to reach, caching gives users a faster, smoother browsing experience, lets sites load more efficiently, and reduces overall bandwidth use on the network.

CDNs and caching

Caching means keeping copies of data readily available for later use, so network systems need to know that those copies already exist. When the requested content is found in the cache, the result is a cache hit. A cache hit causes the content to be delivered directly from the edge server to the user, which reduces load times. The cache hit ratio is the percentage of requests that result in a cache hit.
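As a quick worked example of that percentage, the hypothetical helper below divides the number of hits by the total number of requests. The figures are invented for illustration.

```python
def cache_hit_ratio(hits: int, total_requests: int) -> float:
    """Return the share of requests served from cache, as a percentage."""
    if total_requests == 0:
        return 0.0  # no traffic yet, so no meaningful ratio
    return 100.0 * hits / total_requests


# 950 of 1,000 requests were answered from the cache.
print(cache_hit_ratio(950, 1000))  # 95.0
```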

CDNs generally have higher cache hit ratios than other server-side caching systems. This is because they deploy many edge servers worldwide, strategically positioned close to end users. CDNs also tend to serve a very large user base, so there is a high probability that another user in the same geographic area has already caused a copy of the data to be stored on that region's edge server.

However, a user's request does not always result in a cache hit. In some situations, the edge server cannot deliver the content from its cache and must fetch it from the origin server instead. This is called a cache miss, and it can happen for several reasons:

First request (cold cache)

Cache invalidation or purge

Capacity limits

Content updates

A cache miss can also occur because the content has expired. Content is cached on a CDN according to a TTL (Time to Live) value. Once that lifetime is reached, the content is removed from the cache. Developers and network engineers set TTL values according to the type of content being cached. Dynamic content, such as news articles, tends to have a short TTL of a few seconds or minutes. Static content (images, videos, CSS, and JavaScript files), by contrast, is given longer TTLs, measured in days or weeks.
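The effect of TTLs and purges on hits and misses can be sketched with a small in-memory cache. `TtlCache` is a hypothetical class written for this example, the clock is injected so that time can be advanced by hand, and the TTL values (60 seconds and one week) are illustrative choices rather than recommendations.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TtlCache:
    """Toy cache where each entry expires after its own TTL."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._entries: Dict[str, Tuple[bytes, float]] = {}

    def put(self, key: str, body: bytes, ttl_seconds: float) -> None:
        self._entries[key] = (body, self._clock() + ttl_seconds)

    def get(self, key: str) -> Optional[bytes]:
        entry = self._entries.get(key)
        if entry is None:
            return None  # miss: never cached, or purged
        body, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]
            return None  # miss: the TTL has been reached
        return body  # hit

    def purge(self, key: str) -> None:
        # Remove an entry before its TTL, e.g. after a content update.
        self._entries.pop(key, None)


now = [0.0]  # manually controlled clock for the demonstration
cache = TtlCache(clock=lambda: now[0])
cache.put("/news/today", b"<html>...</html>", ttl_seconds=60)
cache.put("/static/logo.png", b"PNG", ttl_seconds=7 * 24 * 3600)

now[0] = 120.0  # two minutes later
print(cache.get("/news/today"))       # None
print(cache.get("/static/logo.png"))  # b'PNG'
```

After two minutes the short-lived news page is a miss and would have to be fetched from the origin again, while the long-lived static asset is still a hit.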

Should you clear the cache?

Clearing the cache can be beneficial in some cases, but it is not always necessary or recommended. The most common reasons for clearing the cache are listed below.

Freeing up storage space

Locally, cached data can accumulate over time and take up too much storage space on your system. This can be especially problematic on personal devices with limited storage capacity, such as laptops or netbooks. Clearing the cache often frees up valuable storage space and helps your device run better.

Fixing performance problems

Over time, cached data can become corrupted or outdated. Websites may then load more slowly or break, preventing you from using essential features. Clearing the cache often resolves these problems by removing the corrupted or outdated files.

Improving privacy

Cached data can include sensitive information such as login credentials, browsing history, and other private data. Clearing your cache is a quick way to protect this confidential data by removing it from your system.

In some cases, however, clearing your cache is neither necessary nor advisable.

1. Data loss

When users clear their cache, they delete the data that lets websites and applications load faster. The downside is that websites and applications must then load those resources from the origin server again, so load times are longer until the cache is rebuilt.

2. Temporary fix

Clearing the cache can resolve some performance problems, but the fix is often temporary. If the underlying problem is not addressed, the performance problems will return once the cache is rebuilt. Identifying and fixing the root cause is essential for a long-term solution.

3. Loss of performance benefits

Better performance is one of the main benefits of caching. Deleting the data in your cache can mean losing that benefit.

How does Fastly use caching?

Fastly uses caching in its CDN to speed up the delivery of your content to your audience. By storing exact copies of your content on its servers around the world, Fastly reduces latency and server load so that your users get fast access from the nearest server.

Fastly's caching features include Instant Purge™ for rapid content updates, along with customizable caching rules. Fastly's approach improves the user experience through faster access to content while reducing bandwidth and egress costs.
