Have you ever wondered how effective your WAF (Web Application Firewall) really is? Wondered if it really even stops attacks? How many false positives does it produce? Did a recent change improve or adversely affect existing detection capabilities?

Most WAF technology is pretty difficult to manage, with long lists of regular expressions that really no one enjoys maintaining. So when you start from a negative position, it’s natural if these kinds of questions start making you think about not using a WAF at all.

We decided to address these questions with a WAF efficacy framework, which is the subject of this post. The framework provides a standardized way to measure the effectiveness of a WAF’s detection capabilities through continuous verification and validation. It helps identify gaps and provides a feedback loop for improvements and maintenance. It incorporates assessments of simulated attacks to test different attack types and distills the results into overall scores. These scores feed into a workflow for continuous improvement that can be used as a spot check of current efficacy or for trend analysis of efficacy as it evolves over time.

Our approach

A WAF inspects HTTP/S traffic before it reaches an application server. You can think of a WAF as the intermediary between an app and a client that analyzes all communication between them. It monitors for suspicious and anomalous traffic and protects against attacks by blocking requests based on specific rules so that unwanted traffic never reaches your application.

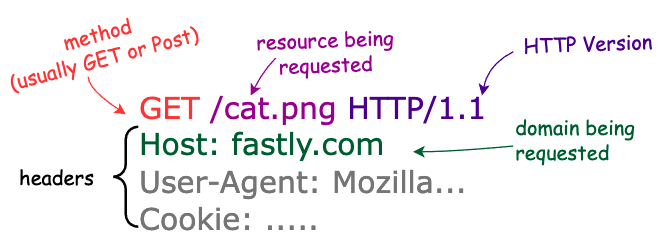

An HTTP/S request includes:

- A domain (like fastly.com)
- A resource (like /cat.png)
- A method (GET, POST, PUT, etc.)
- Headers (extra information sent to the server, also a place for attackers to put bad stuff)

There’s also an optional request body; GET requests usually don't have bodies while POST requests — which could send legitimate information or inject unwanted stuff — tend to contain bodies.

To visualize this, here’s an HTTP/1.1 GET request for fastly.com/cat.png:

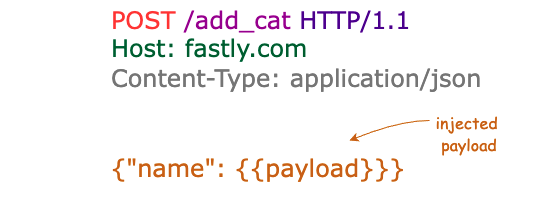

And this is an example POST request with a JSON body:
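As a minimal sketch, the snippet below renders the text of such a POST request. The helper name `render_request`, the `/post` path on httpbin.org, and the JSON fields are assumptions chosen purely for illustration.

```python
import json


def render_request(method, host, path, headers, body=b""):
    """Render an HTTP/1.1 request as text (illustrative helper, not a client)."""
    lines = [f"{method} {path} HTTP/1.1", f"Host: {host}"]
    lines += [f"{name}: {value}" for name, value in headers.items()]
    if body:
        # The body length is announced so the server knows where the body ends.
        lines.append(f"Content-Length: {len(body)}")
    # A blank line separates the headers from the optional body.
    return "\r\n".join(lines) + "\r\n\r\n" + body.decode("utf-8")


# Hypothetical JSON body; any field here is a place a payload could be injected.
body = json.dumps({"name": "cat", "format": "png"}).encode("utf-8")
text = render_request(
    "POST", "httpbin.org", "/post",
    {"Content-Type": "application/json"},
    body,
)
print(text)
```

The method, path, headers, and body are each a distinct position that a WAF has to inspect.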

Whether it’s the URL, body, cookies, or other HTTP headers, attackers are always looking for places in a request to set the stage for exploitation. This is why a main principle of the framework is to test whether payloads are detected in different positions of a request.

As with all security technology, when detection methods get stronger, attackers seek ways to work around the detections so they can continue to achieve their goals. With WAFs, one popular evasion method is to encode a payload in a way that bypasses detection. This is why we also include tests that incorporate a mix of encoding techniques. We inject each predefined payload into each payload position and both the raw and encoded versions of the request are sent to the target — providing a valuable means to test detection coverage even in the presence of evasion.
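The idea of crossing every payload with every position and encoding can be sketched as follows. The position names, the choice of encoders, and the `InjectionCase` type are assumptions for illustration; they are not taken from the framework's own templates.

```python
import base64
from dataclasses import dataclass
from urllib.parse import quote

# Hypothetical request positions to inject into.
POSITIONS = ("query", "header", "cookie", "body")

# Hypothetical encoders: the raw payload plus two common encodings.
ENCODERS = {
    "raw": lambda p: p,
    "url": lambda p: quote(p, safe=""),
    "base64": lambda p: base64.b64encode(p.encode("utf-8")).decode("ascii"),
}


@dataclass(frozen=True)
class InjectionCase:
    position: str   # where in the request the payload is placed
    encoding: str   # which encoder produced `value`
    value: str      # the (possibly encoded) payload to send


def build_cases(payloads):
    """Return one case per payload x position x encoding combination."""
    return [
        InjectionCase(position, name, encode(payload))
        for payload in payloads
        for position in POSITIONS
        for name, encode in ENCODERS.items()
    ]


cases = build_cases(["' OR 1=1--"])
print(len(cases))  # 12 = 1 payload x 4 positions x 3 encodings
```

Each resulting case corresponds to one request sent to the target, so coverage grows multiplicatively with the number of payloads, positions, and encodings.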

But, of course, a magical payload fairy doesn’t just drop payloads from the sky — and relevant payloads can change over time as attack methods evolve. We suggest PayloadsAllTheThings, PortSwigger, Nuclei, exploit-db, and Twitter as a starting point to cultivate a list of payloads as well as to routinely update your payload lists.

You don’t need a vulnerable app to test the efficacy of a WAF. The goal is to measure the effectiveness of a WAF’s detection capabilities, not test whether vulnerabilities are present in the applications themselves. After all, the dream end state with a WAF is that vulnerabilities in your apps no longer lead to incidents; validating that your WAF protects against attack classes means you don’t have to sweat the small stuff like specific vulnerabilities anymore. Therefore, using a simple HTTP request and response service like https://httpbin.org is sufficient for this purpose.

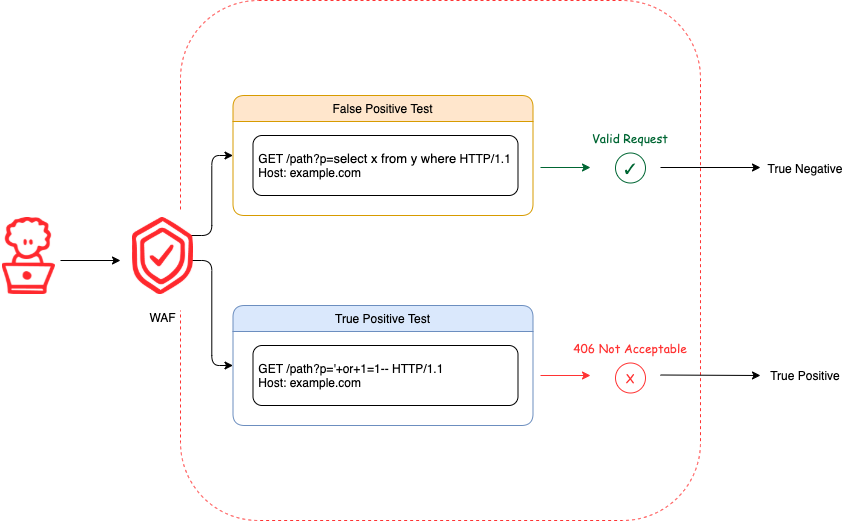

For each attack type, we test two different cases to evaluate WAF efficacy: true positives and false positives. A true positive test ascertains whether attack payloads are correctly identified. A false positive test ascertains whether acceptable payloads are incorrectly identified.

To determine whether the WAF correctly identifies a request, we examine the response status code. Most WAF solutions should support creating a custom response status code for blocked requests (for an example of this, read our documentation on it). If your WAF solution isn't capable of setting custom response codes, it’s worth reconsidering that investment.

Within the context of our WAF efficacy framework, we specifically look for the receipt of a 406 Not Acceptable response code when a request is blocked. In a true positive test case, receiving a response code other than 406 is considered a false negative. Conversely, in a false positive test case, receiving a response code other than 406 is considered a true negative. These results are used to calculate an efficacy score per attack type; these scores are then aggregated into an overall score for WAF efficacy across all attack types.
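The classification rule described above can be sketched in a few lines. The function and variable names are illustrative assumptions; only the 406 convention comes from the framework.

```python
from collections import Counter

BLOCK_STATUS = 406  # status code the WAF returns for blocked requests


def classify(is_attack: bool, status_code: int) -> str:
    """Map one test result to TP, FN, FP, or TN."""
    blocked = status_code == BLOCK_STATUS
    if is_attack:
        # True positive test case: the payload should have been blocked.
        return "TP" if blocked else "FN"
    # False positive test case: the payload should have been allowed.
    return "FP" if blocked else "TN"


def tally(results):
    """Count outcomes for an iterable of (is_attack, status_code) pairs."""
    return Counter(classify(is_attack, status) for is_attack, status in results)


observed = [(True, 406), (True, 200), (False, 200), (False, 406)]
counts = tally(observed)
print(counts["TP"], counts["FN"], counts["TN"], counts["FP"])  # 1 1 1 1
```

Keeping one tally per attack type gives the per-type counts that the efficacy scores are computed from.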

Evaluating results

Once you generate individual and aggregate efficacy scores, how do you turn these metrics into knowledge that can inform an action plan? One important lens through which you should evaluate these results is your organization’s priorities. Does your organization prefer maximizing traffic to its application, even if some attacks slip through the cracks, or does it prefer blocking as many attacks as possible, even if that results in reduced traffic? From what we’ve seen across our customer base, the preference is usually to maximize traffic: a reduction in traffic can impact revenue, which is the worst outcome from the perspective of the business.

In practice, infallible protection from web attacks is impossible unless you quite literally block every request, which defeats the fundamental purpose of running an app on the internet. This is why finding the right balance between false positives and false negatives is a key factor in determining the efficacy of a WAF solution. Every security tool has false positives and false negatives. If it were possible to be 100% certain in all cases, security would be a solved problem.

The higher the false positive rate, the more likely you are to detect an attack — but legitimate traffic will be incorrectly identified as an attack, a problem that will only be exacerbated in blocking mode. Compounding this, a false positive is like a false alarm. By nature, humans will start tuning out alerts after enough false positives, which not only increases the likelihood of real attack traffic remaining unaddressed but also erodes the return on investment of your WAF. There’s also a cognitive price to pay: dealing with false alarms leads to burnout, which makes it harder for people to perform well and can cause greater turnover on teams.

On the flip side, a higher false negative rate means a lower chance of false positives, which results in high-fidelity alerts — but also means there’s a higher chance that real attack traffic won’t be detected.

To account for the disparity between these negative and positive outcome classes, our WAF efficacy framework incorporates a metric called balanced accuracy. Balanced accuracy accounts for the imbalance in classes and is a good measure when you are indifferent between correctly predicting the negative and positive cases. Your job is to use these metrics sensibly as they apply to your business and the systems you protect. We’ll discuss how balanced accuracy is calculated in a later section.

Automating away toil

Manual testing is far from easy and can swiftly spiral into an infeasible task when trying to account for all the ways you can test and measure results. This is especially true when working with large data sets of attack payloads. Tests should be reproducible and flexible, allowing you to focus on the results to adapt your web appsec strategy based on tangible evidence.

To that end, we chose an open source project called Nuclei as the framework’s underpinnings. Nuclei takes care of many of the daunting, manual, repetitive tasks of testing through its use of simple YAML-based templates. Nuclei templates define how the requests will be sent and processed. They’re also fully configurable, so you can control every aspect of the requests that will be sent.

Since gleaning new knowledge is vital to fuel feedback loops, every request is recorded and logged in JSON format. The logs include request/response pairs and additional metadata. You can use these logs for dashboarding, historical comparisons, and other insights that help you improve your WAF strategy. As an example, we uploaded the results to a Google Cloud Storage (GCS) bucket, serving as a dataset to create a table in BigQuery; from there, we connected the data to Data Studio to generate informative reports with scores.

Since automation enables scalability and repeatability — and saves you and your team from tedious toil — we recommend stitching these steps together to create a CI/CD pipeline for performing WAF efficacy tests. You can define a workflow with the following steps:

1. Build your test target
2. Run efficacy tests
3. Save the results to a backend of your choosing
4. Generate reports
5. Rinse and repeat at your preferred cadence

Getting started

To share this capability with the broader security community, the Fastly Security Research Team created an open source project called wafefficacy, which includes the initial code and templates you need to get started. The project provides boilerplate examples for Command Execution (cmdexe), SQL Injection (sqli), Traversal (traversal), and Cross-Site Scripting (xss) — ensuring you can immediately kick off efficacy tests for the major attack types.

There are two requirements to check before performing an efficacy test:

- The WAF you’re testing must be configured to block attacks.
- A response status code must be set for when a request is blocked. By default, wafefficacy checks for the receipt of 406 Not Acceptable.

Once you've completed your initial setup, you can run the executable binary from the project directory by providing a target URL or host:

./wafefficacy run -u https://example.com

When the assessment is complete, the tool prints the efficacy scores to standard output.

Efficacy score

Let’s break down how we calculate the efficacy scores using balanced accuracy as our metric.

We start with a confusion matrix:
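The standard two-class layout is shown here, with rows for the actual class of a test request and columns for what the WAF predicted. The "blocked/allowed" and "attack/benign" labels are added for orientation.

$$
\begin{array}{l|cc}
 & \text{Predicted positive (blocked)} & \text{Predicted negative (allowed)} \\
\hline
\text{Actual positive (attack)} & \text{TP} & \text{FN} \\
\text{Actual negative (benign)} & \text{FP} & \text{TN}
\end{array}
$$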

In the above, the “positive” or “negative” in TP/FP/TN/FN refers to the prediction made, not the actual class. (Hence, a “false positive” is a case where we wrongly predicted positive.)

Balanced accuracy is based on two commonly used metrics:

Sensitivity (also known as the true positive rate) answers the question: “How many of the positive cases did I detect?”

Specificity (also known as the true negative rate, or 1 - false positive rate) answers the complementary question: “How many of the negative cases did I detect?”
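In terms of the confusion matrix counts, these two rates and their average can be written as follows, using the standard definition of balanced accuracy as the arithmetic mean of sensitivity and specificity:

$$
\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad
\text{Specificity} = \frac{TN}{TN + FP}, \qquad
\text{Balanced accuracy} = \frac{\text{Sensitivity} + \text{Specificity}}{2}
$$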

Let’s use an example to illustrate how balanced accuracy can be a better judge of detections in the imbalanced class setting. Assume we simulate SQL injection attacks against a WAF and we get the results shown in the confusion matrix below:

This confusion matrix shows that of 750 true positive test cases, 700 were correctly blocked (true positives) while 50 were missed (false negatives). Likewise, of 105 false positive test cases, 5 were incorrectly blocked (false positives) while 100 were correctly allowed (true negatives).

Using this information, here is the computation for balanced accuracy:
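With the counts above (TP = 700, FN = 50, TN = 100, FP = 5), the arithmetic works out as:

$$
\text{Sensitivity} = \frac{700}{700 + 50} \approx 0.9333, \qquad
\text{Specificity} = \frac{100}{100 + 5} \approx 0.9524
$$

$$
\text{Balanced accuracy} = \frac{0.9333 + 0.9524}{2} \approx 0.9429
$$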

Based on the calculation of balanced accuracy, the WAF is approximately 94.3% effective in providing protection against SQL Injection attacks.

For the sake of comparison, let’s assume we tested another WAF and the false positive results were inverted: out of 105 false positive test cases, 100 were signaled as false positives while 5 were signaled as true negatives.

This would change the percentage of specificity as follows:
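With TN = 5 and FP = 100 for the second WAF, specificity drops to about 4.8%:

$$
\text{Specificity} = \frac{5}{5 + 100} \approx 0.0476
$$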

Which would also change the percentage for balanced accuracy as follows:
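Sensitivity is unchanged at 700/750, so at full precision (without rounding the intermediate rates) the second WAF's balanced accuracy comes out at roughly 49%:

$$
\text{Balanced accuracy} = \frac{1}{2}\left(\frac{700}{750} + \frac{5}{105}\right) \approx 0.4905
$$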

Based on balanced accuracy, we could say with confidence that the first WAF is doing better (94.3%) than the second (49.0%) at providing protection against SQL Injection attacks.
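The same comparison can be reproduced with a small helper. This is a sketch of the standard balanced accuracy formula rather than the wafefficacy implementation, and the function name is an assumption.

```python
def balanced_accuracy(tp: int, fn: int, tn: int, fp: int) -> float:
    """Mean of sensitivity and specificity.

    Assumes at least one true positive test case and one false positive
    test case were run; otherwise a division by zero occurs.
    """
    sensitivity = tp / (tp + fn)  # share of attack payloads that were blocked
    specificity = tn / (tn + fp)  # share of benign payloads that were allowed
    return (sensitivity + specificity) / 2


first_waf = balanced_accuracy(tp=700, fn=50, tn=100, fp=5)
second_waf = balanced_accuracy(tp=700, fn=50, tn=5, fp=100)
print(f"first WAF:  {first_waf:.1%}")   # first WAF:  94.3%
print(f"second WAF: {second_waf:.1%}")  # second WAF: 49.0%
```

Both scores are computed at full precision. Rounding sensitivity and specificity before averaging can shift the last digit.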

Summary

It’s best to test your security before someone else does. Our WAF efficacy framework embraces the notion of continuous verification and validation. Through the use of automated attack simulations, it validates technical security controls, identifies gaps, and provides a way to report and measure tool efficacy. In fact, the same methodology can be applied against any security tool relevant to your company or systems.

Performing efficacy tests in this manner is a way of introducing controlled and safe tests that allow you to observe how well your controls will respond in real-world conditions. It can be an extremely valuable tool for introducing a feedback loop to help understand whether a control was implemented correctly and effectively.

We encourage you to take advantage of our process and tools in order to better understand and hopefully improve the effectiveness of your WAF.

If you would like to learn more about how we can help with your web application security needs, check out our security product overview. And if you’re interested in working with us, explore our job openings.
