With our new next-compute-js library, you can now host your Next.js application on our Compute@Edge platform – giving you the benefits of both the Next.js developer experience and our blazing-fast, world-wide edge network, and you don't even need an origin server.

Next.js is a popular JavaScript-based server framework that gives the developer a great experience – the ability to write in React for the frontend, and a convenient and intuitive way to set up some of the best features you need for production: hybrid static & server rendering, smart bundling, route prefetching, and more, with very little configuration needed.

Why use Next.js at all?

Frameworks like Next.js are an incredibly convenient way to build modern websites. Let's create a React component that says “Hello, World!” and serve it from the root of a website. First, you’re going to need Node.js. Create a directory, and use npm to install a few dependencies:

```shell
mkdir my-app
cd my-app
npm init -y
npm install react react-dom next
```

Let’s create a couple of directories to hold our content:

```shell
mkdir pages
mkdir public
```

Now, create a file at pages/index.js with the component.

```jsx
export default function Index() {
  return (
    <div>Hello, World!</div>
  );
}
```

Now with the file created, let’s go ahead and run the development server:

```shell
npx next dev
```

And point your web browser to http://localhost:3000/.

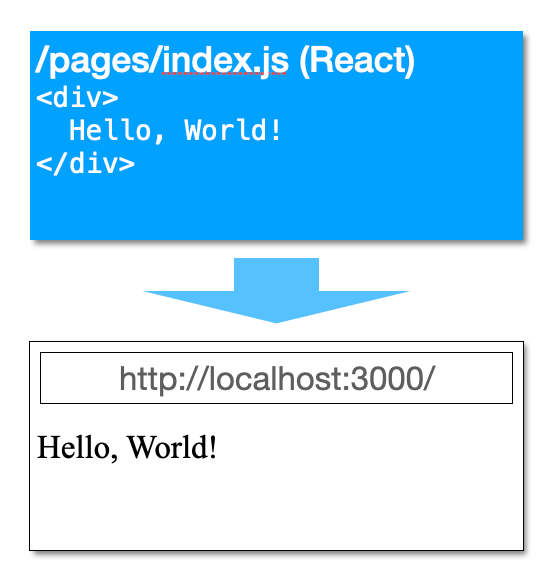

Amazing, isn’t it? Actually, it really is amazing. This may not look like much, but we’ve set up a full development environment with React. Simply because a file exists at pages/index.js, the Next.js server renders the React component, and its output is displayed in the browser.

You now have the full power of React in front of you. You can create custom components, bring in components you’ve written before, or load third-party ones. And if you make changes to pages/index.js, they are hot-reloaded, so you get immediate feedback in your browser during development.

Next.js routes your paths by convention: place a file in the file system, and Next.js serves it at the corresponding path. So if you add a similar file at pages/about.js, it will be available at http://localhost:3000/about. Dynamic segments in your paths are easy, too: create pages/users/[id].js (yes, with brackets in the filename), and the component in that file can read id from the router’s query object, for example through the useRouter hook. Do you want to serve static files, like images, fonts, or a robots.txt file? Just place them in the directory named public, and they will be served as is.
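To make the routing convention concrete, here is a minimal sketch of how a page file path could be compiled into a URL matcher that extracts dynamic segments. It is a hypothetical illustration, not how Next.js implements its router: compileRoute is an invented name, it expects a pathname without a query string, and it ignores catch-all segments such as [...slug].

```javascript
// Hypothetical sketch, not Next.js's real router: compile a page file path
// (relative to pages/) into a function that matches URL pathnames and
// extracts dynamic segments. Catch-all routes such as [...slug] are ignored.
function compileRoute(filePath) {
  // "users/[id].js" -> ["users", "[id]"]
  const segments = filePath.replace(/\.js$/, "").split("/").filter(Boolean);
  // A trailing "index" maps to its directory: "index.js" -> "/".
  if (segments[segments.length - 1] === "index") segments.pop();

  // urlPath is expected to be a pathname only (no query string).
  return function match(urlPath) {
    const parts = urlPath.split("/").filter(Boolean);
    if (parts.length !== segments.length) return null;

    const params = {};
    for (let i = 0; i < segments.length; i++) {
      const dynamic = /^\[(\w+)\]$/.exec(segments[i]);
      if (dynamic) {
        // A bracketed segment captures whatever is in that position.
        params[dynamic[1]] = decodeURIComponent(parts[i]);
      } else if (segments[i] !== parts[i]) {
        return null; // a static segment did not match
      }
    }
    return params;
  };
}

const userRoute = compileRoute("users/[id].js");
console.log(userRoute("/users/42")); // { id: '42' }
console.log(userRoute("/about"));    // null
console.log(compileRoute("index.js")("/")); // {}
```

In Next.js itself, predefined routes such as pages/users/new.js take precedence over dynamic ones such as pages/users/[id].js; this sketch does not model that ordering.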

Next.js comes with plenty of features, such as built-in CSS support, layouts, Server-Side Rendering, and MDX support, to name just a few, and many of them need little or no configuration. Next.js even lets you add API Routes: your API written in JavaScript, routed by placing JavaScript files under pages/api/.

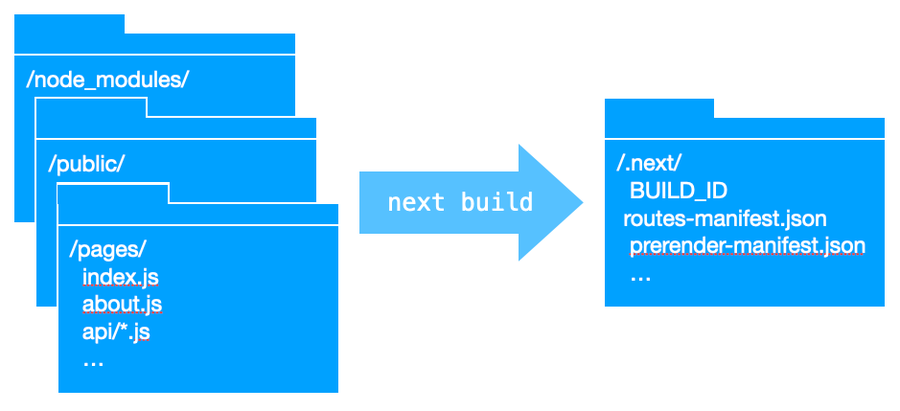

When you’re ready to go to production, you build your site to a set of intermediate files:

```shell
npx next build
```

This will compile and build all of the files needed to run each page of your site, and place them in a directory named .next. It applies a bunch of neat optimizations, like code splitting, so that only the minimum necessary JavaScript is sent to the browser when loading a particular page. And Next.js pre-renders every React component that it can on the server; if you’ve ever tried to set up Server-Side Rendering on your own, you know what an awesome time-saver this feature is. I won’t go into the details of everything that lives in .next, but it’s genius.

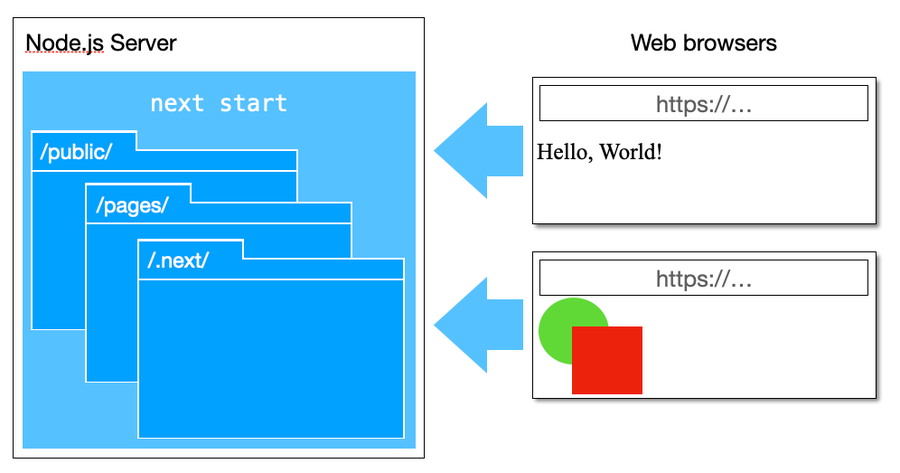

Once your site’s artifacts are built, you can start serving the site with:

```shell
npx next start
```

Congratulations, your optimized site is now up and running on a local Node.js server.

That’s great, but as you can see, Next.js is designed to be run on a Node.js server. If you are willing to do without some of the dynamic runtime features, you can also get it to export a set of static output files (using next export), which can be uploaded as a bundle of files to be served from a traditional web server.

But what if you use Fastly? We're not a traditional web server. You can certainly serve static sites through Fastly (and we’ll have some exciting news about this soon!), but what we run are Wasm binaries, which you can compile from JavaScript. So can we serve Next.js's built output AND execute the server-side components at the edge using Compute@Edge? We can indeed!

Introducing @fastly/next-compute-js

Say hello to the newest addition to our growing library of developer tools. next-compute-js provides a combination of project-scaffolding and a Next.js runtime for our Compute@Edge platform. It operates on the exact same built artifacts from the next build command, and aims to provide all of the features of the Next.js runtime (except for the bits that are dependent on the Vercel Edge runtime—which currently requires a full Node.js runtime).

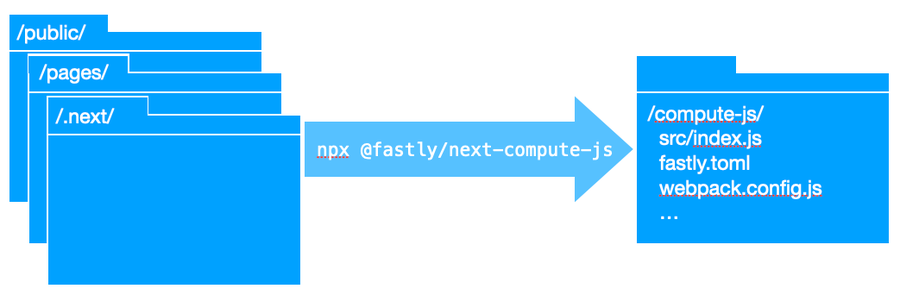

It’s very simple to use. Let’s continue from the example above. After you’ve run the next build command, you should have a .next directory. Let’s run next-compute-js like so:

```shell
npx @fastly/next-compute-js
```

The output will indicate that a Compute@Edge application is being initialized in a new directory at compute-js. Dependencies will be installed, and a few moments later, you’ll be returned to the command prompt.

Let’s try running this in the Fastly development server. Assuming you have the Fastly CLI installed, simply run the fastly compute serve command:

cd compute-js

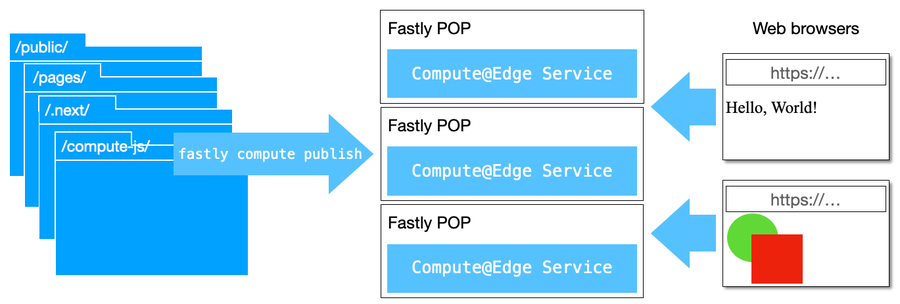

fastly compute serveAnd that’s it. Your Next.js application is now running as a Compute@Edge application (albeit still on your machine). And what if you want to go to production? If you guessed fastly compute publish you would be absolutely correct:

```shell
# This is equivalent to 'vercel deploy --prebuilt'
# if you were deploying to Vercel.
cd compute-js
fastly compute publish
```

In fact, I would recommend that you go ahead and add the following two lines to the scripts section of your Next.js project’s package.json file, so that the commands to build and then test or deploy your project are always at your fingertips:

```json
{
  "scripts": {
    "fastly-serve": "next build && cd compute-js && fastly compute serve",
    "fastly-publish": "next build && cd compute-js && fastly compute publish"
  }
}
```

And that’s really all there is to using this simple tool.

Develop as normal using next dev. Test with npm run fastly-serve, and when you’re ready, deploy to the cloud with npm run fastly-publish.

Wait! No origin server?

That’s right, you read that correctly. next-compute-js packages your entire .next directory as part of your Wasm binary, and all of your content is available worldwide on Fastly’s edge nodes at the geographic point closest to your visitor, without the need for an origin server for the Next.js application. Truly the best of both worlds — great developer experience, and great production performance.

In case you’re interested in how it works: next-compute-js provides a custom implementation of the NextServer class from Next.js, which loads the appropriate files from the Wasm binary.
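One rough way to picture "loading files from the Wasm binary" is to think of the packaged .next directory as an in-memory map from paths to file contents that the server consults wherever a Node.js server would read from disk. The sketch below is hypothetical: assets, contentTypes, readAsset, and the example paths are invented for illustration and are not part of next-compute-js, which generates and embeds the real files for you.

```javascript
// Hypothetical sketch: the packaged .next directory modeled as an in-memory
// map from paths to contents. The paths and contents are made-up examples;
// next-compute-js generates and embeds the real files for you.
const assets = new Map([
  ["/.next/server/pages/index.html", "<div>Hello, World!</div>"],
  ["/public/robots.txt", "User-agent: *\nAllow: /\n"],
]);

// A tiny extension-to-MIME-type table for the example files.
const contentTypes = {
  ".html": "text/html; charset=utf-8",
  ".txt": "text/plain; charset=utf-8",
  ".js": "application/javascript",
};

// Look up an embedded file where a Node.js server would read from disk.
function readAsset(filePath) {
  const body = assets.get(filePath);
  if (body === undefined) return null; // not packaged; caller can send a 404

  const dot = filePath.lastIndexOf(".");
  const ext = dot >= 0 ? filePath.slice(dot) : "";
  return {
    body,
    contentType: contentTypes[ext] || "application/octet-stream",
  };
}

console.log(readAsset("/public/robots.txt").contentType); // text/plain; charset=utf-8
console.log(readAsset("/missing.js")); // null
```

Because everything is resolved from memory, the same lookup works at any edge location without reaching back to an origin server.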

What features of Next.js are supported?

We have just about everything covered:

Static File Routing

Static and Dynamic Routed React Pages

Router / Imperative Routing / Shallow Routing / Link

Static Generation without Data

Static Generation with Static Props / Static Paths

Server-Side Rendering with Server-Side Props

Client-Side fetching and SWR

Built-in CSS / CSS Modules

Compression (gzip)

ETag generation

Headers / Rewrites / Redirects / Internationalized Routing

Layouts

Font Optimization

MDX

Custom App / Document / Error Page

API Routes / Middleware

The following features are not yet supported, but are on the radar:

Image Optimization

Preview Mode

Due to platform differences, we currently have no plans to support the following features (but never say never!):

Edge API Routes / Middleware

Incremental Static Regeneration

Dynamic Import

Even API Routes?

Yes, even API routes. Next.js lets you write APIs in JavaScript and expose them through the same file-system routing that you use for pages.

Let’s try it! Add a file to your project at pages/api/hello/[name].js (yes, with brackets in the filename):

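```javascript
export default function handler(req, res) {
  // The value of the dynamic segment [name] arrives in req.query.
  const { name } = req.query;
  res.statusCode = 200;
  res.setHeader("Content-Type", "application/json");
  res.end(JSON.stringify({ message: `Hello, ${name}!` }));
}
```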

Once you've added that, run the application:

```shell
npm run fastly-serve
```

Now, try visiting http://127.0.0.1:7676/api/hello/World, and you will see the following:

```json
{"message":"Hello, World!"}
```

You can see that the URL to call the API maps directly to the path of the file, and that the value of the dynamic segment [name] is accessible through the req.query object. Super simple.

And in case you were wondering about the request and response objects passed to the API route handler: we use @fastly/http-compute-js to give you Node.js-style req and res objects, and then extend those with the API Route Request Helpers so that the handlers you write are compatible with next dev. The stream interface of the req object is wired up to the body stream of the Compute@Edge Request. And when the handler finishes sending output to the res object, the sent data, along with any headers and the status code, is packed into a Response object that Compute@Edge can then serve.
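To see the general shape of that adaptation without the Fastly toolchain, here is a simplified sketch in plain Node.js. It is hypothetical: runApiRoute, the mock req and res objects, and the returned { status, headers, body } object are invented stand-ins for what @fastly/http-compute-js and the Compute@Edge Response actually do. It also assumes that route parameters are merged into req.query together with query-string values, with route parameters taking precedence, as Next.js documents for dynamic routes.

```javascript
// Hypothetical sketch: run a Next.js-style API route handler against minimal
// Node-style req/res stand-ins, then pack what it wrote into a plain
// { status, headers, body } object, standing in for a Compute@Edge Response.

// The handler from pages/api/hello/[name].js, minus the ES module export.
function handler(req, res) {
  const { name } = req.query;
  res.statusCode = 200;
  res.setHeader("Content-Type", "application/json");
  res.end(JSON.stringify({ message: `Hello, ${name}!` }));
}

// Assumes the handler finishes synchronously (no awaits before res.end()).
function runApiRoute(apiHandler, urlString, routeParams) {
  const url = new URL(urlString);

  // Query-string values first, route params second, so route params win.
  // Repeated query keys keep only their last value in this sketch.
  const query = { ...Object.fromEntries(url.searchParams), ...routeParams };
  const req = { method: "GET", url: url.pathname + url.search, headers: {}, query };

  // Collect everything the handler writes.
  const headers = {};
  const chunks = [];
  const res = {
    statusCode: 200,
    setHeader(key, value) {
      headers[key.toLowerCase()] = String(value);
    },
    write(chunk) {
      chunks.push(String(chunk));
    },
    end(chunk) {
      if (chunk !== undefined) chunks.push(String(chunk));
    },
  };

  apiHandler(req, res);
  return { status: res.statusCode, headers, body: chunks.join("") };
}

const result = runApiRoute(
  handler,
  "http://127.0.0.1:7676/api/hello/World",
  { name: "World" } // what a router would extract for [name]
);
console.log(result.status); // 200
console.log(result.body);   // {"message":"Hello, World!"}
```

Calling it with http://127.0.0.1:7676/api/hello/World?name=Query and the same route params still produces "Hello, World!", because in this sketch the route parameter overrides the query-string value.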

With this, and all of the other features of Next.js that we are able to support, Compute@Edge is a compelling platform for your Next.js application.

Your Next.js application has found a new home

The result is a seamless integration between Next.js and Compute@Edge – you can now host your Next.js application on Compute@Edge, without even needing an origin server. You can benefit from the awesome features of Next.js during development, and then deploy to Fastly Compute@Edge for worldwide presence on the world’s fastest edge computing platform in production.

In the next post, we’ll be taking a look at @fastly/compute-js-static-publish, one of the tools we briefly mentioned in this post, which allows you to serve entire static sites from the edge.

At Fastly, we continue to build tools that empower you to run even more code at the Edge and develop for it faster, while enabling the use of a wider range of tools. We’d love to know if you’re using these tools, and what you're doing with them. Reach out to us on Twitter and let us know!