If you build stuff on Fastly, chances are you spend a decent amount of time on our Developer Hub. Last month, we migrated it from our VCL platform to Compute. Here's how we did it and what you can learn from it.

First, it's important to stress that migrating away from VCL is not necessarily the right thing for you — both our VCL and Compute platforms are actively supported and developed, and VCL offers a fantastic setup experience that will give you great defaults for a fast site with zero code. However, if you want to start doing more complex tasks at the edge, you'll probably need the general purpose compute capabilities of Compute.

Before you start on the new stuff though, you'll probably want to bring the things you are doing in VCL over to your Compute service. In this post, I’ll look at how to migrate all the patterns used by developer.fastly.com from VCL to Compute, which makes for a nice example case study.

Groundwork

Since the existing developer.fastly.com service is VCL (and is a production service that we don't want to break!), the first step is to create a new Compute service that we can test with. This starts with installing the Fastly CLI and initializing a new project:

    > fastly compute init

    Creating a new Compute project.

    Press ^C at any time to quit.

    Name: [edge] developer-hub
    Description: Fastly Developer Hub
    Author: devrel@fastly.com

    Language:
    [1] Rust
    [2] AssemblyScript (beta)
    [3] JavaScript (beta)
    [4] Other ('bring your own' Wasm binary)
    Choose option: [1] 3

    Starter kit:
    [1] Default starter for JavaScript
        A basic starter kit that demonstrates routing, simple synthetic responses and
        overriding caching rules.
        https://github.com/fastly/compute-starter-kit-javascript-default
    Choose option or paste git URL: [1] 1

    ✓ Initializing...
    ✓ Fetching package template...
    ✓ Updating package manifest...
    ✓ Initializing package...

    Initialized package developer-hub to:
        ~/repos/Developer-Hub/edge

The Developer Hub is mostly a JavaScript application using the Gatsby framework, so we chose to write our Compute code in JavaScript too. The app that gets generated by the init command is a complete and working app, so it's worth deploying it to production immediately to get a nice develop-test-iterate cycle going:

    > fastly compute publish

    ✓ Initializing...
    ✓ Verifying package manifest...
    ✓ Verifying local javascript toolchain...
    ✓ Building package using javascript toolchain...
    ✓ Creating package archive...

    SUCCESS: Built package 'developer-hub' (pkg/developer-hub.tar.gz)

    There is no Fastly service associated with this package. To connect to an existing service add the Service ID to the fastly.toml file, otherwise follow the prompts to create a service now.

    Press ^C at any time to quit.

    Create new service: [y/N] y

    ✓ Initializing...
    ✓ Creating service...

    Domain: [some-funky-words.edgecompute.app]

    Backend (hostname or IP address, or leave blank to stop adding backends):

    ✓ Creating domain 'some-funky-words.edgecompute.app'...
    ✓ Uploading package...
    ✓ Activating version...

    Manage this service at:
        https://manage.fastly.com/configure/services/*****************

    View this service at:
        https://some-funky-words.edgecompute.app

    SUCCESS: Deployed package (service *****************, version 1)

So now we have a working service, served from the Fastly edge, and can deploy changes to it in a few seconds. Let's start migrating!

Google Cloud Storage

The main content of the Developer Hub is the Gatsby site, which is built and deployed to Google Cloud Storage, then served statically. We can start by adding a backend:

    fastly backend create --name gcs --address storage.googleapis.com --version active --autoclone

Now we edit the Compute app's main source file (in this case src/index.js) to load the content from GCS. We can start by replacing the entire content of the file with this:

    const BACKENDS = {
      GCS: "gcs",
    }

    const GCS_BUCKET_NAME = "fastly-developer-portal"

    async function handleRequest(event) {
      const req = event.request
      const reqUrl = new URL(req.url)

      let backendName
      backendName = BACKENDS.GCS
      reqUrl.pathname = "/" + GCS_BUCKET_NAME + "/production" + reqUrl.pathname

      // Fetch the index page if the request is for a directory
      if (reqUrl.pathname.endsWith("/")) {
        reqUrl.pathname = reqUrl.pathname + "index.html"
      }

      const beReq = new Request(reqUrl, req);
      let beResp = await fetch(beReq, {
        backend: backendName,
        cacheOverride: new CacheOverride(["GET", "HEAD", "FASTLYPURGE"].includes(req.method) ? "none" : "pass"),
      })

      if (backendName === BACKENDS.GCS && beResp.status === 404) {
        // Try for a directory index if the original request didn't end in /
        if (!reqUrl.pathname.endsWith("/index.html")) {
          reqUrl.pathname += "/index.html"
          const dirRetryResp = await fetch(new Request(reqUrl, req), { backend: BACKENDS.GCS })
          if (dirRetryResp.status === 200) {
            const origURL = new URL(req.url) // Copy of original client URL
            return createRedirectResponse(origURL.pathname + "/")
          }
        }
      }

      return beResp
    }

    const createRedirectResponse = (dest) =>
      new Response("", {
        status: 301,
        headers: { Location: dest },
      })

    addEventListener("fetch", (event) => event.respondWith(handleRequest(event)))

This code implements our recommended pattern for fronting a public GCS bucket, but let's step through it:

1. Define some constants. The BACKENDS object is a nice way for us to make the routing extensible for the other backends that will come later. The value "gcs" here matches the name we gave the backend earlier.

2. For convenience, we'll assign the client request event.request to req, and using the URL class we can turn req.url into a parsed URL object, reqUrl.

3. To reach the right object in the GCS bucket, we add the bucket name to the beginning of the path. In our case, we also had to add 'production', since we also store non-production branches in our GCS bucket.

4. If the incoming request ended with /, it makes sense to append 'index.html' to find directory index pages within the bucket.

5. If the response from Google is a 404, and the client request did not end in a slash, we try adding '/index.html' to the path and try again. If it works, we return an external redirect to tell the client to append a slash.

6. At the very end, we attach the request handler function to the client 'fetch' event.
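The path handling in these steps can be pulled out into a pure function and tried outside the edge runtime. This is only a sketch: `toBucketPath` and its `branch` parameter are hypothetical names that do not appear in the handler, and the sample request paths are made up for illustration.

```javascript
const GCS_BUCKET_NAME = "fastly-developer-portal"

// Hypothetical helper: maps a client request path to an object path in the bucket.
// `branch` is an assumed parameter; the handler in this post hard-codes "production".
function toBucketPath(clientPath, branch = "production") {
  let path = "/" + GCS_BUCKET_NAME + "/" + branch + clientPath
  // Directory-style requests are served from that directory's index page
  if (path.endsWith("/")) path += "index.html"
  return path
}

console.log(toBucketPath("/"))
// /fastly-developer-portal/production/index.html
console.log(toBucketPath("/reference/api/"))
// /fastly-developer-portal/production/reference/api/index.html
console.log(toBucketPath("/assets/logo.svg"))
// /fastly-developer-portal/production/assets/logo.svg
```

Keeping the mapping free of any Fastly-specific objects makes it easy to unit test before publishing.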

We compile and deploy:

    > fastly compute publish

    ✓ Initializing...
    ✓ Verifying package manifest...
    ✓ Verifying local javascript toolchain...
    ✓ Building package using javascript toolchain...
    ✓ Creating package archive...

    SUCCESS: Built package 'developer-hub' (pkg/developer-hub.tar.gz)

    ✓ Uploading package...
    ✓ Activating version...

    SUCCESS: Deployed package (service *****************, version 3)

Now, loading some-funky-words.edgecompute.app in a browser, we see the Developer Hub! A great start.

Custom 404 page

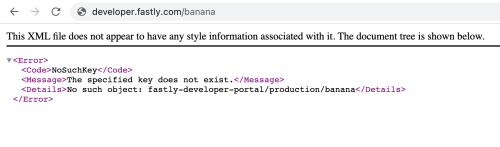

Pages that exist now work great, but if we try to load a page that doesn't exist, bad things happen:

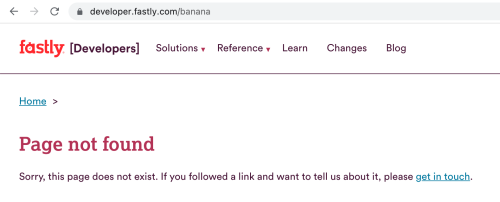

Gatsby does generate a nice 'page not found' page, and deploys it as '404.html' in the GCS bucket. We just need to serve that content if the client request prompted a 404 response from Google:

    if (backendName === BACKENDS.GCS && beResp.status === 404) {

      // ... existing code here ... //

      // Use a custom 404 page
      if (!reqUrl.pathname.endsWith("/404.html")) {
        console.log("Fetch custom 404 page")
        const newPath = "/" + GCS_BUCKET_NAME + "/404.html"
        beResp = await fetch(new Request(newPath, req), { backend: BACKENDS.GCS })
        beResp = new Response(beResp.body, { status: 404, headers: beResp.headers })
      }
    }

Note that we don't return the beResp we get directly from origin, because it will have a 200 (OK) status code. Instead, we create a new "404" Response using the body stream from the GCS response. This pattern is explored in detail in our backends integration guide.
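The re-wrapping step can be tried on its own with the standard Response class, which is also available in Node.js 18 and later. In this sketch, `asNotFound` is a hypothetical helper name and the `upstream` object is a stand-in for the 200 response that the bucket returns for 404.html.

```javascript
// Hypothetical helper: keep the upstream body and headers, but report a 404 status.
function asNotFound(upstreamResp) {
  return new Response(upstreamResp.body, {
    status: 404,
    headers: upstreamResp.headers,
  })
}

// Stand-in for the successful fetch of the custom error page
const upstream = new Response("<h1>Page not found</h1>", {
  status: 200,
  headers: { "content-type": "text/html" },
})

const notFound = asNotFound(upstream)
console.log(notFound.status) // 404
console.log(notFound.headers.get("content-type")) // text/html
```

Because the body is passed through as a stream, the error page does not need to be buffered in memory before it is sent to the client.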

As before, we run fastly compute publish to deploy, and now our 'page not found' errors start looking a lot nicer.

Redirects

Of course, developer.fastly.com also has a large number of redirects, so those are next up to migrate from VCL. We already use Edge Dictionaries to store them — one for exact redirects and one for prefix redirects. These can be loaded at the start of the request handler:

    async function handleRequest(event) {
      const req = event.request
      const reqUrl = new URL(req.url)
      const reqPath = reqUrl.pathname

      const dictExactRedirects = new Dictionary("exact_redirects")
      const dictPrefixRedirects = new Dictionary("prefix_redirects")

      // Exact redirects
      const normalizedReqPath = reqPath.replace(/\/$/, "")
      const redirDest = dictExactRedirects.get(normalizedReqPath)
      if (redirDest) {
        return createRedirectResponse(redirDest)
      }

      // Prefix redirects
      let redirSrc = String(normalizedReqPath)
      while (redirSrc.includes("/")) {
        const redirDest = dictPrefixRedirects.get(redirSrc)
        if (redirDest) {
          return createRedirectResponse(redirDest + reqPath.slice(redirSrc.length))
        }
        redirSrc = redirSrc.replace(/\/[^/]*$/, "")
      }

      // ... rest of the request handler ... //
    }

The exact redirects are pretty simple, but for the prefix redirects, we need to go around a loop, removing one URL segment at a time until the path is empty, and looking up each progressively shorter prefix in the dictionary. If we find one, then the non-matching part of the client request is appended to the redirect (so a redirect of /source => /destination will redirect a request for /source/foo to /destination/foo).
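To see the prefix loop in isolation, here is a small sketch that can run outside the edge runtime. `findPrefixRedirect` is a hypothetical helper name, and a plain Map stands in for the Edge Dictionary; the /source => /destination entry is the example from the paragraph above.

```javascript
// Hypothetical helper; `lookup` stands in for the Edge Dictionary's get() method.
function findPrefixRedirect(reqPath, lookup) {
  // Ignore a single trailing slash when matching
  let prefix = reqPath.replace(/\/$/, "")
  while (prefix.includes("/")) {
    const dest = lookup(prefix)
    // Append whatever part of the original path the prefix did not cover
    if (dest) return dest + reqPath.slice(prefix.length)
    // Drop the last path segment and try the shorter prefix
    prefix = prefix.replace(/\/[^/]*$/, "")
  }
  return null
}

const table = new Map([["/source", "/destination"]])
const lookup = (key) => table.get(key)

console.log(findPrefixRedirect("/source/foo", lookup)) // /destination/foo
console.log(findPrefixRedirect("/source/foo/bar/", lookup)) // /destination/foo/bar/
console.log(findPrefixRedirect("/other/page", lookup)) // null
```

Each iteration costs one dictionary lookup, so the work per request is bounded by the number of segments in the path.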

Canonicalize hostname

At some point, we thought it was a good idea for developer.fastly.com to also be available as fastly.dev. So our VCL service redirects users who request fastly.dev to developer.fastly.com. Let's migrate that next. We start by defining at the very top of the file what the canonical domain for the site is:

    const PRIMARY_DOMAIN = "developer.fastly.com"

Then, in the request handler, we read the host header, and redirect if we need to. During testing, we actually set PRIMARY_DOMAIN to the random-funky-words.edgecompute.app-style domain. The hostname check deserves to go above the redirects code, just after the declarations at the start of the request handler:

    async function handleRequest(event) {

      // ... existing code ... //

      const hostHeader = req.headers.get("host")

      // Canonicalize hostname
      if (!req.headers.has("Fastly-FF") && hostHeader !== PRIMARY_DOMAIN) {
        return createRedirectResponse("https://" + PRIMARY_DOMAIN + reqPath)
      }

      // ... rest of the request handler ... //
    }

Your VCL service might also be performing a TLS redirect for insecure HTTP requests. Compute handles this automatically and does not hand requests off to your code until a secure connection exists, so there's no need to migrate that.

To test this code, the service needs a second, non-canonical domain attached. We do that with fastly domain create:

    fastly domain create --name testing-fastly-devhub.global.ssl.fastly.net --version latest --autoclone

This is a really good use case for Fastly-assigned domains, so you don't need to mess around with DNS (yet). Now if we visit testing-fastly-devhub.global.ssl.fastly.net in the browser, our service redirects us to our primary domain.
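The hostname check from this section can also be written as a pure function, which makes it testable without deploying. This is a sketch only: `canonicalRedirect` is a hypothetical helper name, and the /learning/ path is an illustrative value.

```javascript
const PRIMARY_DOMAIN = "developer.fastly.com"

// Hypothetical helper: returns the URL to redirect to, or null if the request
// should continue through the handler. `hasFastlyFF` mirrors the
// req.headers.has("Fastly-FF") condition used in the handler.
function canonicalRedirect(hostHeader, reqPath, hasFastlyFF) {
  if (!hasFastlyFF && hostHeader !== PRIMARY_DOMAIN) {
    return "https://" + PRIMARY_DOMAIN + reqPath
  }
  return null
}

console.log(canonicalRedirect("fastly.dev", "/learning/", false))
// https://developer.fastly.com/learning/
console.log(canonicalRedirect("developer.fastly.com", "/learning/", false))
// null
console.log(canonicalRedirect("fastly.dev", "/learning/", true))
// null
```

As in the handler, only the path is carried over to the redirect; if query strings need to survive, reqUrl.search would have to be appended as well.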

Response headers

We then turned to the modifications that our VCL service is making to the responses that we get from GCS. Google adds a bunch of headers to responses that we don't want to expose to the client, like x-goog-generation. Since the backend might add more of these in future, it makes sense to filter the headers based on an allowlist. First, at the top of the file, we define what the allowed response headers are:

    const ALLOWED_RESP_HEADERS = [
      "cache-control",
      "content-encoding",
      "content-type",
      "date",
      "etag",
      "vary",
    ]

Then, just after the backend fetch, we can insert code to filter the headers we get back:

    // Filter backend response to retain only allowed headers.
    // keys() returns an iterator, so copy it to an array before looping and deleting.
    Array.from(beResp.headers.keys()).forEach((k) => {
      if (!ALLOWED_RESP_HEADERS.includes(k)) beResp.headers.delete(k)
    })

Conversely, there are some headers that we do want to see on client responses, like Content-Security-Policy, so we need to add those. Many of these only need to be present on HTML responses:

    if ((beResp.headers.get("content-type") || "").includes("text/html")) {
      beResp.headers.set("Content-Security-Policy", "default-src 'self'; scrip...")
      beResp.headers.set("X-XSS-Protection", "1")
      beResp.headers.set("Referrer-Policy", "origin-when-cross-origin")
      beResp.headers.append(
        "Link",
        "</fonts/CircularStd-Book.woff2>; rel=preload; as=font; crossorigin=anonymous," +
          "</fonts/Lexia-Regular.woff2>; rel=preload; as=font; crossorigin=anonymous," +
          "<https://www.google-analytics.com>; rel=preconnect"
      )
      beResp.headers.set("alt-svc", `h3-29=":443";ma=86400,h3-27=":443";ma=86400`)
      beResp.headers.set("Strict-Transport-Security", "max-age=86400")
    }

Adding and removing headers is a very common use case for both VCL and Compute services.
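The allowlist filter can be exercised against the standard Headers class, which is also available in Node.js 18 and later. In this sketch, `filterHeaders` is a hypothetical helper name and the sample header values are made up; only the header names come from this post.

```javascript
const ALLOWED_RESP_HEADERS = ["cache-control", "content-encoding", "content-type", "date", "etag", "vary"]

// Hypothetical helper: removes every header that is not on the allowlist.
function filterHeaders(headers, allowlist) {
  // Snapshot the names first so that deleting does not disturb the iteration
  for (const name of [...headers.keys()]) {
    if (!allowlist.includes(name)) headers.delete(name)
  }
  return headers
}

const headers = new Headers({
  "Content-Type": "text/html",
  "ETag": '"abc123"',
  "x-goog-generation": "1234567890",
})

filterHeaders(headers, ALLOWED_RESP_HEADERS)
console.log([...headers.keys()]) // [ 'content-type', 'etag' ]
```

Headers reports names in lowercase, which is why the allowlist entries are written in lowercase too.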

Passthrough backends

Another thing our VCL service does is to pass some requests to backends other than GCS, so that we can invoke cloud functions or other third-party services. As an example, we use Swiftype for our Developer Hub search engine, so to enable the search API to be available within the developer.fastly.com domain, we can add a new backend and then wire up certain request paths to target that backend.

First, we use the Fastly CLI to add the backend:

    fastly backend create --name swiftype --address search-api.swiftype.com --version active --autoclone

Then we add a constant to our source code that allows us to reference that backend:

    const BACKENDS = {
      GCS: "gcs",
      SWIFTYPE: "swiftype",
    }

Finally, we update the backend selection code to add some routing logic to select Swiftype when appropriate:

    let backendName
    if (reqPath === "/api/internal/search") {
      backendName = BACKENDS.SWIFTYPE
      reqUrl.pathname = "/api/v1/public/engines/search.json"
    } else {
      backendName = BACKENDS.GCS
      reqUrl.pathname = "/" + GCS_BUCKET_NAME + "/production" + reqUrl.pathname

      // Fetch the index page if the request is for a directory
      if (reqUrl.pathname.endsWith("/")) {
        reqUrl.pathname = reqUrl.pathname + "index.html"
      }
    }

You can repeat this for any other backends that you have. We use the same technique for Sentry and Formkeep, for example. We'll be writing about this pattern in more detail soon.
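If the list of passthrough backends grows, one option is to move the routes into a lookup table rather than extending the if/else chain. The sketch below is not how the Developer Hub is implemented: `PASSTHROUGH_ROUTES` and `selectBackend` are hypothetical names, and only the Swiftype route is taken from this post.

```javascript
const BACKENDS = { GCS: "gcs", SWIFTYPE: "swiftype" }
const GCS_BUCKET_NAME = "fastly-developer-portal"

// Hypothetical route table: exact client path => backend and rewritten path
const PASSTHROUGH_ROUTES = new Map([
  ["/api/internal/search", { backend: BACKENDS.SWIFTYPE, path: "/api/v1/public/engines/search.json" }],
])

// Hypothetical helper: picks the backend and the path to request from it.
function selectBackend(reqPath) {
  const route = PASSTHROUGH_ROUTES.get(reqPath)
  if (route) return { backend: route.backend, path: route.path }

  // Everything else is served from the GCS bucket
  let path = "/" + GCS_BUCKET_NAME + "/production" + reqPath
  if (path.endsWith("/")) path += "index.html"
  return { backend: BACKENDS.GCS, path }
}

console.log(selectBackend("/api/internal/search"))
// { backend: 'swiftype', path: '/api/v1/public/engines/search.json' }
console.log(selectBackend("/guides/"))
// { backend: 'gcs', path: '/fastly-developer-portal/production/guides/index.html' }
```

Adding another passthrough backend then means adding one entry to the table, plus the matching fastly backend create command.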

Deployment with GitHub Actions

Our VCL service was not managed in source control, so this is a good opportunity to fix that. The Compute version is stored along with the main source of the Developer Hub, allowing us to coordinate edge releases with backend updates and make them dependent so that we don't ship a change to edge logic if we fail to deploy to the backend.

We added two jobs to our CI workflow. One to build the edge app for PRs:

    build-fastly:
      name: C@E build
      runs-on: ubuntu-latest
      steps:
        - name: Checkout Code
          uses: actions/checkout@v2
        - name: Set up Fastly CLI
          uses: fastly/compute-actions/setup@main
        - name: Install edge code dependencies
          run: cd edge && npm install
        - name: Build Compute@Edge Package
          uses: fastly/compute-actions/build@main
          with:
            project_directory: ./edge/

And a second, to deploy the edge app once the GCS deploy has completed:

    update-edge-app:
      name: Deploy C@E app
      runs-on: ubuntu-latest
      if: ${{ github.ref_name == 'production' }}
      needs: deploy
      steps:
        - name: Checkout Code
          uses: actions/checkout@v2
        - name: Install edge code dependencies
          run: cd edge && npm install
        - name: Deploy to Compute@Edge
          uses: fastly/compute-actions@main
          with:
            project_directory: ./edge/
          env:
            FASTLY_API_TOKEN: ${{ secrets.FASTLY_API_TOKEN }}

Next steps

With the migration complete, we have the same features as we had with the VCL service. But now that the Developer Hub is fronted by Compute, we can move all our cloud functions into our edge compute code directly and start doing more heavy lifting at the edge.

Migrating services to Compute could be easier than you think. If you're planning your own migration, see our dedicated VCL to Compute migration guide, which covers many of the patterns we had to convert for the Developer Hub, and our solutions library, which has demos, example code, and tutorials that cover a wide range of use cases.

Not using Compute yet? Check out how our serverless edge compute platform can help you build faster, more secure, and more performant applications at the edge.