We're absolutely thrilled to announce that we've acquired Fanout, a platform for building real-time apps. We've been working on and off with Justin, Fanout's CEO and Founder, and the team for more than four years, supporting the needs of multiple joint customers, but a recent project made us realize that now was the right time for them to join the Fastly team.

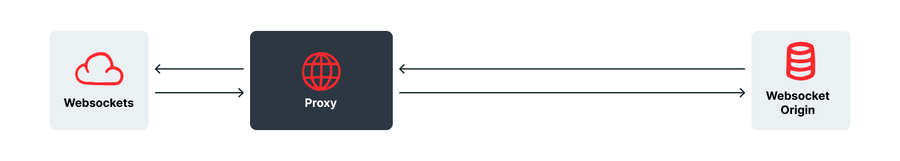

Why now? And why Fanout? We're so excited because Fanout thinks the same way we do. Putting a WebSocket proxy on an edge computing network is a very popular request, but the advantages are marginal: you get the usual latency improvement and DDoS protection, and it can make same-origin restrictions easier to deal with. However, there are very few smarts involved, and you, the customer, still have to implement your own WebSocket system at your origin.

So there are some gains for you, but we felt we could do better for our customers than the status quo solutions offered by other companies.

It comes down to the fact that dumb pipes are boring.

That's one of our founding principles. When we started Fastly, it was partly because moving bits blindly over a network was not only boring but also made little sense: move the smarts to the edge, and you can do far, far more. When you don't have to go back to origin for load balancing, for paywalls, even for waiting rooms or for personalized responses, your site is more scalable, more cacheable, and your users get their responses quicker.

A lesson learned from video

This is obviously true for HTML and API responses, but we were slightly surprised to find that it was also true for video. Modern video isn't the long stream of bits of the RealPlayer days of yore; instead, video is chopped up into small chunks, and those chunks are constantly loaded and played, like a Looney Tunes character laying train track while hanging from the front of the train. Instead of a video file, you load a "manifest" file that describes the stream: which resolutions are available, how long each chunk is, and a template for generating the URL of the chunk for a given timestamp in a given resolution.

The advantages of this are obvious. For example, starting playback midway through the video is easy, and switching resolution mid-stream is trivial, which makes this approach much better suited to situations where bandwidth fluctuates, such as on mobile.
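To make the manifest idea concrete, here is a minimal Python sketch. The `Manifest` class, the four-second chunk length, and the URL template are hypothetical simplifications (real formats such as HLS playlists and DASH manifests carry much more), but they show why seeking and switching resolution are cheap: each chunk URL is just a function of a timestamp and a resolution.

```python
import math
from dataclasses import dataclass


@dataclass
class Manifest:
    """Hypothetical, simplified manifest; real HLS/DASH manifests differ."""
    resolutions: list[str]
    chunk_seconds: float
    url_template: str  # hypothetical template with {resolution} and {index} slots

    def chunk_index(self, timestamp: float) -> int:
        # Which chunk contains this point in the video.
        return math.floor(timestamp / self.chunk_seconds)

    def chunk_url(self, timestamp: float, resolution: str) -> str:
        if resolution not in self.resolutions:
            raise ValueError(f"unknown resolution: {resolution}")
        return self.url_template.format(
            resolution=resolution, index=self.chunk_index(timestamp)
        )


manifest = Manifest(["480p", "720p", "1080p"], 4.0, "/video/{resolution}/chunk-{index}.ts")
```

Starting at 25 seconds means requesting `/video/1080p/chunk-6.ts` directly, with no need to download chunks 0 through 5; if bandwidth drops, the player simply asks for the next chunk in `480p` instead.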

For us it's great too. Our network is excellent at caching and delivering small objects. Our edge computing platforms, both VCL and our newer WASM-based Compute@Edge, mean you can do some really interesting things with video: everything from logging each chunk (giving you finer-grained analytics of how far people watched into the video) to load-balancing or failing over between encoders. There's also more impressive stuff, like interleaving subtly different fingerprinted chunks from different buckets in a pattern that encodes a unique ID.
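One simple way the fingerprinting trick could work is to keep two subtly different copies of every chunk and let each bit of a viewer's ID decide which copy that viewer receives. The Python sketch below assumes hypothetical bucket names, a hypothetical URL scheme, and a 16-bit ID; it is an illustration of the A/B idea, not how any particular product implements it.

```python
# Hypothetical buckets, each holding a subtly different, fingerprinted copy of every chunk.
VARIANT_BUCKETS = ("variant-a", "variant-b")
ID_BITS = 16  # assumed ID width; the pattern repeats every ID_BITS chunks


def chunk_url(user_id: int, index: int) -> str:
    """Pick the variant for this chunk from one bit of the viewer's ID."""
    bit = (user_id >> (index % ID_BITS)) & 1
    return f"https://{VARIANT_BUCKETS[bit]}.example.com/chunk-{index}.ts"


def recover_id(observed: dict[int, str]) -> int:
    """Rebuild the ID from which variant bucket was seen at each chunk index."""
    user_id = 0
    for index, bucket in observed.items():
        if bucket == VARIANT_BUCKETS[1]:
            user_id |= 1 << (index % ID_BITS)
    return user_id
```

Because the choice happens per request at the edge, every viewer gets a unique interleaving without the origin storing a separate copy of the video per viewer.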

For a long time, we thought the same way about WebSockets as we did about video. That there was nothing interesting we could do other than transfer bits over our network as cheaply as possible. But then we met Fanout, and they gave us our "Aha!" moment, just like we'd had with video.

How it works

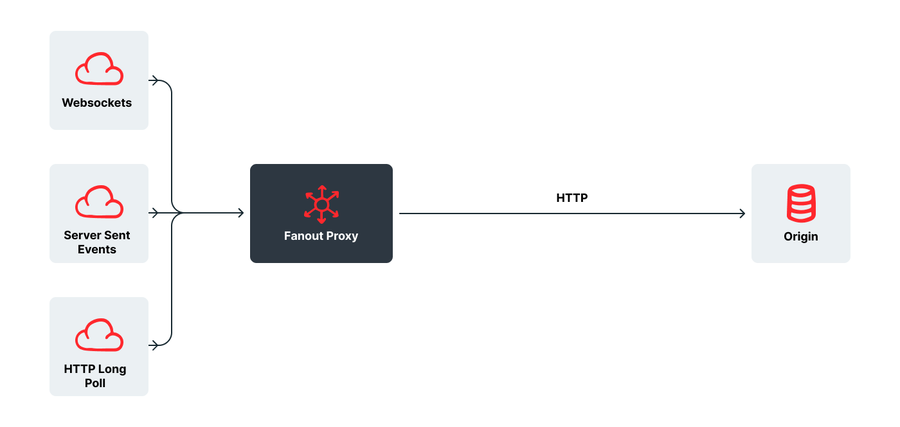

Fanout works differently. Subscription requests come in as normal (as WebSockets, HTTP streaming, HTTP long polling, or Server-Sent Events) but are converted by the Fanout Proxy into regular HTTP GET requests and sent to a designated URL on the customer's origin. The origin replies with a channel ID in a normal HTTP response, at which point the proxy finalizes the connection with the client using whichever protocol (WebSocket, SSE, etc.) the client requested.
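Here is a minimal sketch of what that origin endpoint might look like, written as a plain Python WSGI app. It assumes the GRIP convention, as we understand it, of returning the channel in a `Grip-Channel` response header together with a `Grip-Hold` header telling the proxy to keep the connection open; the `channel` query parameter is a hypothetical choice. It covers only the HTTP streaming case, so check the GRIP documentation for the exact headers your transport needs.

```python
from urllib.parse import parse_qs


def subscribe_app(environ, start_response):
    """Hypothetical origin endpoint the proxy calls for each new subscriber."""
    if environ.get("REQUEST_METHOD") != "GET":
        start_response("405 Method Not Allowed", [("Content-Type", "text/plain")])
        return [b"method not allowed\n"]

    # Assumption: the client names the channel it wants in the query string.
    channel = parse_qs(environ.get("QUERY_STRING", "")).get("channel", [""])[0]
    if not channel:
        start_response("400 Bad Request", [("Content-Type", "text/plain")])
        return [b"missing channel\n"]

    headers = [
        ("Content-Type", "text/plain"),
        # GRIP-style instructions to the proxy: hold the connection open as a
        # stream ("response" would be used for long polling)...
        ("Grip-Hold", "stream"),
        # ...and subscribe it to this channel.
        ("Grip-Channel", channel),
    ]
    start_response("200 OK", headers)
    return [b"[subscribed]\n"]
```

The origin does no connection management at all; it answers one ordinary request and returns. To try it locally you could serve it with the standard library's `wsgiref.simple_server.make_server`.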

So far, so simple, and the customer only has to implement a simple API endpoint at their origin. As an added bonus, as Fanout adds more protocols (say WebRTC data, WebHooks, or Android and iOS notifications) or upgrades existing ones, customers automatically get the benefit without having to do anything, just as Fastly customers automatically get support for IPv6, HTTP/2, and QUIC/HTTP3.

When the origin wants to publish a message, it makes an API call to Fanout with the channel ID and the message, and the proxy sends the message instantly to all connected clients subscribed to that channel, no matter what protocol they connected with.
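As a rough illustration, a publish call in the style of GRIP's HTTP publish API might look like the Python sketch below. The endpoint is an assumption (Pushpin's default local publish URL, as we understand it); a hosted Fanout or Fastly setup would use its own URL and credentials. The single item carries one payload per transport format, which is how one call can reach WebSocket, streaming, and long-poll clients alike.

```python
import json
import urllib.request

# Assumption: Pushpin's default local publish endpoint; adjust for your deployment.
PUBLISH_URL = "http://localhost:5561/publish/"


def build_publish_body(channel: str, message: str) -> dict:
    """One GRIP-style publish item with a payload for each transport."""
    return {
        "items": [
            {
                "channel": channel,
                "formats": {
                    "http-stream": {"content": message + "\n"},  # HTTP streaming clients
                    "http-response": {"body": message + "\n"},   # long-poll clients
                    "ws-message": {"content": message},          # WebSocket clients
                },
            }
        ]
    }


def publish(channel: str, message: str, url: str = PUBLISH_URL) -> int:
    """POST the publish body to the proxy and return the HTTP status code."""
    data = json.dumps(build_publish_body(channel, message)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

With a local proxy running, `publish("scores", "Goal!")` would push the message to every subscriber of the `scores` channel.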

As far as the client is concerned, this is a completely normal interaction made easier by the fact that it got to choose whatever transport it wanted (thus giving a way around any issues with firewalls or blocked ports). And the customer never needs to run their own WebSocket infrastructure with all the opex and scaling woes that entails — they just make a simple API call when there's new data.

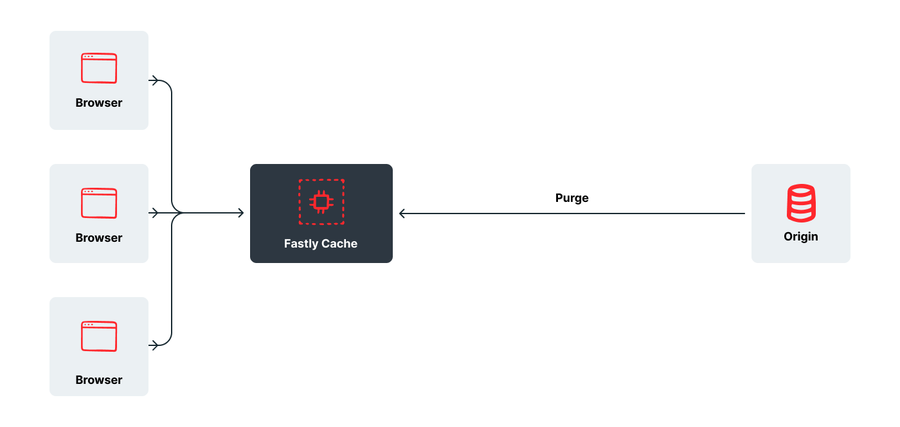

Which, come to think of it, is very similar to how Fastly Instant Purge works. You cache your content and when something changes, you make an API call that triggers a message to be sent out to all our caches with an update.

The big difference is that with real-time apps the change is sent to the client immediately rather than waiting for the client to request the content again.

Why real-time is important

At first glance it may seem like not many companies need real-time technologies like WebSockets, and that they would only be useful for apps like chat. But dig behind the scenes at huge sites and you'll find WebSockets used for all kinds of things. Stack Overflow, for example, uses them extensively, and sites as diverse as Trello and Quora use them for jobs like pushing updates to tickets and keeping "like" counts in sync. Facebook has stated that it uses long polling for real-time updates. Uber uses a modified version of Server-Sent Events to keep things up to date. Real-time gets used in everything from breaking stories on a news site to updating a stock ticker in a finance app or keeping stock levels up to date on an ecommerce or ticketing site. And it's not just for user communication; you can also use this technology for machine-to-machine messaging applications like IoT and sensors.

Different protocols have different advantages and disadvantages (another reason why we love Fanout's transport-agnostic approach), but it's undeniable that more and more sites will want to become more immediate in the future.

Open Source

Fanout provides an open-source implementation named Pushpin, as well as an open standard called GRIP (Generic Real-time Intermediary Protocol).

We have always believed in free software, as evidenced by over a decade of contributions to Varnish, by providing free services to open-source projects (everything from cURL and PyPI to the Linux Kernel Organization and the W3C), and even by open sourcing our native WASM compiler and runtime, Lucet, which powers Compute@Edge.

We're looking forward to continuing to support both Pushpin and GRIP and we've already had discussions about ways that we can help push the state of the art forward in an open and collaborative manner.

What’s next?

We look forward to building and growing Fanout capabilities into our 192 Tbps edge cloud network, which serves more than 1.4 trillion requests per day (as of Jan. 31, 2022).

The first step is probably to build that boring old WebSocket proxy in order to satisfy some requests for traditional WebSocket setups and give a migration path for people who've already invested in that setup. But very soon, we'll have the full Fanout proxy integrated.

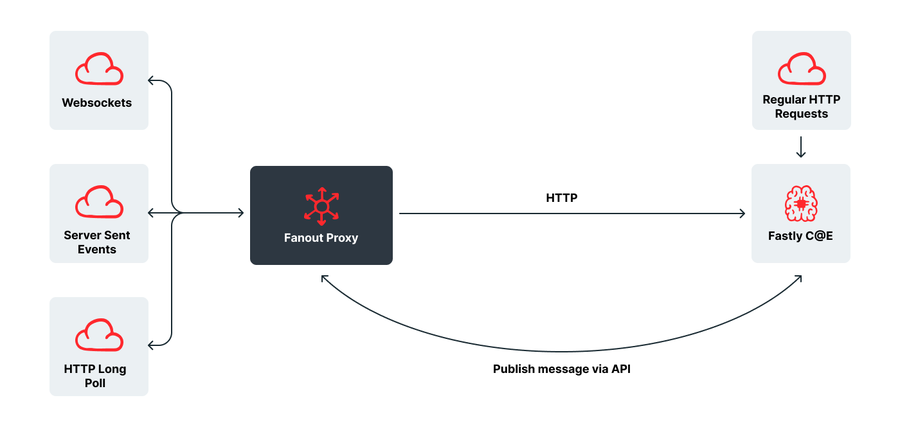

After that we'll integrate with our incredibly scalable, WASM-based Compute@Edge platform. This combination will be a massive leap forward for the real-time web, with the scale to support even the largest enterprise needs.

And this is where it gets exciting for us. Integrating with Compute@Edge gives customers the ability to add "smarts" to incoming connections. This can include everything from auth and paywalls to load-balancing, sharding, and failover. Basically, the same kind of amazing things our customers have built over the years can now be applied to real-time communications.
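To give a flavor of what those smarts could look like, here is a Python sketch of a decision an edge handler might make for each new real-time connection: check a token (standing in for auth or a paywall), shard by channel, and fail over to the next healthy origin. This is plain Python, not the Compute@Edge SDK; the secret, the origin names, and the health set are all hypothetical.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical values for illustration only.
SECRET = b"demo-secret"
ORIGINS = ["origin-0.example.com", "origin-1.example.com", "origin-2.example.com"]


def sign(user: str) -> str:
    """Token a client must present: HMAC-SHA256 of the user name."""
    return hmac.new(SECRET, user.encode("utf-8"), hashlib.sha256).hexdigest()


def route_subscription(
    user: str, token: str, channel: str, healthy: set[str]
) -> tuple[int, Optional[str]]:
    """Return (HTTP status, chosen origin) for a new real-time connection."""
    # Auth / paywall check: reject at the edge before the origin is involved.
    if not hmac.compare_digest(token, sign(user)):
        return 401, None
    # Sharding: every subscriber of a channel starts at the same origin.
    start = int(hashlib.sha256(channel.encode("utf-8")).hexdigest(), 16) % len(ORIGINS)
    # Failover: walk forward to the next healthy origin.
    for offset in range(len(ORIGINS)):
        origin = ORIGINS[(start + offset) % len(ORIGINS)]
        if origin in healthy:
            return 200, origin
    return 503, None
```

Hashing the channel name, rather than the user, keeps all of a channel's subscribers on one shard, so a publish for that channel only has to reach one origin's worth of state.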

We also see the benefits of per-message outbound processing: the origin sends out a generic message to every connected client, but just before delivery the message is augmented and personalized for each recipient with their personal details, geolocation-specific data, or customized interests.
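Here is a small Python sketch of that per-recipient step. The message shape (a `text` template, per-country `offers`, and `tags`) and the recipient fields are hypothetical; the point is that the origin publishes one generic message and each copy is tailored at delivery time.

```python
def personalize(generic: dict, recipient: dict) -> dict:
    """Build the copy of a generic message that one recipient will receive."""
    personal = {
        # Personal details: fill the recipient's name into the template text.
        "text": generic["text"].format(name=recipient.get("name", "there")),
    }
    # Geolocation-specific data: pick the offer for the recipient's country, if any.
    offer = generic.get("offers", {}).get(recipient.get("country"))
    if offer is not None:
        personal["offer"] = offer
    # Customized interests: keep only the tags this recipient follows.
    interests = recipient.get("interests", set())
    personal["tags"] = [tag for tag in generic.get("tags", []) if tag in interests]
    return personal


generic = {
    "text": "Hi {name}, the sale has started!",
    "offers": {"US": "Free shipping", "GB": "Free UK delivery"},
    "tags": ["shoes", "hats", "bags"],
}
```

For a recipient `{"name": "Ana", "country": "GB", "interests": {"hats", "bags"}}`, this yields the text "Hi Ana, the sale has started!", the UK offer, and only the `hats` and `bags` tags. The generic message itself is never modified, so every recipient starts from the same original.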

But if you push this idea even further, you can imagine even cooler applications.

Since you'll be able to respond to new connections and messages entirely in Compute@Edge and also publish new messages, you'd be able to build completely serverless bi-directional messaging apps. Imagine building a Twilio- or Discord-style application without having to have an origin at all. That future may be much closer than you think.
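The Python sketch below models that origin-less shape. The edge handlers (`handle_connect`, `handle_message`) are stateless functions that only return instructions: which channel a new connection joins, and what to publish when a message arrives. `ProxySimulator` is a hypothetical in-memory stand-in for the real-time proxy, which is where connections and channel subscriptions live. None of these names are real Fastly or Fanout APIs.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Publish:
    channel: str
    text: str


# Hypothetical stateless edge handlers: they return instructions, they store nothing.
def handle_connect(room: str) -> str:
    """Channel a new connection should be subscribed to."""
    return f"room:{room}"


def handle_message(user: str, room: str, text: str) -> Publish:
    """Turn an incoming client message into a publish on the room's channel."""
    return Publish(channel=f"room:{room}", text=f"{user}: {text}")


class ProxySimulator:
    """In-memory stand-in for the proxy that holds connections and subscriptions."""

    def __init__(self) -> None:
        self.channels: dict[str, list[str]] = defaultdict(list)
        self.delivered: dict[str, list[str]] = defaultdict(list)

    def connect(self, conn_id: str, room: str) -> None:
        self.channels[handle_connect(room)].append(conn_id)

    def receive(self, conn_id: str, user: str, room: str, text: str) -> None:
        publish = handle_message(user, room, text)
        # Fan the message out to every subscriber, including the sender.
        for subscriber in self.channels[publish.channel]:
            self.delivered[subscriber].append(publish.text)
```

Keeping the handlers stateless matters here: any edge node can run them, because the only long-lived state is the set of subscriptions the proxy already maintains.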

As you can tell, we're very, very excited about the possibilities this partnership promises. As we integrate Fanout into our infrastructure, there may be some changes to the way things work. We’ll communicate any changes to existing Fanout customers with ample time to adjust.