Lies, stats, debunking Cloudflare | Fastly

Principal Developer Advocate, Fastly

SVP, Engineering, Fastly

VP of Technology, Fastly

A couple of weeks ago Cloudflare, one of our competitors, published a blog post in which they claimed that their edge compute platform is roughly three times as fast as Compute@Edge. This nonsensical conclusion provides a great example of how statistics can be used to mislead. Read on for an analysis of Cloudflare’s testing methodology and the results of a more scientific and useful comparison.

It has often been said that there are three kinds of untruths: lies, damned lies, and statistics. This is perhaps unfair: Some statistics are pretty sound. But the statistics in Cloudflare's post are not.

Where to begin? Citing Catchpoint the way Cloudflare does makes the claim sound like an independent study by a third party. It's not. Catchpoint lets you configure its tools for your needs, which means you could use them to build a test based on a fair and rigorous benchmark standard, or you could use them to tell the story you want to tell.

OK, so what's wrong with their tests?

The design and execution of Cloudflare’s tests were flawed in several ways:

Cloudflare used a curated selection of Catchpoint nodes in the tests. There's no explanation of why this specific set of nodes was chosen, but it's worth noting that Cloudflare's infrastructure is not in exactly the same places as ours, and a biased choice of test locations affects the result dramatically.
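To see why node selection matters, here is a toy Python sketch with made-up numbers. The node names and TTFB values are hypothetical, not measurements of Fastly or Cloudflare. It assumes two platforms whose points of presence sit near different test nodes, and shows how the median, and therefore the apparent "winner," depends on which nodes you include.

```python
from statistics import median

# Hypothetical per-node median TTFB in milliseconds, as (platform_a, platform_b).
# These values are invented for illustration only.
TTFB_MS = {
    "node-1": (20, 45),
    "node-2": (25, 50),
    "node-3": (30, 55),
    "node-4": (60, 22),
    "node-5": (65, 24),
    "node-6": (70, 26),
}

# Two hypothetical "curated" subsets, plus the full set of nodes.
NEAR_A = ["node-1", "node-2", "node-3"]  # nodes close to platform A's PoPs
NEAR_B = ["node-4", "node-5", "node-6"]  # nodes close to platform B's PoPs
ALL_NODES = list(TTFB_MS)


def compare(nodes):
    """Return (median TTFB for A, median TTFB for B) over the chosen nodes."""
    a = median(TTFB_MS[n][0] for n in nodes)
    b = median(TTFB_MS[n][1] for n in nodes)
    return a, b


if __name__ == "__main__":
    for label, nodes in [("near A", NEAR_A), ("near B", NEAR_B), ("all", ALL_NODES)]:
        a, b = compare(nodes)
        winner = "A" if a < b else "B"
        print(f"{label:>6}: A={a} ms, B={b} ms -> {winner} looks faster")
```

With these invented numbers, the first subset makes A look twice as fast, the second makes B look more than twice as fast, and the full set gives a much closer result.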

Their tests compare JavaScript running on Cloudflare Workers, a mature, generally available product, with JavaScript running on Compute@Edge. Although the Compute@Edge platform itself is now generally available for production use, JavaScript support on Compute@Edge is a beta product, and our documentation clearly states that beta products are not ready for production use. A fairer test on this point would have compared Rust on Compute@Edge with JavaScript on Cloudflare Workers, which are at more comparable stages of the product lifecycle.

Cloudflare used a free Fastly trial account to conduct their tests. Free trial accounts are designed for limited use compared to paid accounts, and performance under load is not comparable between the two.

Cloudflare conducted their tests in a single short window (about an hour and a half) on a single day. This fails to normalize for daily traffic patterns or abnormal events, and it leaves the results vulnerable to random variation. If you ran several sets of tests at different times of day, it’s likely that at some point you’d get your desired outcome.
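A quick simulation shows why a short test window is fragile. This Python sketch models a single platform whose latency drifts with time of day; the 40 ms baseline, the exponential noise, and the per-window offset are all invented assumptions. It runs 24 short simulated "test windows" and compares the first window with the most favorable one. Reporting the best of many windows produces a better number than a typical window would, even though nothing about the platform changed.

```python
import random
from statistics import median


def window_medians(num_windows, samples_per_window, seed=0):
    """Median TTFB (ms) for each of several short, simulated test windows.

    Hypothetical model: every sample is a 40 ms baseline, plus a per-window
    offset standing in for time-of-day load, plus exponential noise (mean 10 ms).
    """
    rng = random.Random(seed)
    medians = []
    for _ in range(num_windows):
        offset = rng.uniform(-5, 15)  # hypothetical time-of-day effect, in ms
        samples = [40 + offset + rng.expovariate(1 / 10) for _ in range(samples_per_window)]
        medians.append(median(samples))
    return medians


meds = window_medians(num_windows=24, samples_per_window=50, seed=42)
print(f"first window: {meds[0]:.1f} ms")
print(f"best of 24:   {min(meds):.1f} ms")
print(f"worst of 24:  {max(meds):.1f} ms")
```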

The blog post states that the test code "executed a function that simply returns the current time" but then goes on to show a code sample that returns a copy of the headers from the inbound request. One of these must be wrong. It's impossible to objectively evaluate or reproduce a result when the test methodology is not clearly explained.

Solely evaluating time-to-first-byte (TTFB) using tests that involve almost no computational load, no significantly sized payloads, and no platform APIs does not measure the performance of Compute@Edge in any meaningful way.

This is bad science. So why are we drawing attention to it, and since we are choosing to do so, what is the performance of Compute@Edge really like?

Surprise! Compute@Edge is faster than Cloudflare Workers

To be clear, we can't actually say that for sure, because the TTFB metric doesn't really tell you which platform is “faster” (more on that in a minute). But we can say that in a less biased version of the same tests, our network and Compute@Edge score better than Cloudflare's network and Cloudflare Workers, even on TTFB.

It's difficult to measure the relative performance of edge networks. We've moved beyond the CDN era; today, you can’t use a single variable to judge the value of an edge network. Cloudflare in particular should know this because they have an admirable set of capabilities at the edge — none of which were used in this exercise.

The distracting nature of this kind of “benchmark” makes us wary of participating in a comparison on anything resembling similar terms, and it's impossible for us to define our own terms for a comparison because Cloudflare’s terms of use prohibit benchmarking of their services.

So instead, we ran the same tests (echoing headers and measuring TTFB via Catchpoint) against our own platform, but with a few key differences:

We used 673 Catchpoint nodes instead of 50, over a much longer period of time (six days instead of about an hour and a half).

We used a Wasm binary compiled from Rust rather than JavaScript.

We used a paid Fastly account instead of a free trial account.

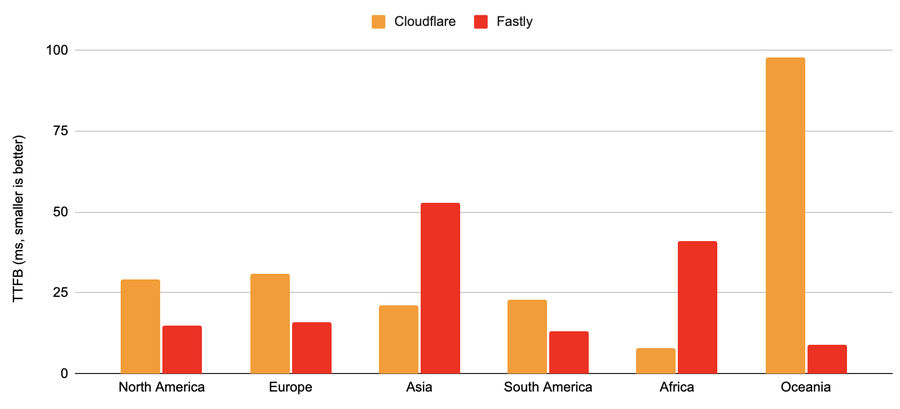

You can view the raw results yourself, but the bottom line is that, on these fairer tests, our network and Compute@Edge are faster than Cloudflare's network and Workers in four out of six regions, even on this flawed metric:

Fastly data: median TTFB, 673 Catchpoint nodes, 378,000 requests, performed from 2021-11-24 00:00 to 2021-11-30 00:00 (6 days) [results]

Cloudflare data: median TTFB, 50 Catchpoint nodes, unknown number of requests, performed from 2021-11-08 20:30 to 2021-11-08 22:00 (1.5 hrs) [results]
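For a sense of sampling density, the Fastly figures above work out as follows. This assumes requests were spread evenly across nodes and over time, which is a simplification; the actual Catchpoint test schedule isn't described here. No equivalent calculation is possible for the Cloudflare run, because its request count is unknown.

```python
# Fastly test figures as stated above: 378,000 requests, 673 nodes, 6 days.
REQUESTS = 378_000
NODES = 673
DAYS = 6

per_node = REQUESTS / NODES                   # average requests per node
per_node_per_day = per_node / DAYS            # average requests per node per day
minutes_between = 24 * 60 / per_node_per_day  # average gap between one node's requests
per_hour = REQUESTS / (DAYS * 24)             # requests per hour across all nodes

print(f"{per_node:.1f} requests per node")                      # 561.7
print(f"{per_node_per_day:.1f} requests per node per day")      # 93.6
print(f"one request every {minutes_between:.1f} min per node")  # 15.4
print(f"{per_hour:,.0f} requests per hour overall")             # 2,625
```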

On the basis of Cloudflare's preferred TTFB, our network running Compute@Edge is nearly twice as fast as Cloudflare Workers in North America and Europe, and 10 times faster in Oceania. Remember, their terms of service prohibit benchmarking, which prevents us from testing them directly and attempting to replicate that outlying Oceania number.

Compute@Edge doesn't have a native language, since we run WebAssembly binaries directly on our edge servers, but Rust currently offers one of the best combinations of developer experience and compiled-code performance. You absolutely can run anything on Compute@Edge if it compiles to WebAssembly, and people do.

Yeah, but… JavaScript

We know support for JavaScript is important to many customers, but we're not yet satisfied with the performance of Compute@Edge packages compiled from JavaScript. That's why it's in beta. When a product is ready for production, we remove the beta designation.

In creating our edge computing platform, our entire approach has been to chart a course that’s quite different from most of the industry. This means our performance also evolves differently — because the problems we start out with are different.

Measuring the right numbers

Let’s dive into that TTFB metric in a bit more detail and explore why it's not useful in this context.

Cloudflare published the results of tests that don’t measure compute performance in any meaningful way. Instead of measuring a single variable, we should use a benchmark that evaluates the performance of edge compute in core use cases that are important to customers. Network RTT is part of it, but so are variables such as:

Startup time, to hand off a request to customer code

Compute performance ("thinking time") for workloads of various sizes

Cache performance, both for hot objects and long-tail content

RTT to origin servers, for use cases involving origin requests
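As a rough illustration of the second item, measuring think time in isolation, here is a minimal Python harness. The handler (a SHA-256 hash of the request body) and the payload sizes are hypothetical stand-ins for customer code and workloads. Timing the function in-process keeps network RTT out of the number entirely. It does not cover startup time, cache behavior, or origin RTT, which would need instrumentation on the platform itself.

```python
import hashlib
import time
from statistics import median


def handler(payload: bytes) -> bytes:
    """Hypothetical stand-in for customer code: hash the request body."""
    return hashlib.sha256(payload).digest()


def think_time_ms(fn, payload, runs=50):
    """Median wall-clock time (ms) of fn(payload), measured in-process (no network)."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(payload)
        timings.append((time.perf_counter() - start) * 1000)
    return median(timings)


def run_suite(sizes=(1_024, 64 * 1_024, 1_024 * 1_024)):
    """Return {payload_size_bytes: median_think_time_ms} for several workload sizes."""
    return {size: think_time_ms(handler, b"x" * size) for size in sizes}


if __name__ == "__main__":
    for size, ms in run_suite().items():
        print(f"{size:>9} bytes: {ms:.3f} ms")
```

Reporting a median per workload size, rather than a single number, makes it visible how think time scales as the work grows.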

Cloudflare's tests don't even measure network round-trip time (RTT) well. Time to first byte covers both the network RTT and the server think time, and a TTFB number on its own gives no way to understand how much each component contributes. If you wanted to better understand the performance, you'd measure them separately.
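Separating the two components is straightforward when you have a network RTT measurement alongside each TTFB sample. This Python sketch uses a simplified model, TTFB = RTT + think time, which ignores connection setup (TCP and TLS handshakes) and assumes RTT was measured separately; the sample values are hypothetical. Subtracting per request before taking the median avoids assuming that the median of a difference equals the difference of the medians, which isn't true in general.

```python
from statistics import median


def think_times(ttfb_ms, rtt_ms):
    """Per-request server think time under the simplified model TTFB = RTT + think time."""
    if len(ttfb_ms) != len(rtt_ms):
        raise ValueError("need exactly one RTT sample per TTFB sample")
    return [t - r for t, r in zip(ttfb_ms, rtt_ms)]


# Hypothetical paired samples (ms) from one test node; not real measurements.
ttfb = [48.0, 52.0, 50.0, 61.0, 49.0]
rtt = [45.0, 47.0, 46.0, 44.0, 46.0]

think = think_times(ttfb, rtt)
print(f"median TTFB:       {median(ttfb):.1f} ms")   # 50.0
print(f"median RTT:        {median(rtt):.1f} ms")    # 46.0
print(f"median think time: {median(think):.1f} ms")  # 4.0
```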

For example, consider the graphs below, which show median network RTT and median TTFB in Asia for a Fastly service. A cache hit served from our VCL platform (left graph) is so fast that the network RTT (blue) is essentially the same as the TTFB (green). With Compute@Edge (right graph), TTFB is visibly higher than RTT, reflecting the extra cost of running arbitrary code, but RTT still accounts for the vast majority of TTFB in regions with generally higher connection latency, such as Asia in these examples.

RTT varies with geography, and that variance shows up dramatically in the results of the main test we showed earlier. As a result, Cloudflare's tests don't give a clear picture of the relative performance of our edge compute platforms: the “server think time.”
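To make the geographic effect concrete, this Python sketch groups hypothetical samples by region and reports what fraction of median TTFB is median RTT. The region names echo the article, but all numbers are invented. With a similar think time in both regions (about 7 to 8 ms here), RTT makes up a much larger share of TTFB where connection latency is high, which is why a TTFB-only comparison says little about the think time of the platforms being compared.

```python
from collections import defaultdict
from statistics import median

# Hypothetical (region, rtt_ms, ttfb_ms) samples; illustrative only.
SAMPLES = [
    ("Asia", 120.0, 128.0),
    ("Asia", 110.0, 119.0),
    ("Asia", 130.0, 137.0),
    ("Europe", 20.0, 27.0),
    ("Europe", 18.0, 26.0),
    ("Europe", 22.0, 28.0),
]


def rtt_share_by_region(samples):
    """Map region -> (median RTT, median TTFB, median RTT / median TTFB)."""
    by_region = defaultdict(lambda: ([], []))
    for region, rtt, ttfb in samples:
        rtts, ttfbs = by_region[region]
        rtts.append(rtt)
        ttfbs.append(ttfb)
    result = {}
    for region, (rtts, ttfbs) in by_region.items():
        m_rtt, m_ttfb = median(rtts), median(ttfbs)
        result[region] = (m_rtt, m_ttfb, m_rtt / m_ttfb)
    return result


for region, (m_rtt, m_ttfb, share) in rtt_share_by_region(SAMPLES).items():
    print(f"{region:<7} RTT {m_rtt:.0f} ms of TTFB {m_ttfb:.0f} ms ({share:.0%})")
```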

Building better outcomes for customers

We are working on a benchmark suite that will assess performance in a more realistic environment, and we’ll publish the code for that benchmark suite and have the results measured and published by an independent third party, because it's supremely tiresome to have nerd-sniping competitions that distract from improving the internet for everyone.

In the meantime, it is absolutely not true that Cloudflare Workers is 196% faster than Compute@Edge; in fact, it's not faster at all. But the important variables for our customers are the ones that are critical for their business. If you want to know whether we’re fast enough for your particular needs on the metrics you care about, we encourage you to try us and measure for yourself.
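For reference, "X% faster" and "N times as fast" are two ways of stating the same speed ratio: N = 1 + X/100. This minimal Python sketch of the conversion treats speed as the inverse of latency (an assumption about how such claims are usually computed) and shows that a 196% claim corresponds to the "roughly three times as fast" figure quoted at the start of this post.

```python
def speed_ratio(percent_faster):
    """'X% faster' expressed as 'N times as fast': N = 1 + X / 100."""
    return 1 + percent_faster / 100


def percent_faster(slower_ms, faster_ms):
    """Percent by which the lower-latency result is faster, treating speed as 1 / latency."""
    return (slower_ms / faster_ms - 1) * 100


print(f"196% faster = {speed_ratio(196):.2f}x as fast")             # 2.96x
print(f"100 ms vs 50 ms = {percent_faster(100, 50):.0f}% faster")  # 100%
```

By the same arithmetic, "twice as fast" is 100% faster, not 200% faster.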

This article contains “forward-looking” statements that are based on Fastly’s beliefs and assumptions and on information currently available to Fastly on the date of this article. Forward-looking statements may involve known and unknown risks, uncertainties, and other factors that may cause our actual results, performance, or achievements to be materially different from those expressed or implied by the forward-looking statements. These statements include, but are not limited to, statements regarding future product offerings. Except as required by law, Fastly assumes no obligation to update these forward-looking statements publicly, or to update the reasons actual results could differ materially from those anticipated in the forward-looking statements, even if new information becomes available in the future. Important factors that could cause Fastly’s actual results to differ materially are detailed from time to time in the reports Fastly files with the Securities and Exchange Commission (SEC), including in our Annual Report on Form 10-K for the fiscal year ended December 31, 2020, and our Quarterly Reports on Form 10-Q. Copies of reports filed with the SEC are posted on Fastly’s website and are available from Fastly without charge.