Testing your Fastly config in CI

Principal Developer Advocate, Fastly

Automated testing is a critical part of all modern web applications. Our customers need the ability to test VCL configurations before they push them to a live service. It has long been possible, using our API, to set up a service and push VCL via automation tools, then run a test request and examine the results. However, this has suffered from a few inconveniences:

Setting up a service from scratch requires a reasonably significant number of API calls.

While it only takes a few seconds to set up a service and sync configuration, there is no feedback mechanism to tell you that it has deployed, which is inconvenient when you need a rapid deploy-then-test turnaround.

You can’t test any internal mechanisms of Fastly. You can only assert over the externally visible effects of your config. This makes it essentially a “black box” test.

If you don’t tear down the test service afterwards, you may end up leaving open security vulnerabilities in your site exposed to the internet.

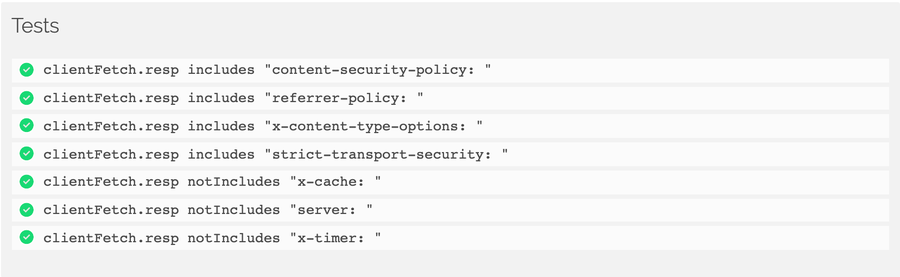

Recently, I wrote about how we've added unit testing to our Fastly Fiddle tool. This provides a succinct test syntax and a wealth of instrumentation data that you can assert against:

Of course, ideally, it would be great to be able to use this capability to perform CI testing, using familiar tools like Mocha.

$ npm test

> mocha utils/ci-example.js

Service: www.example.com

Request normalisation

GET /?bb=2&ee=e2&ee=e1&&&dd=4&aa=1&cc=3 HTTP/2

✓ clientFetch.status is 200

✓ events.where(fnName=recv).count() is 1

✓ events.where(fnName=recv)[0].url is "/?aa=1&bb=2&cc=3&dd=4&ee=e1&ee=e2"

Robots.txt synthetic

GET /robots.txt HTTP/2

✓ clientFetch.status is 200

✓ clientFetch.resp includes "content-length: 30"

✓ clientFetch.bodyPreview includes "BadBot"

✓ originFetches.count() is 0

GET /ROBOTS.txt HTTP/2

✓ clientFetch.status is 404

10 passing (37s)

Fastly Fiddle exposes an API that represents the results of a request travelling through Fastly as a detailed data structure in JSON. We could receive that and then run assertions on it using an assertion library such as Chai. But we wanted to add support for tests as more of a first-class feature of Fiddle.

With the introduction of built-in support for testing, the succinct but expressive test syntax we covered last time can be used to bake test cases directly into the fiddle itself. With the Fiddle HTTP API and an understanding of the data structure we use to represent fiddles, we can drive this test engine remotely using automation. To enable this, I've created an abstraction layer for Mocha. First, let's look at how to use it, then we'll dive under the hood.

Before we start, please note that Fastly Fiddle is an experimental service offered as part of our Labs program. Please don’t make this service a critical dependency for your code deploys. Please also keep in mind that individual fiddles are designed to be easily accessed without authentication. Your code could be exposed to anyone in possession of the URL of your fiddle.

Running your own CI tests

First, get the code from GitHub, and install dependencies:

$ git clone git@github.com:fastly/demo-fiddle-ci.git fastly-ci-testing

$ cd fastly-ci-testing

$ npm install

That last step assumes that you have NodeJS (10+) installed on your system. If you like, you can now run npm test to run the example tests and see output similar to that at the top of this post (the tests take around a minute).

All working? Now, the exciting bit: define your own service configuration and write your own tests. Open up src/test.js and take a look at the top part:

testService('Service: example.com', {

spec: {

origins: ["https://httpbin.org"],

vcl: {

recv: `set req.url = querystring.sort(req.url);\nif (req.url.path == "/robots.txt") {\nerror 601;\n}`,

error: `if (obj.status == 601) {\nset obj.status = 200;\nset obj.response = "OK";synthetic "User-agent: BadBot" LF "Disallow: /";\nreturn(deliver);\n}`

}

},

scenarios: [

...

]

});

This first part, inside the spec property, defines the service configuration that you want to push to the edge for testing. At this point it's handy if you have a fiddle set up with the service config that you want to test. If so, click the menu in the fiddle UI and choose 'EMBED' to see a JSON-encoded version of the fiddle. Copy the vcl and origins properties into the spec section of your tests file. Otherwise, you'll find the data model later on in this post.

Now we can set up some test scenarios. Each scenario will test one feature of our configuration. For example, your service might be doing some geolocation, image optimisation, generating synthetic responses, A/B testing, and so on; but you will need to send different patterns of requests to test each feature.

Let's look at one of the example scenarios:

testService('Service: example.com', {

spec: { ... },

scenarios: [

{

name: "Caching",

requests: [

{

path: "/html",

tests: [

'originFetches.count() is 1',

'events.where(fnName=fetch)[0].ttl isAtLeast 3600',

'clientFetch.status is 200'

]

}, {

path: "/html",

tests: [

'originFetches.count() is 0',

'events.where(fnName=hit).count() is 1',

'clientFetch.status is 200'

]

}

]

},

...

]

});

This scenario is called "Caching", and tests that if the same request is made twice, Fastly serves the second one from cache. Again, if you have your desired configuration in a fiddle already, you can export the request data via the 'EMBED' option in the fiddle menu (you just need the requests property here). Otherwise, you can find the full data model later in this post, minus the test syntax, which we talked about in our previous post.
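As a further illustration, the "Robots.txt synthetic" section of the sample output at the top of this post implies a scenario roughly like the one below. This is a reconstruction from that output, not a copy of the file in the repository, so the real example may differ. The checkScenario helper is hypothetical and only performs a basic shape check before you spend a minute waiting on a remote run.

```javascript
// Scenario reconstructed from the sample output shown earlier (an assumption,
// not the repository's actual src/test.js content).
const robotsScenario = {
  name: 'Robots.txt synthetic',
  requests: [
    {
      path: '/robots.txt',
      tests: [
        'clientFetch.status is 200',
        'clientFetch.resp includes "content-length: 30"',
        'clientFetch.bodyPreview includes "BadBot"',
        'originFetches.count() is 0'
      ]
    },
    {
      path: '/ROBOTS.txt',
      tests: ['clientFetch.status is 404']
    }
  ]
};

// Hypothetical helper: returns a list of problems found in a scenario object.
function checkScenario(scenario) {
  const problems = [];
  if (typeof scenario.name !== 'string' || scenario.name === '') {
    problems.push('scenario needs a name');
  }
  (scenario.requests || []).forEach((req, i) => {
    // The data model requires paths to start with "/"
    if (typeof req.path !== 'string' || !req.path.startsWith('/')) {
      problems.push(`request ${i}: path must start with /`);
    }
    if (!Array.isArray(req.tests) || req.tests.length === 0) {
      problems.push(`request ${i}: no tests defined`);
    }
  });
  return problems;
}

console.log(checkScenario(robotsScenario));
```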

Once you've customised the src/test.js file to your liking, you can run it with npm test. This command triggers the test script from the package.json file, and is equivalent to running this in the root of the project:

$ ./node_modules/.bin/mocha src/test.js --exit

Notice that we're running the script using the mocha executable, not just node. This is important, so it's nice that we can use npm test as a shortcut. Also, some CI tools will be smart about detecting that you have a test script declared in package.json and will use that to kick off your testing.

Hopefully, Mocha is now running your tests! If so, congrats! Time to set this up to run on your CI tool of choice, and perhaps create a pipeline to deploy your new config to your Fastly account if it passes.

And now, here's how it works...

Fastly Fiddle client

I made a very small and incomplete client for the Fastly Fiddle tool in NodeJS which is included in the project as src/fiddle-client.js. This exposes methods for publishing, cloning, and executing fiddles:

get(fiddleID): Return a fiddle

publish(fiddleData): Create or update a fiddle, return normalised fiddle data

clone(fiddleID): Clone a fiddle to a new ID, return normalised fiddle data

execute(fiddleOrID, options): Execute a fiddle, and return result data.

To perform operations on fiddles, we therefore need to understand the fiddle data model and the result data model.

Fiddle data model

Fiddle-shaped objects look like this (cosmetic properties that are only useful in the UI are omitted):

| vcl | Obj | Map of VCL subroutine names to source code that should be compiled for that subroutine. |

| .init | Str | VCL code for the initialisation space, outside of any VCL subroutine. Use this space to define custom subroutines, dictionaries, ACLs and directors. |

| .recv | Str | VCL code for the recv subroutine |

| .hash | Str | VCL code for the hash subroutine |

| .hit | Str | VCL code for the hit subroutine |

| .miss | Str | VCL code for the miss subroutine |

| .pass | Str | VCL code for the pass subroutine |

| .fetch | Str | VCL code for the fetch subroutine |

| .deliver | Str | VCL code for the deliver subroutine |

| .error | Str | VCL code for the error subroutine |

| .log | Str | VCL code for the log subroutine |

| origins | Array | List of origins to expose to VCL |

| [n] | Str | Origin as a hostname and scheme, eg "https://example.com". Exposed to VCL as F_origin_{arrayIndex}. |

| requests | Array | List of requests to perform when the fiddle is executed |

| [n] | Obj | Object representing one request |

| .method | Str | HTTP method to use for the request |

| .path | Str | URL path to request (must start with /) |

| .headers | Str | Custom headers to send with the request |

| .body | Str | Request body payload (if setting this, a Content-Length header will be added to the request automatically) |

| .enable_cluster | Bool | Whether to enable intra-POP clustering. When enabled, after a cache key is computed, execution is transferred to the storage node that is the canonical storage location for that key. If disabled, processing will happen entirely on the random edge node that initially received the request, and therefore consecutive requests for the same object may not result in a cache hit, since Fastly POPs comprise many separate servers. |

| .enable_shield | Bool | When enabled, if the POP that handles the request is not the designated 'shield' location, the shield location is used in place of the backend, thereby forcing a cache miss to transit two Fastly POP locations before being sent to origin. |

| .enable_waf | Bool | Whether to filter the request through Fastly's Web Application Firewall. If enabled, an additional 'waf' event will be included in the result data, and the request may trigger an error if WAF rules are matched. The WAF used by Fiddle is configured with a minimal set of rules and is not a suitable simulation of your own services' WAF configuration. |

| .conn_type | Str | One of 'http' (insecure), 'h1', 'h2', or 'h3'. |

| .source_ip | Str | The IP from which the request should appear to originate, for edge cloud features such as geolocation. |

| .follow_redirects | Bool | Whether to insert an automatic additional request if the response is a redirect and contains a valid Location header |

| .tests | Str / Array | Newline-delimited list of test expressions, following the test syntax that we introduced in my previous post. Note that although this is a text blob field you may send test expressions as an array if you wish. |
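To ground the table above, here is a small fiddle-shaped object that uses only properties from the data model, with values borrowed from the examples earlier in this post. Because the tests field may be either a newline-delimited string or an array, the sketch also includes a hypothetical helper, testsToArray, that normalises both forms. It is an illustration and not part of the Fiddle client.

```javascript
// A fiddle-shaped object built from the data model above (illustrative values)
const fiddle = {
  vcl: {
    recv: 'set req.url = querystring.sort(req.url);'
  },
  origins: ['https://httpbin.org'],
  requests: [
    {
      method: 'GET',
      path: '/html',
      enable_cluster: true,
      // Newline-delimited form of the tests field
      tests: 'clientFetch.status is 200\noriginFetches.count() is 1'
    }
  ]
};

// Hypothetical helper: accept either form of the tests field, return an array.
function testsToArray(tests) {
  if (Array.isArray(tests)) return tests;
  if (typeof tests !== 'string') return [];
  return tests
    .split('\n')
    .map(t => t.trim())
    .filter(t => t.length > 0);
}

console.log(testsToArray(fiddle.requests[0].tests));
```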

So now we can create, update and execute fiddles. All methods except execute() promise a fiddle object (ie fitting the above model). The execute method promises a result data object.

Result data model

The data promised by the execute() method looks like this. Note the clientFetches[...].tests property, which is the one we're most interested in here:

| id | Str | Identifier for the execution session |

| requestCount | Num | Number of client-side requests made to Fastly. Will be equal to the number of items in the clientFetches object. |

| clientFetches | Obj | Map of the requests made from the client app to the Fastly service. Keys are randomly generated one-time identifiers which correspond to the reqID property of objects within the events list. |

| .${reqID} | Obj | A single client request. |

| .req | Str | Request headers, including request statement |

| .resp | Str | Response headers, including response status line |

| .respType | Str | Parsed Content-type response header value (mime type only) |

| .isText | Bool | Whether the response body can be treated as text |

| .isImage | Bool | Whether the response body can be treated as an image |

| .status | Num | HTTP response status |

| .bodyPreview | Str | UTF-8 text preview of the body (truncated at 1K) |

| .bodyBytesReceived | Num | Amount of data received |

| .bodyChunkCount | Num | Number of chunks received |

| .complete | Bool | Whether the response is complete |

| .trailers | Str | HTTP response trailers |

| .tests | Array | List of tests that were executed for this client request |

| [idx] | Obj | Each test result is an object |

| .testExpr | Str | The test expression, based on the test syntax we learned earlier |

| .pass | Bool | Whether the test passed |

| .expected | Any | The value that was expected (a string representation is also included in the testExpr) |

| .actual | Any | The value that was observed |

| .detail | Str | Description of the assertion failure |

| .tags | Array | Flags applied to the test by the test parser, which currently include 'async' and 'slow-async'. |

| .testsPassed | Bool | Convenience shortcut that records whether all tests defined in the tests property for this request passed. |

| originFetches | Obj | Map of the requests made from the Fastly service to origins. Keys are randomly generated one-time identifiers which correspond to the vclFlowKey property of objects within the events list. |

| .${vclFlowKey} | Obj | A single origin request |

| .req | Str | HTTP request block, containing request method, path, HTTP version, header key/value pairs and request body |

| .resp | Str | HTTP response header, contains response status line and response headers (not the body) |

| .remoteAddr | Str | Resolved IP address of origin |

| .remotePort | Num | Port on origin server |

| .remoteHost | Str | Hostname of origin server |

| .elapsedTime | Num | Total time spent on origin fetch (ms) |

| events | Array | A chronological list of events that occurred during the processing of the execution session. |

| [idx] | Obj | Each array element is one VCL event |

| .vclFlowKey | Str | ID of the VCL flow in which the event occurred. A VCL flow is the innermost correlation identifier. Every one-way trip through the state machine is one VCL flow, therefore for each vclFlowKey there will be a maximum of one event per fnName. VCL flows may trigger an origin fetch, so if there was an origin fetch associated with this VCL flow, it will be recorded in the originFetches collection with this identifier. |

| .reqID | Str | ID of the client request that triggered this event. A client request may trigger multiple VCL flows due to restarts, ESI, segmented caching or shielding. The details of the client request will be recorded in the clientFetches collection with this identifier. |

| .fnName | Str | The type of the event. May be 'recv', 'hash', 'hit', 'miss', 'pass', 'waf', 'fetch', 'deliver', 'error', or 'log' |

| .datacenter | Str | Three letter code identifying the Fastly data center location in which this event occurred, eg. 'LCY', 'JFK', 'SYD' |

| .nodeID | Str | Numeric identifier of the individual server on which this event occurred. |

| .<various> | | Any property reported in the fiddle UI for an event will be included in the result data for the event. |

| insights | Array | A list of warnings and best practice violations that were detected and reported during execution. |
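As a sketch of how this structure can be consumed, the following code walks a result object to list failed tests and to recover the sequence of VCL events for one client request via its reqID. The sample result is hand-written to match the table above, its values (including the detail message) are invented, and both function names are hypothetical.

```javascript
// Hand-written sample in the shape of the result data model (values invented)
const result = {
  clientFetches: {
    abc: {
      req: 'GET /html HTTP/2\nhost: example.com',
      status: 200,
      tests: [
        { testExpr: 'clientFetch.status is 200', pass: true, expected: 200, actual: 200 },
        { testExpr: 'originFetches.count() is 0', pass: false, expected: 0, actual: 1,
          detail: 'Expected 0 but got 1' }
      ],
      testsPassed: false
    }
  },
  originFetches: {
    flow1: { req: 'GET /html HTTP/1.1', remoteHost: 'httpbin.org', elapsedTime: 120 }
  },
  events: [
    { vclFlowKey: 'flow1', reqID: 'abc', fnName: 'recv' },
    { vclFlowKey: 'flow1', reqID: 'abc', fnName: 'miss' },
    { vclFlowKey: 'flow1', reqID: 'abc', fnName: 'fetch' },
    { vclFlowKey: 'flow1', reqID: 'abc', fnName: 'deliver' }
  ]
};

// List every failed test, labelled with the first line of its request
function collectFailures(res) {
  const failures = [];
  for (const [reqID, fetch] of Object.entries(res.clientFetches)) {
    const requestLine = fetch.req.split('\n')[0];
    for (const t of fetch.tests || []) {
      if (!t.pass) {
        failures.push({ reqID, requestLine, testExpr: t.testExpr, detail: t.detail });
      }
    }
  }
  return failures;
}

// Names of the VCL events triggered by one client request, in order
function eventsForRequest(res, reqID) {
  return res.events.filter(e => e.reqID === reqID).map(e => e.fnName);
}

console.log(collectFailures(result));
console.log(eventsForRequest(result, 'abc'));
```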

How might this be glued into a test harness? We'll use Mocha, one of the world's most popular test runners.

Mocha test case generator

Mocha uses a fairly conventional mechanism for writing test spec files: A CLI tool is used to invoke a script file, and the CLI will automatically create an environment in which certain functions exist: describe, for creating test suites, and it for specifying individual tests, along with some lifecycle hooks like before, beforeEach, after, and afterEach:

describe('My application', function () {

let db;

before(async () => {

db = setupDatabase(); // Imaginary function

});

it("should load a widget", async function() {

const widget = db.get('test-widget');

expect(widget).to.have.key('id');

});

});

The problem with this is that our test cases need to be expressed as data, not code, so can't be declared in the way shown above. Fortunately, Mocha also allows tests to be created via a conventional constructor, if a Mocha global is imported explicitly:

const Mocha = require('mocha');

const test = new Mocha.Test('should load a widget', function () { ... });

Inside a describe() callback, this is an instance of the Mocha Suite object (which is also why it's important not to use an arrow function for the callback). Provided that the callback, when initially executed, synchronously creates at least one test, Mocha will run our before() code. In that callback, we can then cancel the hard-coded test and create some dynamic ones:

const Mocha = require('mocha');

describe('My application', function () {

it ("has one hard-coded test", function () { return true });

before(async () => {

this.tests = []; // Delete the hard-coded test

this.addTest(new Mocha.Test('satisfies this dynamic test', function () { ... }));

});

});

So, now we need to populate the dynamic tests from the test spec data. The src/test.js file imports a function that it expects to have a signature of (name, data), so to begin scaffolding src/fiddle-mocha.js, that's what we export:

module.exports = function (name, data) {

// Create a testing scope for each Fastly service under test

describe(name, function () {

this.timeout(60000);

let fiddle;

// Push the VCL for this service and get a fiddle ID

// This will sync the VCL to the edge network, which takes 10-20 seconds

before(async () => {

fiddle = await FiddleClient.publish(data.spec);

await FiddleClient.execute(fiddle); // Make sure the VCL is pushed to the edge

});

...

})

};

Each time the exported function is called, we create a test suite representing the service configuration under test. By default, Mocha has a timeout of 2 seconds, which isn't enough to perform remote tests, so we increase that.

The service configuration can be published to a fiddle in this service-level suite's before() callback. It's also worth triggering an execute because publish will only verify that the config is valid, not that it has yet been successfully deployed to the whole network. execute() will do that.

Now we can create sub-suites for each of the test scenarios:

module.exports = function (name, data) {

describe(name, function () {

...

for (const s of data.scenarios) {

describe(s.name, function() {

it('has some tests', () => true); // Sacrificial test

before(async () => {

this.tests = []; // Remove the sacrificial test from this scenario suite

const result = await FiddleClient.execute({

...fiddle,

requests: s.requests

}, { waitFor: 'tests' });

for (const req of Object.values(result.clientFetches)) {

if (!req.tests) throw new Error('No test results provided');

const suite = new Mocha.Suite(req.req.split('\n')[0]);

for (const t of req.tests) {

suite.addTest(new Mocha.Test(t.testExpr, function() {

if (!t.pass) {

const e = new AssertionError(t.detail, {

actual: t.actual, expected: t.expected, showDiff: true

});

throw e;

}

}));

}

this.addSuite(suite);

}

});

});

}

});

};

Let's step through this:

For each of the scenarios, we call describe(), passing the scenario name and setting up a callback, which Mocha will invoke immediately.

Define a sacrificial test to ensure that the before() callback is run.

In the before() callback, eliminate the sacrificial test, and execute the fiddle, patching it with the requests that are defined for this scenario. waitFor: 'tests' tells the API client to only resolve the promise when the test results are available.

Iterate over each of the client requests that make up the scenario, and create a test suite for the request. We use the first line of the request header (eg. "GET / HTTP/2") as the name of the suite.

For each of the tests that were run on that request, create a new Mocha Test and add it to the test suite. Pass the relevant properties of the test result into the AssertionError if it was a fail. AssertionError is an extension of the native JavaScript error object and defines additional properties for expected, actual and showDiff. Mocha knows about these properties so can provide a better user experience in the test report.

Add the request suite (suite) to the scenario suite (this).

The scenario suite has already been registered with the Mocha runner via a describe call, inside of the service-level suite which is also registered (as the root suite) via a describe call.
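The step that turns a failed test result into an error can be sketched in isolation. The example below swaps in Node's built-in assert.AssertionError, which takes a single options object, rather than the two-argument AssertionError constructor used in the code above. Node's class has no showDiff option, so the sketch sets that as a plain property. The function name toAssertionError and the sample values are hypothetical.

```javascript
const { AssertionError } = require('assert');

// Hypothetical helper: convert one Fiddle test result into an error,
// or return null if the test passed.
function toAssertionError(t) {
  if (t.pass) return null;
  const err = new AssertionError({
    message: t.detail,
    actual: t.actual,
    expected: t.expected
  });
  err.showDiff = true; // plain property; not part of Node's constructor options
  return err;
}

// Sample test results in the shape of clientFetches[...].tests (values invented)
const passing = { testExpr: 'clientFetch.status is 200', pass: true, expected: 200, actual: 200 };
const failing = {
  testExpr: 'clientFetch.status is 200',
  pass: false,
  expected: 200,
  actual: 404,
  detail: 'Expected 200 but got 404'
};

const err = toAssertionError(failing);
console.log(err.message, err.expected, err.actual);
```

Inside a Mocha test function, throwing the returned error (when it is not null) marks the test as failed.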

Mocha will end up discovering one or more root suites (via calls in test.js to testService), those will each contain one or more scenario suites, and each of those will contain one or more request suites. The request suites contain the tests defined on the request.
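The resulting nesting can be pictured without Mocha at all. The sketch below builds the same three-level hierarchy (service, scenario, request) as plain objects from result data and renders it as indented text. It is a model of the structure only, not how Mocha stores suites internally. The function names and the sample data are hypothetical.

```javascript
// Build a plain-object tree: service -> scenarios -> requests -> tests
function buildSuiteTree(serviceName, scenarioResults) {
  return {
    title: serviceName,
    suites: scenarioResults.map(({ name, result }) => ({
      title: name,
      suites: Object.values(result.clientFetches).map(fetch => ({
        // First line of the request header names the request suite
        title: fetch.req.split('\n')[0],
        tests: (fetch.tests || []).map(t => ({ title: t.testExpr, pass: t.pass }))
      }))
    }))
  };
}

// Render the tree as indented text, one level per two spaces
function render(tree) {
  const lines = [tree.title];
  for (const scenario of tree.suites) {
    lines.push('  ' + scenario.title);
    for (const request of scenario.suites) {
      lines.push('    ' + request.title);
      for (const test of request.tests) {
        lines.push('      ' + (test.pass ? 'pass' : 'FAIL') + ' ' + test.title);
      }
    }
  }
  return lines.join('\n');
}

// Invented sample: one scenario containing one request with two tests
const tree = buildSuiteTree('Service: example.com', [
  {
    name: 'Caching',
    result: {
      clientFetches: {
        r1: {
          req: 'GET /html HTTP/2\nhost: example.com',
          tests: [
            { testExpr: 'originFetches.count() is 1', pass: true },
            { testExpr: 'clientFetch.status is 200', pass: true }
          ]
        }
      }
    }
  }
]);

console.log(render(tree));
```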

Wrapping up

Testing in CI is a great way of avoiding surprises when you activate a new version of your Fastly service, and Fiddle is a convenient tool to help you do it. If you already have a CI process that tests pull requests before you merge them, consider integrating some Fastly service tests as well. I’d love to know how it goes for you, so feel free to post your experience in the comments, or drop us an email.

That said, please don’t make this service a critical dependency for your code deploys. This is an experimental service offered as part of our Fastly Labs program, not subject to any SLA, and we’re providing it to gather feedback.