Many of the world's websites are static, and Fastly’s content delivery network gets those pages from origin to visitors quickly. But what if we took the origin out of the equation?

Are you using a static site generator to build your website? Maybe you’re using a popular framework that outputs static files, like create-react-app, Gatsby, Docusaurus, or Vite. Or do you just have some static files that you need to serve? With compute-js-static-publish, you can now deploy and serve everything from Fastly's blazing-fast Compute@Edge platform.

The static web is still a thing. Many websites are interactive, of course, but much of the web (blogs, reference pages, and product detail pages, to name a few) is inherently static. The content of these pages is edited in files or in a content management system, and the website serves the most recent version of that information.

Some websites use static site generators such as Gatsby: tools that combine source content written in Markdown or HTML with templates to build entire websites. Fastly uses static site generators too. For example, our Developer Hub, which contains all of our tutorials and reference materials, is generated with Gatsby, and our Expressly website is generated with Docusaurus. Whichever generator you use, its output is generally a bundle of files.

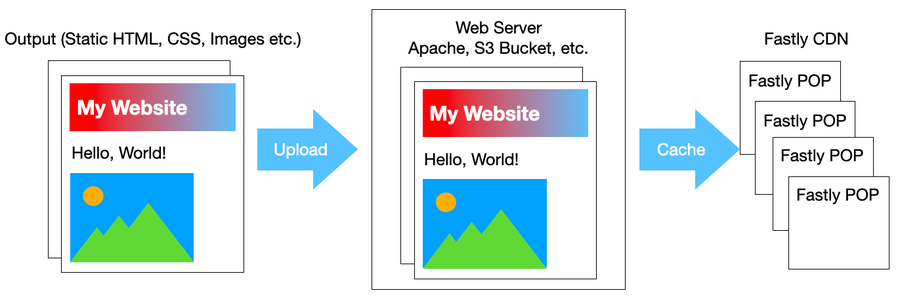

These files are usually uploaded to a web server to be served statically. These days, it's also a best practice to put a Content Delivery Network (CDN), like Fastly's, in front of that server so that your website is cached all over the world.

But let’s take a step back. At this point, the only thing the web server is doing is storing a complete copy of the website for the CDN to cache, and each Fastly point of presence (POP) may hold only a subset of the website.

What if we could just upload the complete website to Fastly? Every POP would then contain every file needed to serve the website, and the origin wouldn't be needed anymore!

Static Publishing to the Edge

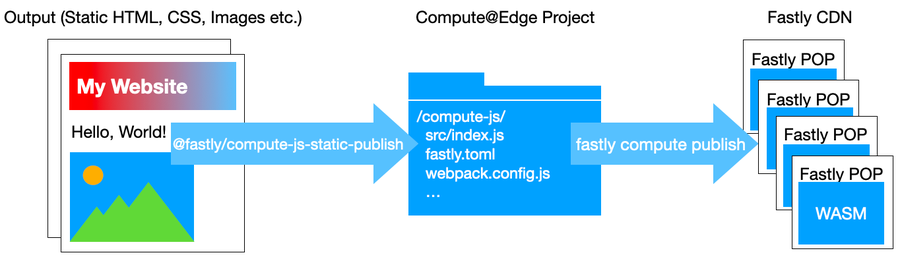

It turns out now we can, with a recent addition to our developer tools. @fastly/compute-js-static-publish is both a command-line tool and a runtime library, with the goal of allowing you to serve your static website entirely at the edge, without the need for an origin server.

Let’s say that you have the files for a website in a directory called public. Type the following command (assuming you have NodeJS 16+ installed):

```shell
npx @fastly/compute-js-static-publish --public-dir=./public
```

This will generate a Compute@Edge application in a directory called compute-js. The src/index.js file in this generated project contains the code that serves the static files.

You can now test your site in Fastly’s local development server. Assuming you have the Fastly CLI installed, type the following:

```shell
cd ./compute-js
fastly compute serve
```

It should now be possible to browse to your website by visiting http://localhost:7676/.

Finally, when you’re ready to go live, use these commands:

```shell
cd ./compute-js
fastly compute publish
```

Each time you build your Compute@Edge project (by running fastly compute serve or fastly compute publish), compute-js-static-publish will scan your public directory and regenerate the Compute@Edge program.

Where did all the static files go?

Everything in your public directory is compiled into a single Wasm binary that we can deploy to Fastly.

The secret lies in a generated file, src/statics.js. If you peek inside, you’ll see lines that look something like this (the exact contents will vary based on the files included in your project):

```javascript
import file0 from "../../public/index.html?staticText";
import file1 from "../../public/main.css?staticText";

export const assets = {
  "/index.html": { contentType: "text/html", content: file0, module: null, isStatic: false },
  "/main.css": { contentType: "text/css", content: file1, module: null, isStatic: false },
};
```

These lines are what cause the static files to end up in the built module. They rely on a Webpack feature called asset modules, which creates a module for each referenced file whose value is that file's contents. Each time the application is built, this tool scans the public directory and regenerates src/statics.js so that it lists every file in that directory. Each file’s contents end up in the assets object exported by this file, keyed by the file's path relative to the public directory.
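To illustrate the regeneration step, here is a minimal sketch of how a list of files could be turned into the text of a statics.js-style module. It is not the tool's actual generator: the `generateStaticsSource` and `contentTypeFor` functions, the content-type table, and the default `../../public` path are assumptions modeled on the excerpt above, and the sketch only covers text files.

```javascript
// Hypothetical sketch: produce the source text of a statics.js-style module.
// Not compute-js-static-publish's real generator; the names and the
// content-type table are assumptions modeled on the excerpt in this article.
const CONTENT_TYPES = {
  ".html": "text/html",
  ".css": "text/css",
  ".js": "application/javascript",
  ".json": "application/json",
  ".svg": "image/svg+xml",
  ".txt": "text/plain",
};

function contentTypeFor(path) {
  const dot = path.lastIndexOf(".");
  const ext = dot === -1 ? "" : path.slice(dot).toLowerCase();
  return CONTENT_TYPES[ext] ?? "application/octet-stream";
}

// `files` are paths relative to the public directory, e.g. "index.html".
// `publicDirFromSrc` is the public directory as seen from compute-js/src.
// Text files only: binary files would need a different import query.
function generateStaticsSource(files, publicDirFromSrc = "../../public") {
  const imports = [];
  const entries = [];
  files.forEach((file, i) => {
    const importPath = `${publicDirFromSrc}/${file}?staticText`;
    imports.push(`import file${i} from ${JSON.stringify(importPath)};`);
    entries.push(
      `  ${JSON.stringify("/" + file)}: { contentType: ${JSON.stringify(contentTypeFor(file))}, ` +
        `content: file${i}, module: null, isStatic: false },`
    );
  });
  return [...imports, "", "export const assets = {", ...entries, "};", ""].join("\n");
}
```

Calling `generateStaticsSource(["index.html", "main.css"])` yields essentially the statics.js excerpt shown earlier. Because the file list is rebuilt from the directory on every build, adding or deleting a file in the public directory is reflected automatically.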

So if we want to know the contents of the index.html file, all we need to do is get the value of assets['/index.html'].content.
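To make that lookup concrete, here is a minimal sketch of mapping a request path onto such an assets object. The `serveAsset` function is hypothetical, and its behavior is assumed rather than taken from the tool: a trailing `/` maps to `index.html`, unknown paths return a 404, and entries with `isStatic: true` (for example, files with hashed names) get a long-lived `Cache-Control` header.

```javascript
// Minimal sketch: map a request path onto an assets object shaped like the
// generated statics.js. Assumptions, not the tool's confirmed behavior:
// a trailing "/" maps to "index.html", missing keys become a 404, and
// entries marked isStatic get a long-lived Cache-Control header.
function serveAsset(assets, pathname) {
  const key = pathname.endsWith("/") ? `${pathname}index.html` : pathname;
  // Own-property check so paths like "/constructor" don't hit Object.prototype.
  if (!Object.prototype.hasOwnProperty.call(assets, key)) {
    return { status: 404, headers: { "Content-Type": "text/plain" }, body: "Not Found" };
  }
  const asset = assets[key];
  const headers = { "Content-Type": asset.contentType };
  if (asset.isStatic) {
    // Hashed filenames change whenever their content changes, so cache them for a long time.
    headers["Cache-Control"] = "public, max-age=31536000, immutable";
  }
  return { status: 200, headers, body: asset.content };
}

// Example assets object; plain strings stand in for the imported file contents.
const exampleAssets = {
  "/index.html": { contentType: "text/html", content: "<h1>Hello</h1>", module: null, isStatic: false },
  "/static/app.3f9a1c.js": { contentType: "application/javascript", content: "console.log('hi');", module: null, isStatic: true },
};
```

Inside a Compute@Edge fetch event handler, the returned object would then be turned into a standard Fetch API response, for example `new Response(result.body, { status: result.status, headers: result.headers })`.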

For binary (non-text) files, the process is slightly more complicated, but the general idea is the same. If it interests you, I’d encourage you to read about asset/inline in Webpack, and to read the code this tool generates to see how binary files are handled.
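As a rough illustration of the binary case: Webpack's asset/inline type exports a data URI, base64-encoded by default. The sketch below decodes such a URI back into bytes. Whether compute-js-static-publish stores or decodes binary files exactly this way is an assumption, and `dataUriToBytes` is a hypothetical helper.

```javascript
// Sketch: decode a base64 data URI (the default output of Webpack's
// asset/inline) into raw bytes. Whether compute-js-static-publish stores
// binary files exactly this way is an assumption; check its generated code.
function dataUriToBytes(dataUri) {
  const comma = dataUri.indexOf(",");
  if (!dataUri.startsWith("data:") || comma === -1) {
    throw new Error("Not a data URI");
  }
  const meta = dataUri.slice("data:".length, comma); // e.g. "image/png;base64"
  if (!meta.endsWith(";base64")) {
    throw new Error("This sketch only handles base64-encoded data URIs");
  }
  const binary = atob(dataUri.slice(comma + 1)); // one char per byte (0-255)
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return { contentType: meta.slice(0, -";base64".length), bytes };
}
```

The resulting `Uint8Array` can be used directly as a response body, which avoids having to treat image or font data as text.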

As a result, with @fastly/compute-js-static-publish, all of your files end up in every Fastly POP, and no origin is needed.

The one other thing to watch out for is that Compute@Edge places limits and constraints on Wasm binaries. In particular, your compiled package size must not exceed 50MB. Because this limit applies to the compressed package, you may be able to include more than 50MB of source files, but keep the limit in mind.

Integrate all the things

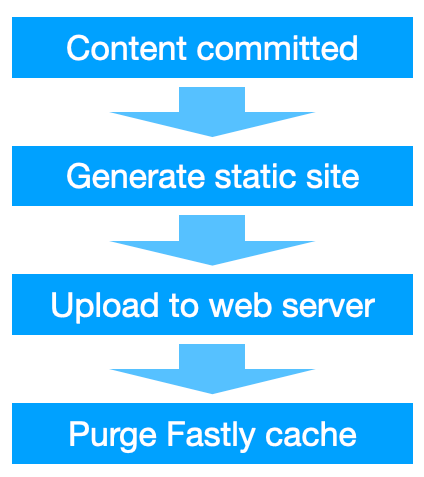

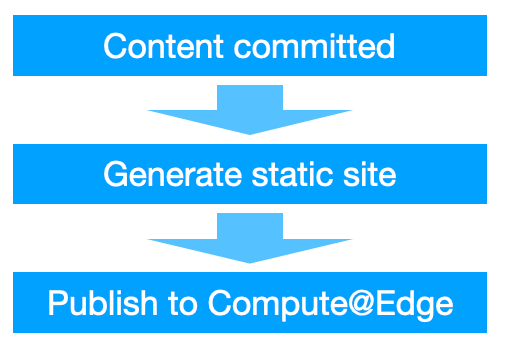

So how will compute-js-static-publish change the way your current site is built and deployed? If you’re already using continuous integration (CI) to deploy your site (if not, check out GitHub Actions and how to use it with Compute@Edge!), you can publish directly to Fastly from CI.

The compute-js-static-publish command fits right into your deployment pipeline, since it accepts an argument that specifies a Fastly service ID. If your current pipeline builds your site, uploads the output to a web server, and then purges the CDN cache, you would replace those last two steps with a step that generates the Compute@Edge project and publishes it to Fastly. That step might look something like this:

```shell
npx @fastly/compute-js-static-publish --public-dir=/path/to/built/site --service-id=<YOUR_SERVICE_ID>
cd compute-js
fastly compute publish
```

Notice that there’s no more uploading of files to a web server. And because the edge program contains all of your static assets, no purge step is needed either: as soon as your package has propagated to the Fastly edge nodes, all of them will have the updated content.
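If your CI runs Node.js scripts, the pipeline step above could be described as data and executed step by step. The sketch below is illustrative only: `deploySteps` and its option names are hypothetical, and just the commands and flags come from this article.

```javascript
// Hypothetical CI helper: describes the two deploy steps from the pipeline
// above as command/args/cwd records. The helper and its option names are
// illustrative; only the commands and flags come from this article.
function deploySteps({ publicDir, serviceId, projectDir = "compute-js" }) {
  if (!publicDir) throw new Error("publicDir is required");
  if (!serviceId) throw new Error("A Fastly service ID is required");
  return [
    {
      // Generate (or regenerate) the Compute@Edge project for the built site.
      command: "npx",
      args: ["@fastly/compute-js-static-publish", `--public-dir=${publicDir}`, `--service-id=${serviceId}`],
      cwd: ".",
    },
    {
      // Build and deploy the package, with the static files compiled in.
      command: "fastly",
      args: ["compute", "publish"],
      cwd: projectDir,
    },
  ];
}
```

Each step could then be run with `child_process.execFileSync(step.command, step.args, { cwd: step.cwd, stdio: "inherit" })`. In CI the Fastly CLI also needs credentials, typically an API token supplied through the `FASTLY_API_TOKEN` environment variable.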

Which static site generator do you use? We have a preset for that.

While we were designing this tool, we got internal feedback from people using various site generators. To support these users, we often had to give generator-specific instructions. Different site generators produce output in different ways (for example, with different output directory names), but everyone using a given generator uses the same settings. So we decided to bake in the concept of presets: default settings for a specific generator, with the ability to override individual settings as necessary.

Let’s say that you use create-react-app to bootstrap your application. You use its npm run start command to start the dev server, and when you’re ready to go live, you run npm run build. This places all of the output files in a directory called build, and puts static files (files with hashed filenames that can be cached forever) in a directory called build/static. So rather than having to provide these directory names to the compute-js-static-publish command, you can simply run it like this:

```shell
npx @fastly/compute-js-static-publish --preset=create-react-app
```

Right now, we've got presets for Vite, SvelteKit, Gatsby, Docusaurus, and even Next.js (for bundles created with the next export command).
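The idea of "defaults per generator, overridable per setting" can be sketched in a few lines. Only the create-react-app directory names below come from this article; the option names (`publicDir`, `staticDirs`) and the `resolveConfig` function are hypothetical and are not the tool's real configuration API.

```javascript
// Sketch of "presets with overrides". Only the create-react-app directory
// names come from this article; the option names (publicDir, staticDirs)
// and resolveConfig are hypothetical, not the tool's real configuration API.
const PRESETS = {
  "create-react-app": { publicDir: "./build", staticDirs: ["./build/static"] },
};

function resolveConfig(presetName, overrides = {}) {
  if (!Object.prototype.hasOwnProperty.call(PRESETS, presetName)) {
    throw new Error(`Unknown preset: ${presetName}`);
  }
  // Start from the preset's defaults (copying the array so callers can't mutate it)...
  const preset = PRESETS[presetName];
  const config = { ...preset, staticDirs: [...preset.staticDirs] };
  // ...then let any explicitly provided option win. Undefined means "not given".
  for (const [key, value] of Object.entries(overrides)) {
    if (value !== undefined) config[key] = value;
  }
  return config;
}
```

For example, `resolveConfig("create-react-app", { publicDir: "./out" })` keeps the preset's static directory but uses `./out` as the public directory, which mirrors running the command with a preset plus one explicit flag.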

Compute@Edge, a platform for everything

Compute@Edge is a general-purpose computing platform with the potential to run just about anything at the edge. With tools like this one, we hope to give you even more ideas about what you can do on the platform.

At Fastly, we want to let you run even more code at the edge and develop for it faster, using a wide range of new and familiar tools. We’d love to know if you’re using these tools and what you're doing with them, so come and tell us on Twitter!