At last year’s customer summit in San Francisco, we heard from various customers, industry leaders, and Fastly engineers on themes like the future of the edge, solving (almost) anything in VCL, and the key to successfully growing teams.

In this post, I’ll recap a talk given by Alex Russell, a software engineer on the “performance obsessed” Google Chrome team. At the time of his talk, the team had more than 120 performance projects underway. Alex focused on service workers and progressive web apps, illustrating how to provide reliable offline experiences and avoid the dreaded “Uncanny Valley.”

Service workers: a programmable, in-browser proxy that you control

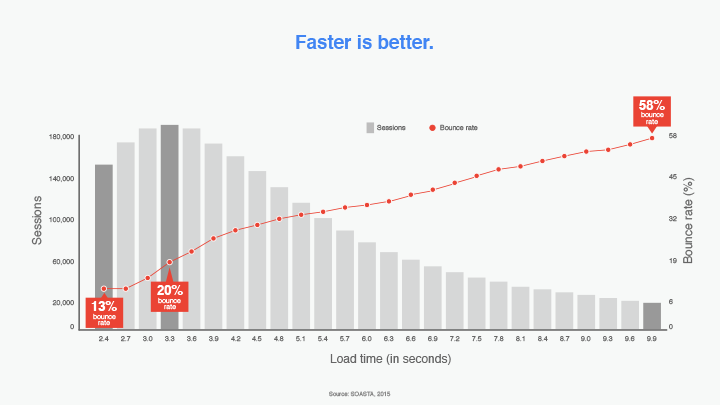

“We know that faster is better,” Alex pointed out. “It’s corroborated with data by dozens of studies both internally and externally.”

The issue is, it’s not always clear what we mean by “faster.” We mean faster when a page loads, but we don’t have good data on when a page actually loads. Interactive content is what users want, but it’s hard to get to. The web grew up in a world where speed wasn’t great, but it also wasn’t hugely variable. Today, despite the proliferation of mobile devices, mobile networks make reasonable variance and latency almost impossible to achieve. As Alex pointed out: “The edges have gotten significantly better and the networks we’re connecting across aren’t getting better in the same absolute terms.”

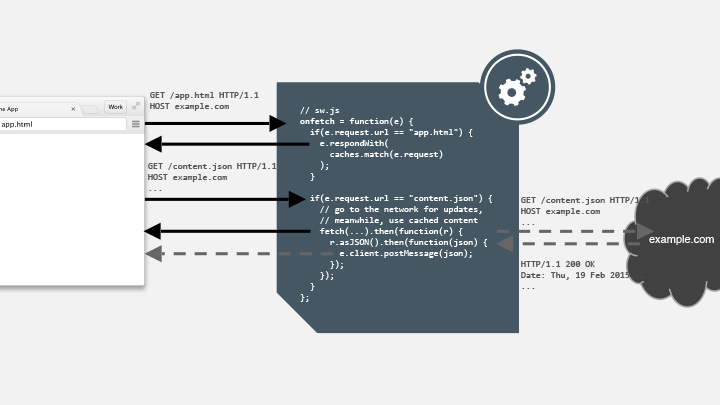

The Chrome team decided to try out service workers, which enable reliable performance by storing content with a new Cache API. According to Google’s web team, “A service worker is a script that your browser runs in the background, separate from a web page, opening the door to features that don't need a web page or user interaction.” Service workers let web developers respond to network requests made by their web applications, so those applications keep working even while offline and developers keep complete control over the experience.
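To make the idea of a programmable proxy concrete, here is a minimal cache-first sketch. It is not the Chrome team's code: the browser's Cache API and fetch() are replaced by hypothetical stand-ins (KeyValueCache, Fetcher, MemoryCache) keyed by URL string, so the logic can run outside a service worker.

```typescript
// Hypothetical stand-ins for the browser's Cache API and fetch(),
// keyed by URL string so the sketch runs outside a service worker.
interface KeyValueCache<T> {
  get(url: string): Promise<T | undefined>;
  put(url: string, value: T): Promise<void>;
}
type Fetcher<T> = (url: string) => Promise<T>;

// Cache-first: answer from the cache when possible; otherwise go to the
// network and store a copy so the next request can be served offline.
async function cacheFirst<T>(
  url: string,
  cache: KeyValueCache<T>,
  fetcher: Fetcher<T>,
): Promise<T> {
  const cached = await cache.get(url);
  if (cached !== undefined) {
    return cached;
  }
  const fresh = await fetcher(url);
  await cache.put(url, fresh);
  return fresh;
}

// Simple in-memory cache used for demonstration.
class MemoryCache<T> implements KeyValueCache<T> {
  private store = new Map<string, T>();
  async get(url: string): Promise<T | undefined> {
    return this.store.get(url);
  }
  async put(url: string, value: T): Promise<void> {
    this.store.set(url, value);
  }
}
```

In a real service worker, this logic would typically live in a fetch event handler passed to event.respondWith(), using caches.match() and cache.put() with Request and Response objects. Because a Response body can only be read once, the copy that goes into the cache is normally made with response.clone().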

For example, the first time a user visits example.com, a service worker gets installed. Every request thereafter is yours to deal with, letting you choose how you design your own reliable offline experience:

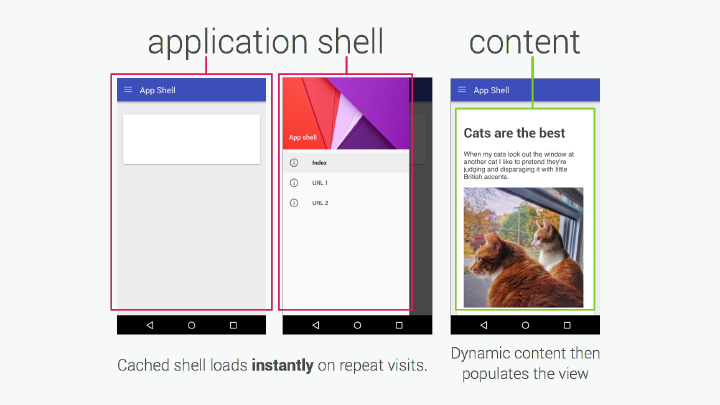

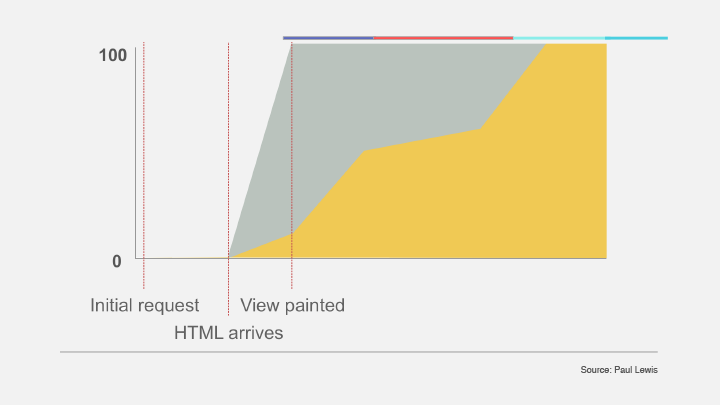
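One way to use that control is network-first with an offline fallback: prefer fresh content, and only when the network fails serve a cached copy or a cached offline page. This sketch assumes a hypothetical offline page at /offline.html and uses plain functions (NetworkFetch, CacheLookup) in place of fetch() and the Cache API so it runs outside a browser.

```typescript
// Hypothetical read-only view of a cache and a network fetch, keyed by URL.
type CacheLookup<T> = (url: string) => Promise<T | undefined>;
type NetworkFetch<T> = (url: string) => Promise<T>;

// Network-first with an offline fallback: if the network request rejects
// (e.g. the device is offline), fall back to a cached copy of the same URL,
// and finally to a cached offline page.
async function networkFirstWithFallback<T>(
  url: string,
  network: NetworkFetch<T>,
  lookup: CacheLookup<T>,
  offlinePageUrl = "/offline.html", // hypothetical path
): Promise<T> {
  try {
    return await network(url);
  } catch {
    const cached = await lookup(url);
    if (cached !== undefined) return cached;
    const offline = await lookup(offlinePageUrl);
    if (offline !== undefined) return offline;
    throw new Error(`No network and nothing cached for ${url}`);
  }
}
```

Network-first suits content that changes often (news, feeds), while cache-first suits static assets; a service worker is free to pick a different strategy per request.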

Service workers let you design reliable offline experiences as you would for a native application: render a shell instantly, then fill it with content.

This way, you can approach application construction the way JavaScript frameworks have for “a number of years”: load the framework, load the app, and then the app loads data.
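The app shell idea can be sketched as an install-time precache step. The shell URLs and the ShellFetcher type below are hypothetical, and a Map stands in for the browser's Cache API; the all-or-nothing behavior mirrors what Cache.addAll() provides, so a partially cached shell is never served.

```typescript
type ShellFetcher<T> = (url: string) => Promise<T>;

// Hypothetical "shell" resources: the minimal HTML, CSS, and JS needed to
// paint the UI before any content data arrives.
const SHELL_URLS: readonly string[] = ["/", "/app.css", "/app.js"];

// Fetch every shell resource first, and write to the cache only if all
// fetches succeeded. Promise.all rejects on the first failure, so in that
// case nothing is stored.
async function precacheShell<T>(
  urls: readonly string[],
  fetcher: ShellFetcher<T>,
  cache: Map<string, T>,
): Promise<void> {
  const bodies = await Promise.all(urls.map((u) => fetcher(u)));
  urls.forEach((u, i) => cache.set(u, bodies[i]));
}
```

In a service worker, this step would typically run inside event.waitUntil() in the install handler, so the worker is not considered installed until the shell is cached. Navigations can then be answered with the cached shell while the app fetches its data separately.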

What’s next: foreign fetch service workers

Currently, a service worker can only intercept requests to third parties if they’re permitted by the cross-origin policy for that host. As a result, the only way a third party can benefit from service workers is to integrate with the one deployed from the root domain, and even then, visibility into requests for that third party is limited by cross-origin policies. To address this, the Chrome team is working on foreign fetch service workers, which let third parties handle these requests with their own service worker, allowing full visibility and optimization across all sites that use the same third-party service. With foreign fetch, a service worker can opt in to intercepting requests from any origin to resources within its scope, answering requests that would otherwise hit the network. For example, it could recognize a request for a font from a CDN and route it to a shared, client-side cache that other origins are also using.
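Foreign fetch was experimental, and its registration API is not shown here. What the paragraph relies on is scope matching: a request belongs to a worker when its URL starts with one of the worker's scope URLs, and when several scopes match, the longest (most specific) one wins. The sketch below assumes already-normalized absolute URLs and uses a hypothetical font CDN origin.

```typescript
// Returns the longest declared scope that the request URL falls under,
// or undefined if the URL is outside every scope. Scopes are URL prefixes;
// when several match, the most specific (longest) one is chosen.
function matchScope(requestUrl: string, scopes: readonly string[]): string | undefined {
  let best: string | undefined;
  for (const scope of scopes) {
    if (requestUrl.startsWith(scope) && (best === undefined || scope.length > best.length)) {
      best = scope;
    }
  }
  return best;
}

// Hypothetical scopes a third-party font CDN might declare.
const FONT_CDN_SCOPES: readonly string[] = [
  "https://fonts.example-cdn.com/",
  "https://fonts.example-cdn.com/css/",
];
```

A request from any site for https://fonts.example-cdn.com/css/roboto.css would fall under the /css/ scope, which is what lets one third-party worker serve every origin that embeds the same font.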

This way, third parties like font CDNs, analytics services, and ad networks can each implement their own service workers, leveraging their full power without deep integration with those deployed by first parties.

Reaching your users with progressive web apps

In addition to reliable performance, you need a way to reach your users, and it’s far easier to get them into experiences with a link than with an app store pointer. Alex used the example of The Washington Post’s progressive web app (PWA): from wapo.com, mobile users can add The Washington Post to their home screens, making it easy to get to without having to download anything from the app store.

PWAs allow you to provide an engaging experience for your users without having to build something multiple times. If you’re interested in building your own PWA, you can look at The Washington Post’s web app manifest at washingtonpost.com/pwa/manifest.json, and Chrome’s Lighthouse tool can help you verify that your PWA delivers an app-like experience.
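A web app manifest is a small JSON file describing how the app should look and launch once added to the home screen. The sketch below types a subset of real manifest members (name, short_name, start_url, display, icons, and colors) and runs a simplified, hypothetical readiness check; the criteria Chrome and Lighthouse actually apply are more detailed and have changed over time, and the example values are invented.

```typescript
// A subset of Web App Manifest members.
interface ManifestIcon {
  src: string;
  sizes: string; // space-separated, e.g. "192x192 512x512"
  type?: string;
}
interface WebAppManifest {
  name?: string;
  short_name?: string;
  start_url?: string;
  display?: "fullscreen" | "standalone" | "minimal-ui" | "browser";
  background_color?: string;
  theme_color?: string;
  icons?: ManifestIcon[];
}

// Simplified, hypothetical "ready for the home screen?" check.
// Returns a list of problems; an empty list means the checks passed.
function manifestProblems(m: WebAppManifest): string[] {
  const problems: string[] = [];
  if (!m.name && !m.short_name) problems.push("missing name or short_name");
  if (!m.start_url) problems.push("missing start_url");
  if (m.display === undefined || m.display === "browser") {
    problems.push("display should be standalone, fullscreen, or minimal-ui");
  }
  const hasLargeIcon = (m.icons ?? []).some(
    (icon) => icon.sizes.split(/\s+/).indexOf("192x192") !== -1,
  );
  if (!hasLargeIcon) problems.push("no 192x192 icon");
  return problems;
}

// Hypothetical example manifest for a news site.
const exampleManifest: WebAppManifest = {
  name: "Example News",
  short_name: "News",
  start_url: "/?source=homescreen",
  display: "standalone",
  background_color: "#ffffff",
  theme_color: "#000000",
  icons: [{ src: "/icons/icon-192.png", sizes: "192x192", type: "image/png" }],
};
```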

Less time to interactivity for better performance

To follow performance best practices, whether building PWAs or anything else, the Chrome team uses the RAIL performance model:

Respond: 100ms

Animate: < 8ms

Idle: work in 50ms chunks

Load: 1,000ms to interactive
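The idle guideline can be sketched as a time-boxed task runner that yields back to the browser between chunks, so input can still be handled promptly. The now and yieldToBrowser parameters are hypothetical injections that let the example run outside a browser; in a page you might pass () => performance.now() and a function that resolves after setTimeout(..., 0).

```typescript
// Process a list of small tasks in chunks of roughly `budgetMs` each,
// yielding between chunks. Returns the number of chunks used.
// Note: a single task longer than the budget still overruns it.
async function runInChunks<T>(
  items: readonly T[],
  work: (item: T) => void,
  now: () => number,
  yieldToBrowser: () => Promise<void>,
  budgetMs = 50,
): Promise<number> {
  let chunks = 0;
  let i = 0;
  while (i < items.length) {
    const start = now();
    chunks++;
    // Always do at least one item per chunk so progress is guaranteed.
    do {
      work(items[i]);
      i++;
    } while (i < items.length && now() - start < budgetMs);
    // Give the browser a chance to handle input before the next chunk.
    if (i < items.length) await yieldToBrowser();
  }
  return chunks;
}
```

With a fake clock where each task takes 20ms, five tasks split into two chunks (three tasks, then two), with one yield in between.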

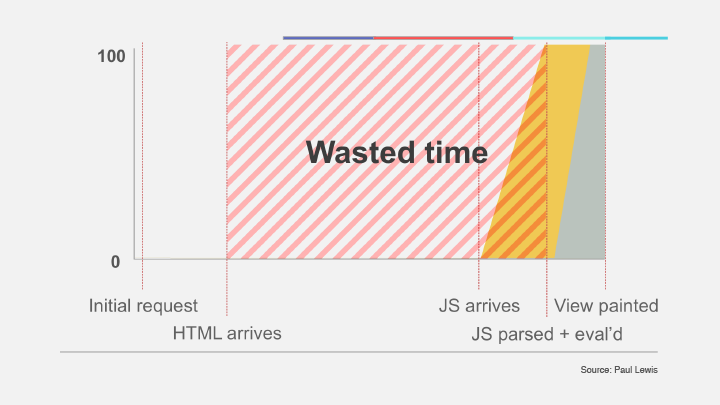

With traditional websites, there’s “a lot of wasted time” waiting on the network:

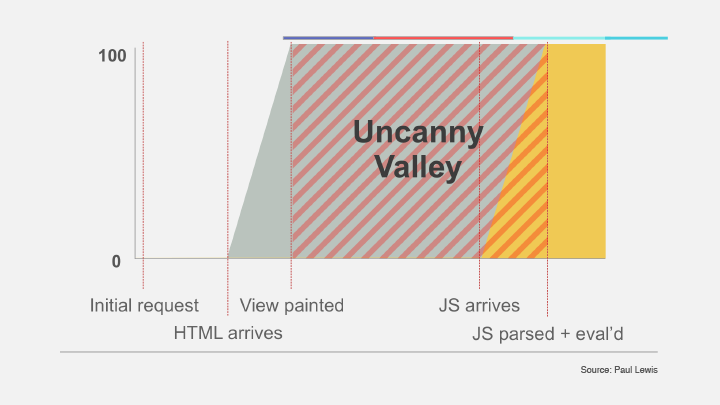

Today, people are building applications with server-side rendering of JavaScript apps: the HTML arrives immediately, then the JavaScript loads, and only then can you actually use the site. Google’s Paul Lewis calls this period, when the page is visible but not yet usable, the Uncanny Valley.

What we really want is the ability to use “all the bits onscreen” as the site arrives and paints.

“Interactivity is what users actually care about,” Alex pointed out. “You don’t want the Uncanny Valley where you don’t trust the UI.”

To demonstrate the ideal page load, Alex showed us shop.polymer-project.org. The page loaded quickly, and everything that showed up was also interactive. Although there’s a lot of script time in total, most of it is “chunked,” so the site stays responsive. The chunks arrive as the user needs them, creating a smooth user experience and eliminating the Uncanny Valley.

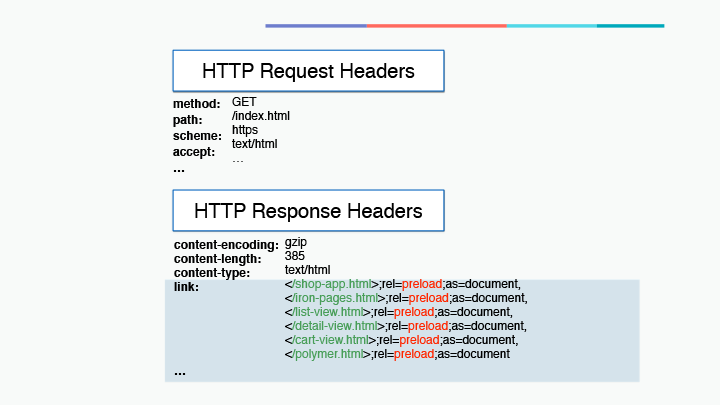

Delivering these chunks is even easier with HTTP/2. Using the protocol’s server push feature, you can proactively “push” subresources to the client in parallel, sending the transitive dependencies of top-level content as soon as you know what’s coming down the pike. For example, when a request for index.html comes in, you can start sending responses with Link rel=preload headers through an HTTP/2-capable server (“like Fastly”).

As soon as the initial response has started going out to the browser, you can push the group of subresources you know the client is going to need:

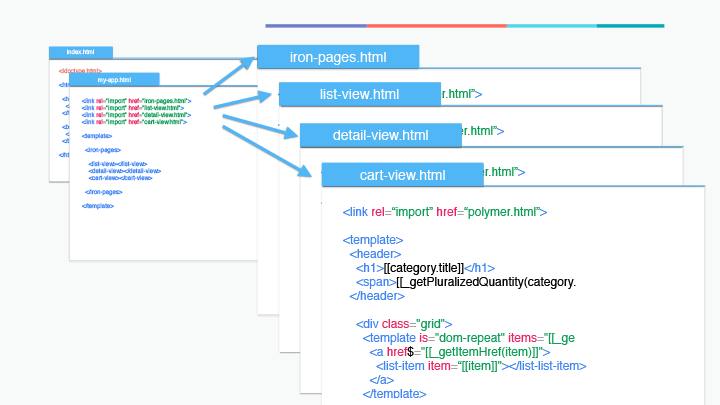

For example, the components shown above can start being sent as soon as the initial response goes out, without bundling them into a single concatenated file, which makes cache invalidation a lot easier.
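The preload hints themselves are ordinary Link response headers. The helper below builds such a header for a hypothetical set of index.html dependencies; whether a particular HTTP/2 server or CDN turns these hints into server pushes depends on its configuration, which the talk does not detail.

```typescript
// A subset of destination values for rel=preload's `as` attribute.
type PreloadAs = "script" | "style" | "font" | "image" | "fetch";

interface PreloadHint {
  path: string;
  as: PreloadAs;
  crossorigin?: boolean; // fonts are fetched in CORS mode, so they need this
}

// Builds a Link header value such as
//   </app.js>; rel=preload; as=script, </app.css>; rel=preload; as=style
function linkPreloadHeader(hints: readonly PreloadHint[]): string {
  return hints
    .map((h) => {
      const parts = [`<${h.path}>`, "rel=preload", `as=${h.as}`];
      if (h.crossorigin) parts.push("crossorigin");
      return parts.join("; ");
    })
    .join(", ");
}

// Hypothetical dependencies of index.html, each sent as its own file so it
// can be cached and invalidated independently.
const indexDeps: PreloadHint[] = [
  { path: "/app.js", as: "script" },
  { path: "/app.css", as: "style" },
  { path: "/fonts/body.woff2", as: "font", crossorigin: true },
];
```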

You can also lazy-load and create secondary routes with selective upgrade, a clever trick the Polymer team came up with to make components appear only when you really need them. When the user navigates to another section, an element that was already in your document suddenly gets instantiated because the view switched.
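The on-demand part of this can be sketched as a memoized loader: each view's code is loaded the first time the user navigates to it, and repeated or concurrent navigations share the same in-flight promise. ViewLoader and createLazyRouter are hypothetical names, and Polymer's selective upgrade of elements already in the document is not reproduced here; in many stacks the loader would wrap a dynamic import().

```typescript
// Hypothetical function that loads the code for one view.
type ViewLoader = (view: string) => Promise<void>;

// Returns ensureView(view), which loads each view at most once. A failed
// load is forgotten so a later navigation can retry it.
function createLazyRouter(load: ViewLoader) {
  const pending = new Map<string, Promise<void>>();
  return function ensureView(view: string): Promise<void> {
    let p = pending.get(view);
    if (!p) {
      p = load(view).catch((err) => {
        pending.delete(view);
        throw err;
      });
      pending.set(view, p);
    }
    return p;
  };
}
```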

In order to avoid the Uncanny Valley when structuring and serving PWAs, the Polymer team developed the PRPL Pattern as a guide:

Push resources for initial route

Render the initial route ASAP

Pre-cache code for remaining routes

Lazy-load & create next routes on demand
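The two “P” steps amount to a planning problem: given which fragments each route needs, push the initial route's fragments and precache everything else exactly once. The route table and file paths below are hypothetical (loosely in the spirit of the Shop demo, not its actual files); the Render and Lazy-load steps happen in the client and are not modeled here.

```typescript
// Hypothetical route table: each route lists the fragments it depends on.
const ROUTES: Record<string, readonly string[]> = {
  "/": ["/src/shop-app.js", "/src/shop-home.js"],
  "/list": ["/src/shop-app.js", "/src/shop-list.js"],
  "/cart": ["/src/shop-app.js", "/src/shop-cart.js"],
};

interface PrplPlan {
  push: string[];     // Push: resources for the initial route
  precache: string[]; // Pre-cache: code for the remaining routes, deduplicated
}

function planPrpl(initialRoute: string, routes: Record<string, readonly string[]>): PrplPlan {
  const push = (routes[initialRoute] ?? []).slice();
  const seen = new Set<string>(push);
  const precache: string[] = [];
  for (const route of Object.keys(routes)) {
    if (route === initialRoute) continue;
    for (const dep of routes[route]) {
      // Skip anything already pushed or already queued for precaching.
      if (!seen.has(dep)) {
        seen.add(dep);
        precache.push(dep);
      }
    }
  }
  return { push, precache };
}
```

For a first visit to /list, the plan pushes the shared app code plus the list view, then precaches only the home and cart views.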

Alex encouraged everyone to try the Polymer App Toolbox and provided a list of resources (below) for further exploring service workers and PWAs: “I can’t wait to see how much faster we make the web together.”

Resources:

Lighthouse: github.com/GoogleChrome/lighthouse

Offline Web Applications Udacity Course: udacity.com/course/offline-web-applications-ud899

Polymer App Toolbox: polymer-project.org/1.0/toolbox/

The Washington Post PWA: wapo.com/pwa

“Shop” PRPL PWA: shop.polymer-project.org

Other great PWA experiences: pwa.rocks

Progressive Web App codelabs: developers.google.com/web/progressive-web-apps

Chrome Developers YouTube channel: youtube.com/ChromeDevelopers

Watch the video of Alex’s talk below, and stay tuned — we’ll continue to recap talks from Altitude 2016 and beyond.