In a previous post, HashiCorp’s Seth Vargo introduced the Terraform infrastructure-as-code tool for building, changing, and versioning infrastructure. He went into detail on how to create and manage a Fastly service which caches a static website from an AWS S3 bucket using Terraform. In this post, I’ll cover two cases using Terraform with Fastly: first we’ll create and manage an originless service and then we’ll create and manage a Google Compute Engine instance with a Fastly service in front of it.

Computers are great at correctly repeating complicated tasks, so I make sure to delegate that work to software, leaving more time for me to work on delivering solutions. I’ll write tests and code, and let software take it from there. Here are a few things I automate (and how they help):

- Unit tests help verify the correctness of software modules
- Continuous integration verifies that software modules integrate
- Automated machine configuration verifies that the operating system and software modules integrate

Building blocks larger than individual servers, such as DNS, cloud computing, and CDN providers, tend to have their own APIs and specialized tooling, but tools that orchestrate configuration across services run by multiple providers are rare. Luckily, Terraform is exactly that tool: you declare the end state you want, and Terraform makes the changes needed to reach it.
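To make the declarative idea concrete, here is a toy model in JavaScript. It is not Terraform's actual implementation: states are plain objects keyed by a resource address, the attributes are simplified, and the functions `plan` and `apply` are hypothetical. A plan is just the list of create, update, and delete actions that turn the current state into the desired one.

```javascript
// Toy model of declarative reconciliation (not Terraform's real algorithm).
// A state maps a resource address to that resource's attributes.
function plan(current, desired) {
  const actions = [];
  for (const [addr, attrs] of Object.entries(desired)) {
    if (!(addr in current)) {
      actions.push({ action: "create", addr, attrs });
    } else if (JSON.stringify(current[addr]) !== JSON.stringify(attrs)) {
      // Naive comparison: attribute key order matters here.
      actions.push({ action: "update", addr, attrs });
    }
  }
  for (const addr of Object.keys(current)) {
    if (!(addr in desired)) {
      actions.push({ action: "delete", addr });
    }
  }
  return actions;
}

// Apply a plan to a copy of the current state and return the new state.
function apply(current, actions) {
  const next = { ...current };
  for (const step of actions) {
    if (step.action === "delete") {
      delete next[step.addr];
    } else {
      next[step.addr] = step.attrs;
    }
  }
  return next;
}

// Usage: changing one attribute yields a single "update" action.
const current = { "fastly_service_v1.myservice": { default_ttl: 10 } };
const desired = { "fastly_service_v1.myservice": { default_ttl: 60 } };
console.log(plan(current, desired));
```

Once a plan has been applied, planning again against the same desired state yields no actions, which is why re-running an unchanged configuration is safe.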

Originless Fastly

First, we’ll set up a Fastly service without an origin server: instead of fetching and caching HTTP responses from an origin, we’ll generate a synthetic HTTP response in Fastly using Varnish Configuration Language (VCL).

Because we generate the HTTP response on every request, we can make it dynamic and base it on any information in the client’s HTTP request. For example, Fastly provides access to a geolocation database keyed on the client’s IP address. We’ll use it to look up the country name and generate a simple HTML page that greets the user and shows the country they are coming from.

We’ll code the logic in VCL. I’ve shortened this example for demonstration purposes; for real services, you should base your custom VCL on the Fastly VCL boilerplate. The logic is as follows: as soon as we receive a request, vcl_recv raises a custom error status (800), which jumps to the vcl_error subroutine. There we turn it into a 200 response with a synthetic body based on the country name associated with the client’s IP address.

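```vcl
sub vcl_recv {
  # Skip the origin entirely: raise a custom status that jumps to vcl_error
  error 800;
}

sub vcl_error {
  if (obj.status == 800) {
    # Turn the custom status into a normal 200 response
    set obj.status = 200;
    set obj.response = "OK";
    set obj.http.Content-Type = "text/html; charset=utf-8";
    # Build the body from the client's geolocated country name
    synthetic "Hello, " + geoip.country_name.utf8 + "!";
    return (deliver);
  }
}
```

We’ll need some Terraform configuration to set up this service: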

```hcl
# Configure the Fastly provider
provider "fastly" {
  api_key = "${var.fastly_api_key}"
}

# Create a Service
resource "fastly_service_v1" "myservice" {
  name = "${var.domain}"

  domain {
    name = "${var.domain}"
  }

  default_ttl   = 10
  force_destroy = true

  # Upload the VCL file above as the service's main VCL
  vcl {
    name    = "main"
    main    = true
    content = "${file(var.vcl)}"
  }
}
```

As Seth explained in the previous post, the workflow with Terraform is to “plan” the changes as a dry-run to see what would be changed and then to “apply” the changes.

If we run “terraform apply” and pass in the appropriate variables (the Fastly API key, the domain, and the path to the VCL file), the service is live across the Fastly network a few seconds later.

When I visit the test URL from London I receive: “Hello, United Kingdom!” and when I visit it from San Francisco I receive: “Hello, United States!”
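To check the response format without deploying, a small JavaScript function can mirror what the vcl_error subroutine above builds. This is only a local model with a hypothetical function name: the country name is passed in by hand here, whereas on Fastly it comes from `geoip.country_name.utf8`.

```javascript
// Local model of the synthetic response built in vcl_error.
// countryName stands in for geoip.country_name.utf8 on Fastly.
function syntheticGreeting(countryName) {
  return {
    status: 200,
    response: "OK",
    headers: { "Content-Type": "text/html; charset=utf-8" },
    body: "Hello, " + countryName + "!",
  };
}

console.log(syntheticGreeting("United Kingdom").body); // prints "Hello, United Kingdom!"
```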

Because it only takes a few seconds to roll changes out globally, changing the VCL and re-running “terraform apply” is a nice way to iteratively make changes and test the outcome.

Google Compute Engine origin

Most websites need more computation than VCL currently allows, such as access to a large, fast relational database or to the libraries of a general-purpose programming language. As an example, I would like to generate a QR code that encodes the request URL. I’ll walk through the configuration to create a Google Compute Engine firewall and instance, and then a Fastly service that uses the instance as its origin.

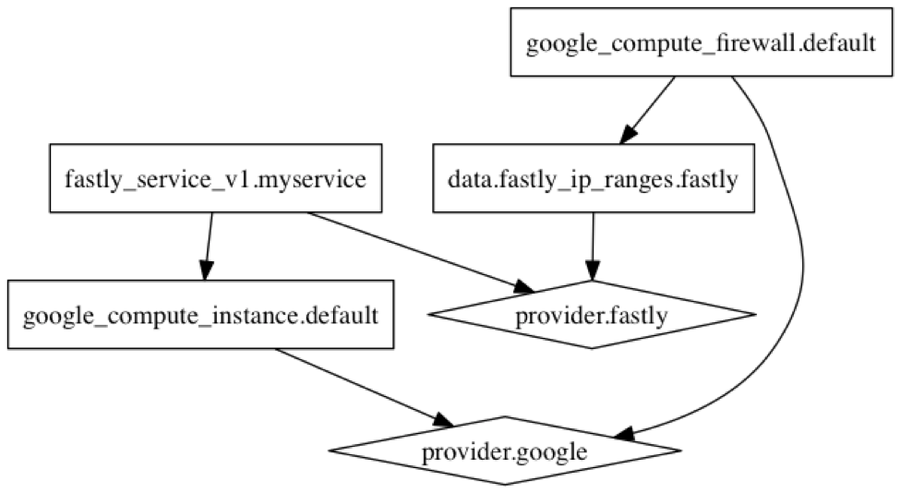

This example is a perfect fit for Terraform: it’s more involved and has dependencies between Google Cloud and Fastly. “terraform graph” can generate a graph which represents the services created and dependencies between them; here you can see that the Fastly service depends on the Google Compute instance:

We’ll generate the QR code with Node.js, which takes the request’s Host header and URL and generates a QR code in SVG format:

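```javascript
const http = require('http');
// qr-image is a third-party npm package (npm install qr-image)
const qr = require('qr-image');

const server = http.createServer((req, res) => {
  // Rebuild the URL the client requested from the Host header and path
  const url = 'http://' + req.headers.host + req.url;
  // Render that URL as a QR code; qr.image returns a readable SVG stream
  const code = qr.image(url, { type: 'svg' });
  res.setHeader('Content-Type', 'image/svg+xml');
  // Allow Fastly and browsers to cache each code for 60 seconds
  res.setHeader('Cache-Control', 'max-age=60');
  code.pipe(res);
});

// The Fastly backend and firewall rule below use port 80; the install
// script (not shown) is assumed to bridge port 80 to this port.
server.listen(8000);
```

Now we need the Terraform configuration for the Fastly service. It uses the GCE instance’s IP address as the origin and gzips the SVG passing through the service. Take note of the data source that fetches the Fastly cache node IP address ranges; we’ll use these later to allow access to the GCE instance only from Fastly cache nodes.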

```hcl
# Configure the Fastly Provider
provider "fastly" {
  api_key = "${var.fastly_api_key}"
}

# Fetch the IP address ranges used by Fastly cache nodes
data "fastly_ip_ranges" "fastly" {}

# Create a Service
resource "fastly_service_v1" "myservice" {
  name = "${var.domain}"

  domain {
    name = "${var.domain}"
  }

  # Use the GCE instance's external IP address as the origin
  backend {
    address = "${google_compute_instance.default.network_interface.0.access_config.0.assigned_nat_ip}"
    name    = "Origin"
    port    = 80
  }

  # Compress SVG responses before delivering them to clients
  gzip {
    name          = "file extensions and content types"
    content_types = ["image/svg+xml"]
  }

  default_ttl   = 60
  force_destroy = true
}
```

The second part of the Terraform configuration sets up the GCE instance. We’ll also create a firewall so that the instance only accepts traffic from Fastly cache nodes.

```hcl
# Configure the Google Cloud provider
provider "google" {
  credentials = "${file(var.account_json)}"
  project     = "${var.gcp_project}"
  region      = "${var.gce_region}"
}

# Create a new GCE instance
resource "google_compute_instance" "default" {
  name         = "lbrocard-terraform"
  machine_type = "${var.gce_machine_type}"
  zone         = "${var.gce_zone}"
  tags         = ["www-node"]

  disk {
    image = "${var.gce_image}"
  }

  disk {
    type    = "local-ssd"
    scratch = true
  }

  network_interface {
    network = "default"

    access_config {
      // Ephemeral IP
    }
  }

  scheduling {
    preemptible = true
  }

  # Install script (not shown); GCE runs it when the instance boots
  metadata_startup_script = "${file("scripts/install.sh")}"
}

# Allow HTTP only from Fastly cache nodes to instances tagged "www-node"
resource "google_compute_firewall" "default" {
  name    = "tf-www-firewall"
  network = "default"

  allow {
    protocol = "tcp"
    ports    = ["80"]
  }

  source_ranges = ["${data.fastly_ip_ranges.fastly.cidr_blocks}"]
  target_tags   = ["www-node"]
}
```

With the appropriate variables and install script in place, running “terraform apply” creates the GCE firewall and the GCE instance in parallel, then creates the Fastly service in front of the instance once the instance’s IP address is known. This takes a minute or so.
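What can run in parallel is determined by the references in the configuration: the firewall reads the Fastly IP ranges data source, and the Fastly service reads the instance’s IP address. As a rough sketch (Terraform’s real graph also contains provider and other internal nodes, and the `waves` function is hypothetical), grouping the resources into dependency “waves” shows which ones can be created together:

```javascript
// Dependencies taken from the references in the configuration above:
// each node lists the nodes whose attributes it reads.
const deps = {
  "data.fastly_ip_ranges.fastly": [],
  "google_compute_instance.default": [],
  "google_compute_firewall.default": ["data.fastly_ip_ranges.fastly"],
  "fastly_service_v1.myservice": ["google_compute_instance.default"],
};

// Group nodes into waves: every node in a wave depends only on nodes in
// earlier waves, so nodes within one wave can be created in parallel.
function waves(graph) {
  const done = new Set();
  const result = [];
  while (done.size < Object.keys(graph).length) {
    const ready = Object.keys(graph).filter(
      (node) => !done.has(node) && graph[node].every((d) => done.has(d))
    );
    if (ready.length === 0) throw new Error("dependency cycle");
    ready.forEach((node) => done.add(node));
    result.push(ready);
  }
  return result;
}

const createOrder = waves(deps);
// Destruction runs the other way: dependents go before what they depend on.
const destroyOrder = [...createOrder].reverse();
console.log(createOrder);
```

Here `createOrder` puts the data source and the instance in the first wave, and the firewall and the Fastly service in the second.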

Then, when I visit the test URL, I receive a QR code that encodes the test URL itself.
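The test URL works because requests reach the instance through Fastly. Since the firewall rule only allows port 80 traffic whose source address falls in Fastly’s ranges, the instance is meant to be reachable only that way. Conceptually, `source_ranges` is a source-address allowlist; the sketch below models that check for IPv4 in JavaScript. The function names are hypothetical, and the CIDR blocks are RFC 5737 documentation ranges standing in for the real Fastly list that the `fastly_ip_ranges` data source fetches.

```javascript
// Parse dotted-quad IPv4 text into a number in [0, 2^32).
function ipv4ToInt(ip) {
  const parts = ip.split(".");
  if (parts.length !== 4 || !parts.every((p) => /^\d{1,3}$/.test(p) && Number(p) <= 255)) {
    throw new Error("invalid IPv4 address: " + ip);
  }
  return parts.reduce((acc, p) => acc * 256 + Number(p), 0);
}

// True if ip lies inside the CIDR block, e.g. "203.0.113.0/24".
function inCidr(ip, cidr) {
  const [base, bitsText] = cidr.split("/");
  const bits = Number(bitsText);
  if (!Number.isInteger(bits) || bits < 0 || bits > 32) {
    throw new Error("invalid prefix length: " + cidr);
  }
  // Build the network mask; JS shift counts are taken mod 32, so /0 is special-cased.
  const mask = bits === 0 ? 0 : (~0 << (32 - bits)) >>> 0;
  // & operates on 32-bit integers; >>> 0 keeps both sides unsigned.
  return ((ipv4ToInt(ip) & mask) >>> 0) === ((ipv4ToInt(base) & mask) >>> 0);
}

// Admit a connection only if its source matches one of the ranges.
function allowed(sourceIp, ranges) {
  return ranges.some((cidr) => inCidr(sourceIp, cidr));
}

// Example ranges only (documentation blocks), not Fastly's real list.
const exampleRanges = ["203.0.113.0/24", "198.51.100.0/25"];
console.log(allowed("203.0.113.7", exampleRanges)); // prints true
```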

After I’ve finished testing, a “terraform destroy” will tear down the Fastly service, GCE instance, and GCE firewall.

As Seth illustrated, Terraform is a great tool for configuring and managing Fastly services, letting you use the Fastly API without needing to know its details. Together, Fastly and Terraform help you automate deployments and orchestrate services from multiple cloud providers in a single configuration.

Interested in learning more about Fastly + Terraform? Join HashiCorp’s Seth Vargo and our own Patrick Hamann at their workshop on CI/CD at Altitude NYC.