If your application is on the internet, chances are it has been subjected to nefarious automation. These events can take the form of many different attacks, including content scraping, credential stuffing, application DDoS, web form abuse, token guessing, and more. The software that automates these tasks is known as a bot, and the malicious use of automated tooling can be categorized as automated threats. This type of nefarious automation can originate from a wide range of actors: usually cybercrime organizations, but occasionally individuals seeking personal gain (eBay bid sniping, for example).

To understand and mitigate nefarious automation before it impacts your business, it’s essential to be cognizant of adversarial tactics and techniques. Emulating both simple and complex automated attacks is an important capability that you can use to gain further insights and validate that security controls operate the way you believe they should.

In this blog post, we’ll cover different categories of bots, the methods and tools red teams can use for testing, how to choose between them, and how to build on their functionality to increase sophistication. We’ll also cover defensive security measures that make attacks more difficult and costly.

Non-Browser-Based Clients

The most conventional form of automation uses some type of non-browser-based client, such as a script written in Python or Go or a command-line tool like curl. These bots range from “off-the-shelf” vendor tooling to open source projects and can be used for scraping data, automated pentesting, and much more. For instance, searching #pentest on GitHub should yield some interesting results.

The snippet below shows an example of a bot developed in Python that leverages the requests and BeautifulSoup libraries for scraping and parsing data from a website:

```python
import requests
from bs4 import BeautifulSoup

# Fetch the page; requests does not execute any JavaScript it contains
page = requests.get(url='https://example.com', timeout=10)

# Parse the raw HTML into a navigable tree
content = BeautifulSoup(page.content, 'html.parser')
```

Unlike a normal browser, non-browser-based clients like this script don’t execute JavaScript. Advanced anti-bot solutions use JavaScript to automatically collect and report attributes related to the browser and system configuration, an approach referred to as client-side detection. If this data is missing from a client’s requests, it’s a good indication that automation is at play.
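Here is a minimal server-side sketch of that idea in Python. It assumes a hypothetical design with three steps:

- The client-side script posts its telemetry to the server.
- The server responds by issuing a signed token in a cookie named `cs_token`.
- Later requests are checked for that token.

The cookie name, secret, and token format are illustrative assumptions, not how any particular anti-bot product works. A client like the requests script above never runs the JavaScript, so it never obtains a valid token.

```python
import hashlib
import hmac

# Hypothetical server-side secret; in practice load it from a secrets store.
SECRET_KEY = b"replace-with-a-real-secret"
# Hypothetical cookie name, issued after the client-side script reports its telemetry.
TOKEN_COOKIE = "cs_token"


def issue_token(session_id: str) -> str:
    """Sign the session ID once the client-side script has posted its telemetry."""
    return hmac.new(SECRET_KEY, session_id.encode(), hashlib.sha256).hexdigest()


def has_valid_client_token(session_id: str, cookies: dict) -> bool:
    """Return False when the token is missing or forged, e.g. a client that never ran the JavaScript."""
    token = cookies.get(TOKEN_COOKIE)
    if token is None:
        return False
    expected = issue_token(session_id)
    # compare_digest avoids leaking timing information about the expected value
    return hmac.compare_digest(token.encode(), expected.encode())


if __name__ == "__main__":
    sid = "session-123"
    print(has_valid_client_token(sid, {TOKEN_COOKIE: issue_token(sid)}))  # True
    print(has_valid_client_token(sid, {}))  # False: no telemetry was ever reported
```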

Browser Automation Frameworks

Even though non-browser-based tools can be extremely useful for attackers, they’re often easier for defenders to detect. One of the biggest challenges for bot developers is that security solutions keep identifying and blocking their bots. To avoid detection, their best bet is to mimic human traffic.

Browser automation frameworks like Puppeteer and Playwright have become go-to solutions for creating human-like bots. Because they drive real browsers, they can execute JavaScript code. They can also automate the complete set of tasks a real user performs, such as loading a page, moving the mouse, or typing text.

You can use these automation frameworks to instrument browsers with or without a graphical user interface (GUI). Browsers without a GUI are referred to as “headless” browsers. They offer the same functionality as regular browsers but need less CPU and RAM, since they don’t have to render graphics or other user interface components. The most popular headless browser is Headless Chrome.

The snippet below is an example of a Puppeteer script that navigates to https://example.com/ and saves a screenshot as example.png in headless mode:

```javascript
const puppeteer = require('puppeteer');

(async () => {
  // Launch the browser (headless by default) and open a new tab
  const browser = await puppeteer.launch();
  const page = await browser.newPage();

  // Navigate to the page and save a screenshot of it
  await page.goto('https://example.com/');
  await page.screenshot({ path: 'example.png' });

  await browser.close();
})();
```

Even though writing code can be fun, developing these scripts takes skill and time. Luckily, there are tools that can help. For example, Google introduced Recorder, available in Chrome Developer Tools starting with Chrome version 97. It lets you record and replay user flows in the Recorder panel and export them as a Puppeteer script without writing a single line of code.

Bots for Purchase

When thinking about attackers, you may fall back on the stereotype of an evil genius who can crack into systems in minutes with a few lines of code. While this stereotype may contain some truth, it’s mostly exaggerated and unrealistic. In reality, cybercriminals often look for the path of least resistance. Bots are notoriously difficult to set up and run, and most bad actors would prefer to reach their objectives as quickly as possible. So, as you might have guessed, there’s a marketplace for buying and selling bots.

One industry where this market thrives is retail, with “sneaker bots.” When sneakers are released in limited quantities, it’s often a race to see which sneakerhead can check out fastest before they sell out. Sneaker bots are designed to make this process near-instantaneous, giving their users an advantage over buyers completing their transactions manually. These kinds of scalping bots extend beyond sneakers and are used to purchase any item with limited availability.

These bot services are often not illegal, and their makers frequently announce product releases on Twitter. However, they often violate the terms and conditions defined by many websites. They can damage brand reputation and loyalty by unfairly limiting customers’ ability to buy a product. They can also increase infrastructure costs and degrade site performance by overloading a site.

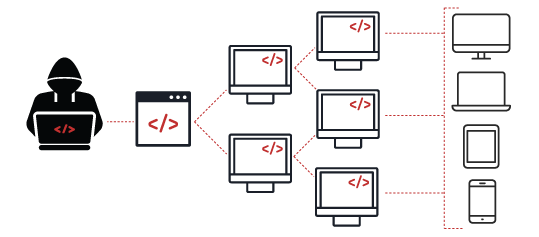

Botnets, groups of machines infected with malware, are another type of bot commonly offered for sale. Forums such as RaidForums, a cybercrime forum that was recently seized, have been reported as marketplaces for buying and selling botnets. Botnets can grow to encompass a massive number of bots. A large botnet of millions of machines might be used to launch a distributed denial-of-service (DDoS) attack. A small botnet might instead pull off a targeted intrusion into a valuable system, such as one holding government or financial data.

Emulating Nefarious Automation

So far, we have presented different categories of bots: non-browser-based clients, bots that leverage headless browsers, and bots you can purchase. In this section, we’ll cover methods and tools red teams can use, how to choose between them, and how to build on their functionality to increase sophistication.

Phase 1

Let’s start with our first example of a bot developed in Python that leverages the requests and BeautifulSoup libraries for scraping and parsing data from websites:

```python
import requests
from bs4 import BeautifulSoup

# Fetch the page and parse the HTML
page = requests.get(url='https://example.com/', timeout=10)
content = BeautifulSoup(page.content, 'html.parser')
```

This basic script makes a GET request and parses the response into a BeautifulSoup object. Let’s run this script and take a look at the HTTP request that was sent:

```http
GET / HTTP/2
Host: example.com
User-Agent: python-requests/2.28.0
Accept-Encoding: gzip, deflate
Accept: */*
Connection: keep-alive
```

There are a few things to point out in this request. First, the User-Agent header advertises python-requests/2.28.0. Second, the Accept header is set to */*. The Accept header tells the server which content types the client is able to handle, and the */* value means that any type is acceptable.
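A defender can turn these observations into a simple rule. The sketch below flags requests in two cases:

- The User-Agent advertises a common HTTP library.
- The Accept header is a bare */*.

The marker list is a hypothetical, non-exhaustive example. The Accept check only makes sense for top-level page navigations, since browsers legitimately send */* for some subresource and fetch() requests.

```python
# Hypothetical, non-exhaustive substrings that common HTTP libraries put in their default User-Agent.
AUTOMATION_UA_MARKERS = ("python-requests", "python-urllib", "curl/", "go-http-client")


def self_advertised_automation(headers: dict) -> list:
    """Return reasons a page request looks like it came from an HTTP library rather than a browser."""
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value for name, value in headers.items()}
    reasons = []

    user_agent = normalized.get("user-agent", "").lower()
    for marker in AUTOMATION_UA_MARKERS:
        if marker in user_agent:
            reasons.append(f"user-agent advertises {marker}")

    # Browsers navigating to a page send a detailed Accept header rather than a bare */*.
    if normalized.get("accept", "").strip() == "*/*":
        reasons.append("accept is */*")
    return reasons


if __name__ == "__main__":
    captured = {
        "Host": "example.com",
        "User-Agent": "python-requests/2.28.0",
        "Accept-Encoding": "gzip, deflate",
        "Accept": "*/*",
        "Connection": "keep-alive",
    }
    print(self_advertised_automation(captured))
    # ['user-agent advertises python-requests', 'accept is */*']
```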

Now let's make the same request in a Chrome browser:

```http
GET / HTTP/2
Host: example.com
Sec-Ch-Ua: "-Not.A/Brand";v="8", "Chromium";v="102"
Sec-Ch-Ua-Mobile: ?0
Sec-Ch-Ua-Platform: "macOS"
Upgrade-Insecure-Requests: 1
User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.61 Safari/537.36
Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9
Sec-Fetch-Site: none
Sec-Fetch-Mode: navigate
Sec-Fetch-User: ?1
Sec-Fetch-Dest: document
Accept-Encoding: gzip, deflate
Accept-Language: en-US,en;q=0.9
```

Clearly, there’s quite a difference between the two requests. Not only are the User-Agent and Accept headers different, but the browser request also includes nine headers that the Python bot’s request lacks. Fingerprinting-based approaches use features like these to classify whether a client is a human or a bot. A defender can quickly use this self-advertised information to block bot traffic.
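One way to quantify that difference is to compare the header names a request carries against a reference browser profile. In this sketch, the profile is simply the Chrome request captured above. A real profile would need to account for browser versions and request types, so treat the lists as illustrative.

```python
# Header names from the two captured requests above (an illustrative reference profile).
PYTHON_HEADERS = ["Host", "User-Agent", "Accept-Encoding", "Accept", "Connection"]
CHROME_HEADERS = [
    "Host", "Sec-Ch-Ua", "Sec-Ch-Ua-Mobile", "Sec-Ch-Ua-Platform",
    "Upgrade-Insecure-Requests", "User-Agent", "Accept", "Sec-Fetch-Site",
    "Sec-Fetch-Mode", "Sec-Fetch-User", "Sec-Fetch-Dest", "Accept-Encoding",
    "Accept-Language",
]


def missing_browser_headers(observed, expected):
    """Return the expected header names absent from a request, compared case-insensitively."""
    seen = {name.lower() for name in observed}
    return [name for name in expected if name.lower() not in seen]


if __name__ == "__main__":
    missing = missing_browser_headers(PYTHON_HEADERS, CHROME_HEADERS)
    print(len(missing))  # 9
    print(missing)
```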

With that in mind, we can modify our Python bot to resemble the request that was made through our browser:

```python
import requests
from bs4 import BeautifulSoup

# Headers copied from the Chrome request above
headers = {
    'Sec-Ch-Ua': '"-Not.A/Brand";v="8", "Chromium";v="102"',
    'Sec-Ch-Ua-Mobile': '?0',
    'Sec-Ch-Ua-Platform': '"macOS"',
    'Upgrade-Insecure-Requests': '1',
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.61 Safari/537.36',
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
    'Sec-Fetch-Site': 'none',
    'Sec-Fetch-Mode': 'navigate',
    'Sec-Fetch-User': '?1',
    'Sec-Fetch-Dest': 'document',
    'Accept-Encoding': 'gzip, deflate',
    'Accept-Language': 'en-US,en;q=0.9',
}

# Pass the headers explicitly; without headers=headers they would never be sent
page = requests.get(url='https://example.com/', headers=headers, timeout=10)
content = BeautifulSoup(page.content, 'html.parser')
```

Let’s run this script and take a look at the HTTP request that was sent:

```http
GET / HTTP/2
Host: example.com
User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.61 Safari/537.36
Accept-Encoding: gzip, deflate
Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9
Connection: keep-alive
Sec-Ch-Ua: "-Not.A/Brand";v="8", "Chromium";v="102"
Sec-Ch-Ua-Mobile: ?0
Sec-Ch-Ua-Platform: "macOS"
Upgrade-Insecure-Requests: 1
Sec-Fetch-Site: none
Sec-Fetch-Mode: navigate
Sec-Fetch-User: ?1
Sec-Fetch-Dest: document
Accept-Language: en-US,en;q=0.9
```

At a glance, it looks like all the headers are now included in the request. On closer inspection, however, the script didn’t preserve the browser’s header order. requests sends its default headers (User-Agent, Accept-Encoding, Accept, and Connection) first and appends the remaining ones afterward.

Many HTTP libraries don’t guarantee any particular order, because the HTTP specification states that the order of header fields with different names is not significant. Major browsers, however, send their headers in a consistent order, and this subtle difference is another signal that can help identify automated traffic. Fortunately, the Python requests library can be made to preserve our header order, for example by assigning the ordered headers dictionary to a requests.Session instead of relying on its defaults.
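On the defensive side, header order can be checked by comparing the relative order of the headers a request shares with a reference profile. This sketch uses the Chrome capture above as the reference. It ignores headers the profile doesn’t list, such as Connection. Real browser orderings vary by version and request type, so the lists are illustrative.

```python
# Header order from the Chrome capture (reference) and from the modified Python script.
CHROME_ORDER = [
    "Host", "Sec-Ch-Ua", "Sec-Ch-Ua-Mobile", "Sec-Ch-Ua-Platform",
    "Upgrade-Insecure-Requests", "User-Agent", "Accept", "Sec-Fetch-Site",
    "Sec-Fetch-Mode", "Sec-Fetch-User", "Sec-Fetch-Dest", "Accept-Encoding",
    "Accept-Language",
]
SCRIPT_ORDER = [
    "Host", "User-Agent", "Accept-Encoding", "Accept", "Connection",
    "Sec-Ch-Ua", "Sec-Ch-Ua-Mobile", "Sec-Ch-Ua-Platform", "Upgrade-Insecure-Requests",
    "Sec-Fetch-Site", "Sec-Fetch-Mode", "Sec-Fetch-User", "Sec-Fetch-Dest",
    "Accept-Language",
]


def order_matches(observed, reference):
    """Check that headers present in both lists appear in the same relative order."""
    reference_lower = [name.lower() for name in reference]
    # Keep only headers the reference profile knows about, in the order they were received.
    shared = [name.lower() for name in observed if name.lower() in reference_lower]
    # The same headers, rearranged into the reference profile's order.
    expected = sorted(shared, key=reference_lower.index)
    return shared == expected


if __name__ == "__main__":
    print(order_matches(CHROME_ORDER, CHROME_ORDER))  # True
    print(order_matches(SCRIPT_ORDER, CHROME_ORDER))  # False
```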

Phase 2

As we look to increase sophistication to evade detection, bear in mind that security solutions also rely on behavioral approaches to distinguish bots from humans, because bots usually behave quite differently from legitimate users. To keep track of a user’s behavior during a session, these approaches often link that behavior to an IP address (IP reputation).

Behavioral patterns that can be linked to an IP address include, but are not limited to:

Total number of requests

Total number of pages visited

The time between page views

The sequence in which pages are visited

Types of resources loaded on pages
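A minimal sketch of how these per-IP features might be aggregated from an access log is shown below. The event format (IP, timestamp in seconds, path) and both thresholds are hypothetical. The resource-type signal is omitted for brevity.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical thresholds; tune them against how your legitimate users actually behave.
MAX_REQUESTS = 100
MIN_MEAN_GAP_SECONDS = 1.0


def summarize_by_ip(events):
    """Aggregate (ip, timestamp_seconds, path) events into per-IP behavioral features."""
    by_ip = defaultdict(list)
    for ip, timestamp, path in events:
        by_ip[ip].append((timestamp, path))

    summary = {}
    for ip, hits in by_ip.items():
        hits.sort()  # order each IP's requests by time
        times = [timestamp for timestamp, _ in hits]
        gaps = [later - earlier for earlier, later in zip(times, times[1:])]
        summary[ip] = {
            "requests": len(hits),                              # total number of requests
            "distinct_pages": len({path for _, path in hits}),  # total number of pages visited
            "mean_gap": mean(gaps) if gaps else None,           # time between page views
            "sequence": [path for _, path in hits],             # order in which pages were visited
        }
    return summary


def is_suspicious(features):
    """Flag an IP that sends too many requests or moves between pages faster than a person plausibly could."""
    if features["requests"] > MAX_REQUESTS:
        return True
    gap = features["mean_gap"]
    return gap is not None and gap < MIN_MEAN_GAP_SECONDS


if __name__ == "__main__":
    log = [("198.51.100.7", i * 0.2, f"/product/{i}") for i in range(10)]
    log += [("203.0.113.5", 0.0, "/"), ("203.0.113.5", 12.0, "/product/1")]
    for ip, features in summarize_by_ip(log).items():
        print(ip, features["requests"], is_suspicious(features))
```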

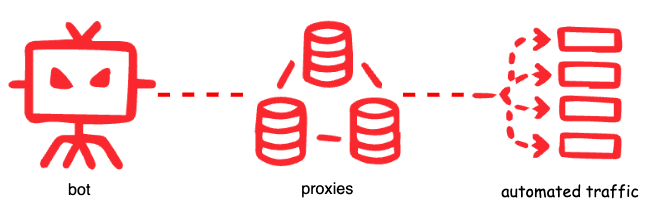

Building on our example script, if we wanted to scrape large amounts of data, one of these patterns could get us detected or blocked. To avoid being rate-limited or blocked, we’ll rotate through IP addresses every few requests. This can be achieved with a proxy server, which acts as an intermediary between a client and the rest of the internet. When a request is sent through a proxy, the target sees the proxy’s IP address, which masks the IP address of the originating client (or bot).
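For emulation against your own infrastructure, a rotating pool can be as simple as a generator that hands out a new proxy every few requests. The proxy URLs below are hypothetical documentation-range addresses. The returned mapping follows the scheme-keyed format that the requests library accepts through its `proxies` argument.

```python
from itertools import cycle

# Hypothetical proxy endpoints (documentation-range addresses); substitute your own test proxies.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def rotating_proxies(pool, requests_per_proxy=3):
    """Yield a requests-style proxies mapping, switching to the next proxy every few requests."""
    for proxy in cycle(pool):
        for _ in range(requests_per_proxy):
            # requests accepts a dict keyed by URL scheme via its proxies= argument
            yield {"http": proxy, "https": proxy}


if __name__ == "__main__":
    proxies = rotating_proxies(PROXY_POOL, requests_per_proxy=2)
    for _ in range(5):
        print(next(proxies)["https"])
    # With the requests library, each call would pass the next mapping, e.g.:
    # requests.get(url, proxies=next(proxies), timeout=10)
```

Rotating after a fixed number of requests keeps per-IP counts below whatever threshold a defender might use. Randomizing `requests_per_proxy` would make the pattern less regular.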

The type of proxy you need depends on the restrictions you’re trying to bypass. IP addresses belonging to datacenters, VPNs, known proxy servers, and Tor networks are much more likely to stand out during analysis or to have already been flagged as suspicious. For that reason, most sophisticated bots tend to use lesser-known residential proxies. Because residential IP addresses are used by legitimate users, they have a better reputation than other types.
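Defenders commonly compare client IP addresses against feeds of datacenter, VPN, proxy, and Tor ranges. In this sketch, a few reserved documentation ranges stand in for such a feed; this is an assumption, since real lists are large and change frequently. A residential proxy address would not match, which is exactly why sophisticated bots prefer them.

```python
import ipaddress

# Hypothetical ranges standing in for a real datacenter/hosting IP feed.
DATACENTER_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_datacenter_ip(address: str) -> bool:
    """Return True if the address falls inside any listed datacenter network."""
    ip = ipaddress.ip_address(address)
    # Membership between an IPv4 address and an IPv6 network is simply False.
    return any(ip in network for network in DATACENTER_RANGES)


if __name__ == "__main__":
    for candidate in ("192.0.2.44", "203.0.113.9", "2001:db8::1"):
        print(candidate, is_datacenter_ip(candidate))
```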

However, before going to the effort of setting up proxy services, check whether the target application trusts request headers such as X-Forwarded-For, True-Client-IP, or X-Real-IP to identify the remote IP address of the connecting client. If it does, attackers can take advantage of this by supplying a spoofed IP address. In fact, the Fastly Security Research team contributed a feature to Nuclei that injects an arbitrary IP address into a chosen HTTP request header, in an attempt to exploit this flaw and emulate proxy behavior.
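The defensive fix is to honor forwarding headers only when the request actually arrived from one of your own proxies. The sketch below applies a common rule for X-Forwarded-For: walk the list from right to left and return the first address that isn’t a trusted proxy. The trusted network is a hypothetical placeholder. The same principle applies to True-Client-IP or X-Real-IP.

```python
import ipaddress
from typing import Optional

# Hypothetical: the network your own load balancers or CDN edge nodes connect from.
TRUSTED_PROXIES = [ipaddress.ip_network("10.0.0.0/8")]


def is_trusted(address: str) -> bool:
    """Return True only for well-formed addresses inside a trusted proxy network."""
    try:
        ip = ipaddress.ip_address(address)
    except ValueError:
        return False
    return any(ip in network for network in TRUSTED_PROXIES)


def client_ip(peer_address: str, x_forwarded_for: Optional[str]) -> str:
    """Resolve the client IP, trusting X-Forwarded-For only as far as our own proxies vouch for it."""
    # A request that didn't come through a trusted proxy can't vouch for any header value.
    if not is_trusted(peer_address) or not x_forwarded_for:
        return peer_address
    hops = [hop.strip() for hop in x_forwarded_for.split(",") if hop.strip()]
    # Each trusted proxy appends the address it received the request from, so walk right to left.
    for hop in reversed(hops):
        if not is_trusted(hop):
            return hop
    # Every listed hop is one of our proxies; fall back to the leftmost entry.
    return hops[0] if hops else peer_address


if __name__ == "__main__":
    # A spoofed "1.2.3.4" sent directly to the app is ignored.
    print(client_ip("198.51.100.23", "1.2.3.4"))
    # Behind our proxy, the spoofed entry is skipped in favor of the address the proxy saw.
    print(client_ip("10.0.0.5", "1.2.3.4, 198.51.100.23"))
```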

Alongside the IP address, TLS fingerprinting has become a prevalent way to identify the type of client communicating with a site. These fingerprints are derived from the parameters a client offers in its TLS ClientHello message, such as its supported cipher suites. More about TLS fingerprinting can be found in our blog post, The State of TLS Fingerprinting. This detection method has its caveats: because the client controls the contents of the ClientHello, a bot can change its fingerprint. Tools such as uTLS can be used to impersonate other clients’ TLS signatures.
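JA3 is one widely used example of such a fingerprint. It joins five fields from the ClientHello into a string and takes its MD5 hash, skipping GREASE values:

- TLS version
- Cipher suites
- Extensions
- Elliptic curves
- Point formats

The sketch below assumes those fields have already been parsed from a ClientHello as decimal integers. The sample values in the usage are made up.

```python
import hashlib


def is_grease(value: int) -> bool:
    """GREASE values (RFC 8701) look like 0x0a0a, 0x1a1a, ..., 0xfafa and are ignored by JA3."""
    return (value & 0x0F0F) == 0x0A0A and (value >> 8) == (value & 0xFF)


def ja3(version, ciphers, extensions, curves, point_formats):
    """Build the JA3 string and its MD5 digest from ClientHello fields."""
    def field(values):
        # Values within a field are dash-separated, with GREASE entries removed.
        return "-".join(str(v) for v in values if not is_grease(v))

    ja3_string = ",".join([
        str(version),
        field(ciphers),
        field(extensions),
        field(curves),
        field(point_formats),
    ])
    return ja3_string, hashlib.md5(ja3_string.encode()).hexdigest()


if __name__ == "__main__":
    # Made-up ClientHello values; 0x1a1a and 0x2a2a are GREASE and get dropped.
    string, digest = ja3(771, [0x1A1A, 4865, 4866], [0x2A2A, 0, 23], [29, 23], [0])
    print(string)  # 771,4865-4866,0-23,29-23,0
    print(digest)
```

Because every one of these fields comes from the client, a library like uTLS only has to replay another client’s ClientHello parameters to produce the same hash.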

Phase 3

So far, the approaches we’ve covered rely on server-side features, and a bot solution with only server-side detection has limitations. Unlike non-browser-based bots, advanced bots use browser automation frameworks that drive real browsers. As a result, they present the same HTTP and TLS fingerprints as legitimate users and can execute JavaScript code.

To detect advanced bots, client-side detection is essential. Client-side features are collected using JavaScript and include events and attributes such as mouse movements, key presses, screen resolution, and device properties. These scripts test for the presence of attributes that are characteristic of headless browsers or instrumentation frameworks.
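Server-side, the collected attributes might be evaluated with a few simple rules like the ones below. The payload field names (`webdriver`, `userAgent`, `mouseMoves`, `keyPresses`, `screenWidth`, `screenHeight`) are a hypothetical schema for what a client-side script could report. The rules are illustrative rather than a complete detection model.

```python
def client_side_flags(payload: dict) -> list:
    """Return headless or automation indicators found in a (hypothetical) client-side telemetry payload."""
    flags = []
    # navigator.webdriver reports true when the browser is flagged as automation-controlled.
    if payload.get("webdriver") is True:
        flags.append("navigator.webdriver is true")
    # Default Headless Chrome builds advertise themselves in the user agent.
    if "HeadlessChrome" in payload.get("userAgent", ""):
        flags.append("headless user agent")
    # A session with no pointer or keyboard events at all is unusual for a human visitor.
    if payload.get("mouseMoves", 0) == 0 and payload.get("keyPresses", 0) == 0:
        flags.append("no mouse or keyboard activity")
    if payload.get("screenWidth", 0) == 0 or payload.get("screenHeight", 0) == 0:
        flags.append("missing screen dimensions")
    return flags


if __name__ == "__main__":
    report = {
        "webdriver": True,
        "userAgent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
                     "(KHTML, like Gecko) HeadlessChrome/103.0.5058.0 Safari/537.36",
        "mouseMoves": 0,
        "keyPresses": 0,
        "screenWidth": 800,
        "screenHeight": 600,
    }
    print(client_side_flags(report))
```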

For instance, one client-side detection technique tests which audio and video codecs the browser supports. The snippet below checks for a supported media type by using the canPlayType method:

```javascript
// canPlayType returns "probably", "maybe", or "" (empty string) for an unsupported type
function supportsAac() {
  const audioElt = document.createElement('audio');
  return audioElt.canPlayType('audio/aac');
}
```

In a legitimate Chrome browser, this should return the value 'probably'. With this in mind, let’s modify the Puppeteer script from the earlier example to verify that it returns the value we expect:

```javascript
const puppeteer = require('puppeteer');

(async () => {
  const browser = await puppeteer.launch();
  const page = await browser.newPage();
  await page.goto('https://example.com');
  await page.screenshot({ path: 'example.png' });

  // Run the codec check inside the page context
  const audioElt = await page.evaluate(() => {
    const obj = document.createElement('audio');
    return obj.canPlayType('audio/aac');
  });
  console.log(audioElt);

  await browser.close();
})();
```

Unfortunately, it returns an empty value. In addition, Headless Chrome can be identified server-side by its user agent:

```text
Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) HeadlessChrome/103.0.5058.0 Safari/537.36
```

By default, Puppeteer doesn’t mask features that are obvious detection signals; rather, it exposes the fact that it’s automated. Puppeteer’s primary purpose is automating website tests, not creating human-like bots that evade detection. However, as might be expected, there’s an open source library called puppeteer-extra with a stealth plugin. The plugin lets bot developers easily override these defaults and applies various techniques to make headless Puppeteer harder to detect.

The main difference is simply that you don’t import puppeteer, but puppeteer-extra instead:

```javascript
const puppeteer = require('puppeteer-extra');

// Register the stealth plugin before launching the browser
puppeteer.use(require('puppeteer-extra-plugin-stealth')());
```

You should now be able to verify that the bot’s user agent and codec support match those of a legitimate Chrome browser:

```javascript
// Inside the same async function, after page.goto(...)
const userAgent = await page.evaluate(() => {
  return navigator.userAgent;
});
console.log(userAgent);
// Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.5109.0 Safari/537.36

const audioElt = await page.evaluate(() => {
  const obj = document.createElement('audio');
  return obj.canPlayType('audio/aac');
});
console.log(audioElt);
// probably
```

There are still plenty of other ways to detect this orchestrated browser’s behavior. That’s why it’s important to understand the current limitations of your application’s protections and to think about what you could do to further minimize that risk.
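One family of such checks compares signals across layers, since an automated browser has to keep all of them consistent at once. The sketch below makes three cross-checks:

- The HTTP User-Agent against the navigator.userAgent reported by client-side JavaScript.
- The Sec-Ch-Ua client hints against the User-Agent.
- AAC support against a claimed Chrome build.

The platform mapping and the client payload fields (`userAgent`, `aac`) are hypothetical. These are illustrative rules, not a description of what the stealth plugin does or doesn’t patch.

```python
import re

# Hypothetical mapping from Sec-Ch-Ua-Platform values to the OS token expected in the User-Agent.
PLATFORM_TOKENS = {'"macOS"': "Mac OS X", '"Windows"': "Windows NT", '"Linux"': "Linux"}


def consistency_flags(http_headers: dict, client: dict) -> list:
    """Compare what the HTTP layer claims with what client-side JavaScript reported."""
    headers = {name.lower(): value for name, value in http_headers.items()}
    ua = headers.get("user-agent", "")
    flags = []

    # The header and navigator.userAgent should be identical for a real browser.
    reported_ua = client.get("userAgent")
    if reported_ua and reported_ua != ua:
        flags.append("navigator.userAgent differs from User-Agent header")

    # Client hints are structured-header strings, so browsers send the platform quoted.
    platform = headers.get("sec-ch-ua-platform")
    if platform is not None and not (platform.startswith('"') and platform.endswith('"')):
        flags.append("Sec-Ch-Ua-Platform is not a quoted string")
    token = PLATFORM_TOKENS.get(platform)
    if token and token not in ua:
        flags.append("Sec-Ch-Ua-Platform disagrees with User-Agent")

    # The Chromium brand version in Sec-Ch-Ua should match the major version in the User-Agent.
    ua_major = re.search(r"Chrome/(\d+)", ua)
    brands = headers.get("sec-ch-ua", "")
    if ua_major and brands and f'"Chromium";v="{ua_major.group(1)}"' not in brands:
        flags.append("Sec-Ch-Ua version disagrees with User-Agent")

    # Branded Chrome reports "probably" for AAC; an empty string suggests a build without it.
    if ua_major and client.get("aac") == "":
        flags.append("claims Chrome but cannot play AAC")
    return flags
```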

Summary

Detecting and blocking nefarious automation requires considerable analysis and sophistication. Not all bot traffic is malicious. Sometimes it’s expected, such as when a business provides client libraries in multiple languages to help facilitate access to its services. That’s why it’s crucial to understand the types of traffic and devices that you expect to connect to your infrastructure. Whether automated traffic is good or bad depends on your environment and on what you consider a departure from known, acceptable behavior.

Emulating nefarious automation against your own infrastructure can help teams gain further insights into what is defined as acceptable. By understanding the different categories of bots and experimenting with adversarial techniques, you can be better equipped to mitigate nefarious automation and protect what’s most important to your organization.