Build a network that can scale indefinitely, be managed by a small crew of skilled ops and network engineers, and handle current web traffic and the next generation of protocols. Sounds impossible, right?

Not true.

When planning a major content delivery network, you’d think that it would make sense to put your equipment where the most people are, right?

Not always.

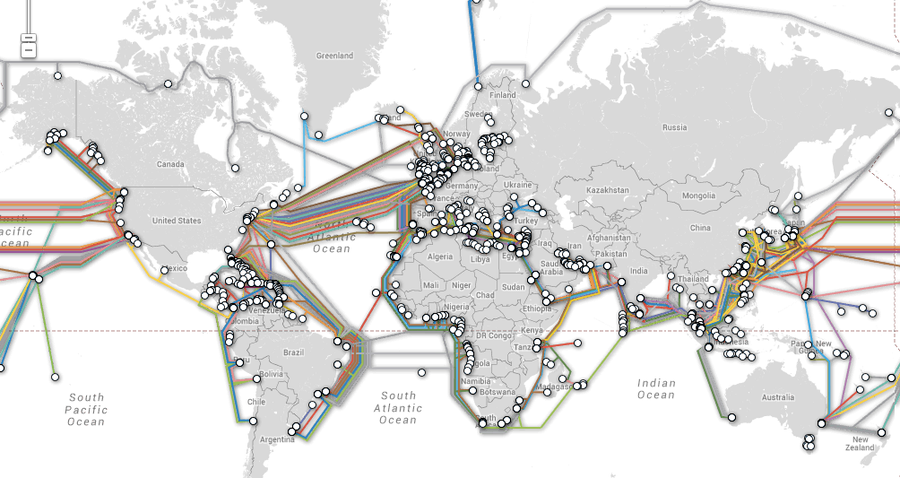

Around the world, Around the world

The world runs on fiber, and fiber is expensive. Running it from country to country or under the ocean is difficult and takes time, manpower, and money. Plus, a few fixed locations, such as Singapore, Frankfurt, and San Jose, already contain the bulk of the incoming fiber links due to their geopolitical power, protected landings, or population density.

At Fastly, we certainly choose our locations based on where the fiber lives and how quickly we can get onto the major backbones that feed “eyeball networks” around the world (eyeball networks are providers such as Comcast, Verizon, Deutsche Telekom, and Telia that serve end users directly). But speed and fiber aren’t our only considerations.

When our first video-on-demand (VOD) and streaming customers came online, we realized that we needed to re-examine how we forecast future bandwidth needs. In particular, we realized that we needed to factor in how our customers use our services, not just where their users are. For example, in Europe, watching computer gaming streams late at night is hugely popular, with hundreds of thousands of people viewing them on their phones and other mobile devices. These traffic spikes, which are somewhat unpredictable, put an extra strain on our transit providers.

These usage patterns forced us to look at how we could better serve such a large amount of traffic without expanding our footprint to the point of putting a POP in every town in Europe. To do this, we turned to larger, more robust POP designs that let us scale up with minimal buildout on our part.

Discovery

Potential growth and capacity needs are just as vital as fiber access and usage patterns. When projecting 3, 6, or even 12 months out, we look at both our current customers’ usage and how we expect our world to grow. We then plan how we can stay ahead of that projected growth by a comfortable margin.
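To make the idea of projecting 3, 6, and 12 months out concrete, here is a minimal sketch of that kind of arithmetic. It is not our actual forecasting model: the compound monthly growth assumption, the function names, and every number in it (current peak, growth rate, headroom) are hypothetical and chosen purely for illustration.

```python
def project_peak_gbps(current_peak_gbps, monthly_growth, months):
    """Compound today's peak forward at a fixed monthly growth rate (an assumption)."""
    return current_peak_gbps * (1 + monthly_growth) ** months


def required_capacity_gbps(projected_peak_gbps, headroom):
    """Capacity needed so the projected peak leaves `headroom` (0..1) of it unused."""
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    return projected_peak_gbps / (1 - headroom)


# Hypothetical inputs, not real traffic figures.
CURRENT_PEAK_GBPS = 100.0   # today's observed peak
MONTHLY_GROWTH = 0.10       # assumed 10% month-over-month growth
HEADROOM = 0.30             # assumed "comfortable margin": 30% spare at peak

for months in (3, 6, 12):
    peak = project_peak_gbps(CURRENT_PEAK_GBPS, MONTHLY_GROWTH, months)
    need = required_capacity_gbps(peak, HEADROOM)
    print(f"{months:>2} months out: projected peak {peak:7.1f} Gbps, "
          f"provision at least {need:7.1f} Gbps")
```

A fixed growth rate is the simplest possible model. Spiky workloads like live streams would argue for sizing against observed peaks rather than averages.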

Once we have determined the appropriate city or general locale for a new POP, we look at the overall capacity that transit providers can offer there. We work through factors such as router diversity, multiple physical fiber paths, and other network protections. This helps us decide on a specific physical location for our datacenter.

We then move on to planning the site, including our rack space, the power needs, any security concerns we have, and any local political considerations that might make it harder to get equipment in and out of a particular country.

Harder, better, faster, stronger

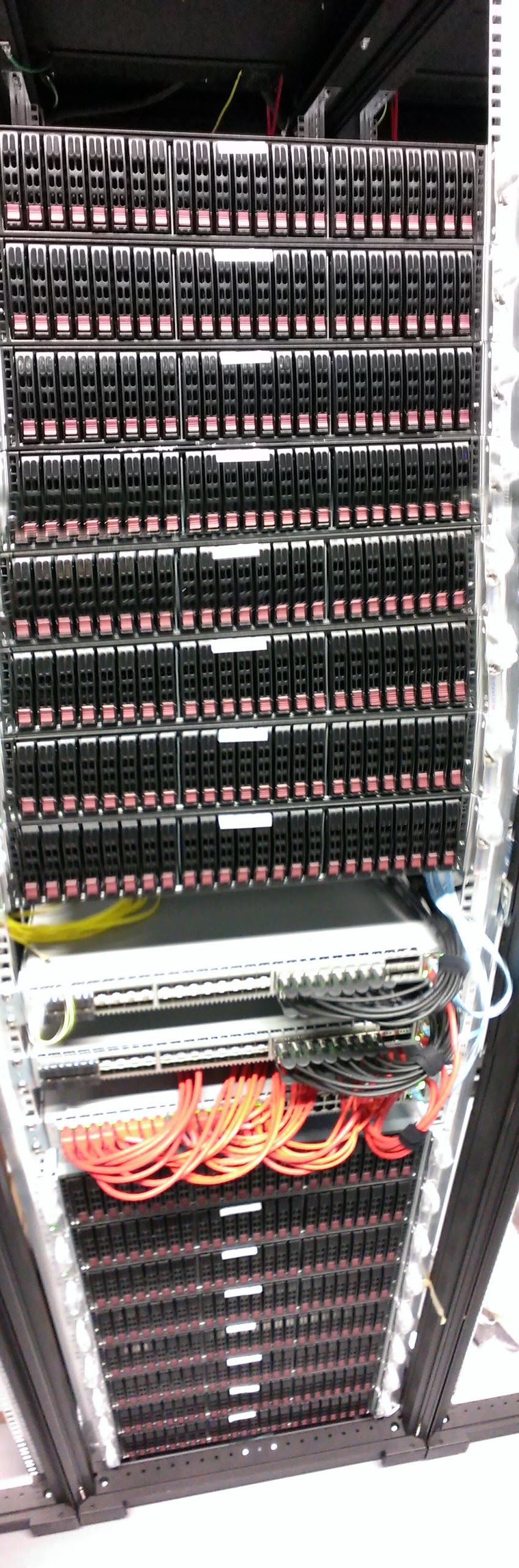

After deciding on a datacenter, the Fastly team negotiates for support services for power, rack and cage space, and out-of-band management internet connections. Anyone who has ever colocated even a single server knows this pain, and I won’t go into it in depth. But just for you stats geeks, our average buildout now involves a footprint of two 44U racks, with 208 V 3-phase power delivery to handle our current load and future needs. We also require two completely out-of-band internet links to these racks to provide us with an emergency access point for maintenance needs.
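For the stats geeks who want to see what a 208 V 3-phase feed buys you, here is a rough sketch of the standard three-phase power formula, P = √3 × V × I × PF. Only the 208 V figure comes from our buildouts. The 30 A breaker size, the 80% continuous-load derating, the unity power factor, and the 0.5 kW per-server draw are assumptions for illustration, so check your own circuit and hardware specs.

```python
import math


def three_phase_kw(line_voltage, amps, power_factor=1.0, derate=0.8):
    """Usable power in kW for a three-phase circuit: sqrt(3) * V * I * PF * derate.

    `derate` models the common practice of loading a breaker to only 80%
    for continuous loads (an assumption here, not a universal rule).
    """
    return math.sqrt(3) * line_voltage * amps * power_factor * derate / 1000.0


def servers_per_circuit(budget_kw, server_kw):
    """How many servers of a given draw fit within the power budget."""
    return int(budget_kw // server_kw)


# 208 V is from the text; 30 A and 0.5 kW per server are hypothetical.
budget = three_phase_kw(line_voltage=208, amps=30)
print(f"Usable power per circuit: {budget:.2f} kW")
print(f"Servers at 0.5 kW each:   {servers_per_circuit(budget, 0.5)}")
```

Running the same numbers for a larger breaker or a denser server shows quickly whether rack space or power is the limit you hit first.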

With our buildouts and our larger full-cage setups, we can put a tremendous amount of tier 1 and tier 1.5 transit into geographically centralized locations to better serve our clients via fast origin pulls and fast last-mile delivery. The tier 1 providers include Level 3, Cogent, NTT, and Pacnet: the carriers that move most of the traffic on the internet we work and live on today.

One more time

From there, we work with our server vendor to get hardware delivered to our new datacenter. As our network and operations teams have grown, we’ve developed quicker methods of pushing remote images out and standing up multiple racks in the span of mere hours. For most installations, we no longer need to send a Fastly employee to configure hardware properly on site.

This includes our networking gear from Arista. Using both tried-and-true 7150 platform gear and their brand new 7300 series chassis, we push the edge of what hardware and software can do to kill every last millisecond of latency that we can. Using EOS (Arista’s Extensible Operating System) to help power our edge equipment, we’re able to offload some of our networking tasks directly to our borders without compromising security in the process. This offloading lets our hardware focus on delivering web content and helps shorten our maintenance windows. Also, the large backplanes of the Arista hardware allow us to install more transit providers and reach very high bandwidth densities per rack. The modular fashion in which our systems are designed also means we can easily drop in support equipment (be it more caches, video ingest, or additional transit) without needing to take down the whole site in the process.

In the end, we have a solid team of engineers and project managers who spend their time getting these pieces all flowing in the right order and to the right sites, multiple times each month. At our current growth rate, we’re bringing an average of 1.5 POPs online each month, and this will only increase as time goes on. Growth drives growth, and as the internet grows, so will we.

Map Image Source: TeleGeography