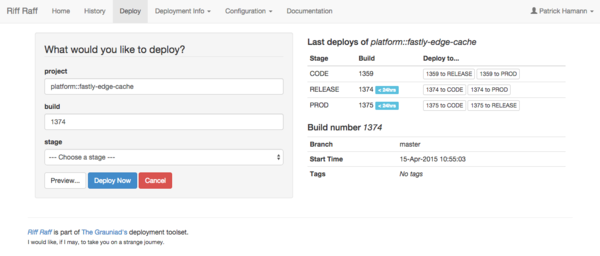

A couple of months ago at a customer dinner in London, Patrick Hamann (then of the Guardian, now with the Financial Times) gave a great presentation about the Guardian’s technology stack, their business challenges, and the way their content was deployed. One of the slides really caught my attention:

The slide shows Riff-Raff, an open source deployment tool that the Guardian created and uses for its deployments. It implies a number of interesting points:

Fastly is clearly considered to be part of the stack

Fastly configs are part of the normal deployment workflow

The Guardian has iterated on its Fastly configuration more than 1,300 times, which suggests that configuration changes aren’t onerous

We often say that Fastly can easily become an extension of your app. Patrick’s slide was a great reminder of that concept in action: it shows Fastly as part of the stack at the edge, integrated into the day-to-day workflow, for deployments and beyond.

Since Patrick’s talk, I’ve thought more and more about how Fastly (or any CDN, really) can become an extension of your app, and it’s become clear that three major components make this possible: caching, control, and visibility. Below, I’ll break down the high-level concepts behind each of them.

Caching: flexibility, long tail content, and Instant Purge

At its very core, a CDN is a distributed HTTP caching engine, and Fastly is no different. Anyone who’s deployed caching locally in front of their servers can attest to its performance benefits, both for end users and for the infrastructure behind it. It goes without saying, then, that any CDN should naturally extend your internal caching infrastructure to the network edge.

Varnish for flexibility and granularity

Many Fastly customers use Varnish as the caching layer in front of their infrastructure. For them, it’s natural to think of Fastly as an extension of their caching system: a hierarchical caching model that stretches out from the data center and moves content closer to the application’s users. Caching policies deployed locally at their servers can be replicated to the edge, carrying the benefits of caching out to Fastly’s distributed network of Varnish caches.

Fastly’s integration of Varnish lets our customers use the full power of this caching engine all the way out to the network edge, as close to end users as possible, whether or not they run Varnish in their own data centers. Caching granularity, for example, is vital for full control over how things are cached. Varnish Configuration Language (VCL) lets you define your own cache keys. Custom cache keys let you do things like cache according to geolocation, or normalize user agents so that you can cache and serve different content to different classes of clients. This level of control over what is cached, how it’s cached, and how it’s served is essential to good edge caching.
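To make the idea of a custom cache key concrete, here is a small Python sketch of the logic (on Fastly itself this would be written in VCL, not Python). It assumes a hypothetical three-way device classification and a country code that comes from a geolocation lookup; the names `normalize_user_agent` and `cache_key` are illustrative only. The key starts from Host and URL, which is what Varnish hashes by default, and adds the two extra dimensions.

```python
# Hypothetical device buckets; a real service would express this in VCL
# (for example in vcl_recv/vcl_hash) and use a more careful classifier.
def normalize_user_agent(user_agent: str) -> str:
    """Collapse many distinct User-Agent strings into a few device classes."""
    ua = user_agent.lower()
    # Check tablets first: iPad User-Agents often also contain "Mobile".
    if "ipad" in ua or "tablet" in ua:
        return "tablet"
    if "mobile" in ua or "iphone" in ua or "android" in ua:
        return "mobile"
    return "desktop"


def cache_key(host: str, url: str, country: str, user_agent: str) -> str:
    """Build a cache key from Host and URL plus the extra dimensions we vary on."""
    # Host + URL mirrors what Varnish hashes by default; country and device
    # class are the hypothetical customizations described above.
    return "|".join([host.lower(), url, country.upper(), normalize_user_agent(user_agent)])
```

With this key, two phones in the same country share one cached object, while desktop and mobile visitors, or visitors in different countries, get separate variants. Bucketing user agents first is the important design choice: hashing the raw User-Agent string would fragment the cache into thousands of near-identical copies.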

Long tail content caching

Then there's long tail content (cacheable content that is seldom fetched, such as social media posts, profile pictures, and avatars) and how effectively it's cached at the network edge. One of the founding tenets of Fastly was maximizing cache hit ratios close to the end user, which meant building our network to cache more things at the edge. In traditional CDN caching architectures, this type of content would often be evicted from the very edge of the network and cached instead in dense mid-tier caching layers that were not necessarily close to the end user, or worse, evicted from the network altogether, sending requests all the way back to origin. As with any content, serving it from anywhere but the edge hurts performance, because it is no longer delivered from near the end user. At Fastly, our exclusive use of solid-state drives (SSDs) and the way we've architected our points of presence (POPs) let us maximize cacheability and serve more long tail content from the edge. So when we talk about cache hit ratios, we mean the ratio of hits at the edge, not somewhere deep in the network.
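A toy simulation can show why edge capacity matters for long tail content. This Python sketch uses a plain least-recently-used (LRU) cache, which is an assumption for illustration and not a description of Fastly's actual eviction policy or POP design. A few hot objects are requested constantly, while each long tail object is requested once per round; the sketch compares a small cache with one large enough to hold the whole working set.

```python
from collections import OrderedDict


class LRUCache:
    """Toy least-recently-used cache that counts hits and misses."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.items: "OrderedDict[str, None]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def request(self, key: str) -> None:
        if key in self.items:
            self.items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return
        self.misses += 1
        self.items[key] = None
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def make_trace(popular: int, long_tail: int, rounds: int) -> list:
    """Interleave a few hot objects with many objects requested once per round."""
    trace = []
    for _ in range(rounds):
        for i in range(long_tail):
            trace.append(f"hot-{i % popular}")
            trace.append(f"tail-{i}")
    return trace


trace = make_trace(popular=10, long_tail=200, rounds=3)
small, large = LRUCache(capacity=20), LRUCache(capacity=256)
for key in trace:
    small.request(key)
    large.request(key)
print(f"small: {small.hit_ratio:.3f}  large: {large.hit_ratio:.3f}")
```

In this made-up trace the small cache hits only on the hot objects, so every long tail request misses (a hit ratio of about 0.49), while the large cache also serves the long tail after the first round and reaches 0.825. The absolute numbers come from the synthetic trace; the point is that long tail hits depend on having room to keep seldom-requested objects at the edge.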

Purging at the edge

Finally, just as we focus on caching content at the edge with great granularity, we also need to focus on uncaching. Purging content from the edge is just as important as caching it there, and it is another major area where Fastly sets itself apart from traditional CDNs. Our Instant Purging infrastructure purges content from our global network of caches within 150 milliseconds. This level of control over cache consistency enables powerful usage models for Fastly's customers, one of the most important being the ability to cache and deliver event-driven content from the edge. We augment this with mechanisms such as instant Soft Purging (marking content as stale instead of removing it) and surrogate keys, which let customers purge groups of related objects at once. Together, these give customers full control over how their content is cached on the Fastly network and, when necessary, uncached.
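Surrogate keys and soft purging are easier to see in a toy model. The Python sketch below keeps an index from each surrogate key to the cached URLs tagged with it, so purging one key removes (or, with `soft=True`, marks stale) every related object at once. The class and method names are hypothetical, and this models the behavior rather than Fastly's implementation; the one detail taken from Fastly's conventions is that the `Surrogate-Key` response header carries space-separated keys.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    body: bytes
    surrogate_keys: frozenset
    stale: bool = False


class EdgeCache:
    """Toy cache with a surrogate-key index, for illustration only."""

    def __init__(self) -> None:
        self.entries: dict = {}  # url -> Entry
        self.by_key: dict = {}   # surrogate key -> set of urls

    def store(self, url: str, body: bytes, surrogate_key_header: str) -> None:
        # The Surrogate-Key response header lists keys separated by spaces.
        keys = frozenset(surrogate_key_header.split())
        self._unindex(url)  # drop any older copy and its key mappings
        self.entries[url] = Entry(body, keys)
        for key in keys:
            self.by_key.setdefault(key, set()).add(url)

    def _unindex(self, url: str) -> None:
        old = self.entries.pop(url, None)
        if old is not None:
            for key in old.surrogate_keys:
                self.by_key[key].discard(url)

    def purge_key(self, key: str, soft: bool = False) -> int:
        """Purge every object tagged with `key`; return how many matched."""
        urls = list(self.by_key.get(key, ()))
        for url in urls:
            if soft:
                # Soft purge: keep the object but mark it stale, so it can still
                # be served if stale serving is configured, instead of vanishing.
                self.entries[url].stale = True
            else:
                self._unindex(url)
        return len(urls)
```

A news site could, for instance, tag each article page with both an article key and a section key, so that publishing a correction purges one page while a section-wide change purges them all.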

So, when it comes to caching, Fastly becomes an extension of your application by letting you cache more and serve more from the edge, while giving you total control over how your content is cached (and uncached) at the edge, closest to your users.

Control: programmability and logic at the edge

Ultimately, control is about programmability. To truly integrate a CDN into your application stack, you have to be able to control its features, configuration, and behavior programmatically, in real time. Fastly’s API was one of our first major features, and many of our customers continue to use it. Instant Purging, which happens through an API call, is a perfect example of how we give our users control.
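Since purging is an API call, it fits naturally into scripts and deployment tools. The sketch below only builds the HTTP request with Python's standard library. It assumes Fastly's purge-by-surrogate-key endpoint (`POST /service/{service_id}/purge/{key}`), the `Fastly-Key` authentication header, and the `Fastly-Soft-Purge: 1` header for soft purges, as we understand them from Fastly's API documentation; `SERVICE_ID` and `API_TOKEN` are placeholders, and the current API reference should be checked before relying on it.

```python
import urllib.parse
import urllib.request

API_BASE = "https://api.fastly.com"


def build_surrogate_key_purge(service_id: str, surrogate_key: str,
                              api_token: str, soft: bool = False) -> urllib.request.Request:
    """Build (but do not send) a purge-by-surrogate-key API request."""
    headers = {"Fastly-Key": api_token, "Accept": "application/json"}
    if soft:
        # Ask for a soft purge: mark matching objects stale instead of removing them.
        headers["Fastly-Soft-Purge"] = "1"
    key = urllib.parse.quote(surrogate_key, safe="")
    url = f"{API_BASE}/service/{service_id}/purge/{key}"
    return urllib.request.Request(url, headers=headers, method="POST")
```

Passing the returned request to `urllib.request.urlopen(req)` would send it; building it separately keeps the function easy to test without network access.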

The API is full-featured and enables developers and application owners to manipulate every aspect of their configuration. Customers use one of our many API clients or write their own code to interface with Fastly’s configuration subsystems. Configuration changes through the API take effect in seconds. This real-time nature is vital for integrating Fastly into a normal deployment workflow; if it took four hours to deploy a single configuration change, it would be impossible to iterate on configurations as quickly as you iterate on your app deployments. And Patrick wouldn't be able to present a great story with Riff-Raff!
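Here is a sketch of how a deployment tool might push a configuration change through the API. It assumes Fastly's versioned configuration model as we understand it: clone the active version with `PUT /service/{id}/version/{n}/clone`, apply changes to the new draft version, then activate it with `PUT /service/{id}/version/{n}/activate`, with the clone response carrying the new version's `number`. The HTTP transport is passed in as `send` so the flow can be tested offline, and `apply_changes` is a hypothetical hook for uploading VCL or editing backends. This is not a description of how Riff-Raff integrates with Fastly.

```python
import json
import urllib.request
from typing import Callable

API_BASE = "https://api.fastly.com"


def api_request(method: str, path: str, token: str) -> urllib.request.Request:
    """Build an authenticated request against the (assumed) Fastly API base URL."""
    return urllib.request.Request(
        API_BASE + path,
        method=method,
        headers={"Fastly-Key": token, "Accept": "application/json"},
    )


def urlopen_json(req: urllib.request.Request) -> dict:
    """Default transport: send the request and decode the JSON response."""
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def deploy_config(service_id: str, active_version: int, token: str,
                  apply_changes: Callable[[int], None],
                  send: Callable[[urllib.request.Request], dict] = urlopen_json) -> int:
    # 1. Clone the active version so the live configuration is never edited in place.
    clone = send(api_request("PUT", f"/service/{service_id}/version/{active_version}/clone", token))
    new_version = clone["number"]
    # 2. Apply changes to the draft (hypothetical hook: VCL upload, backends, ...).
    apply_changes(new_version)
    # 3. Activate the new version.
    send(api_request("PUT", f"/service/{service_id}/version/{new_version}/activate", token))
    return new_version
```

Cloning before editing means the live version is never modified in place, so rolling back amounts to reactivating the previous version number.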

Without a full-featured API, it’d be nearly impossible for Fastly to act as an extension of your application, because without programmatic interfaces it would be hard to automate iteration. But control can go one step further. To truly extend the application to the edge, you should also be able to offload some application logic to the edge. This is where Fastly’s customers can leverage the power of VCL. With VCL, you have great flexibility to deploy complex logic to the edge and make application-level decisions closer to your clients. VCL lets you generate content at the edge, manipulate HTTP headers, select different origins based on various criteria, change caching rules, apply geolocation policies, force users onto secure versions of your application over Transport Layer Security (TLS), choose when to serve stale content, and much, much more. It’s easy to envision Fastly’s distributed platform as just another part of your application when you can deploy this level of logic closer to your users.
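The kinds of edge decisions listed above can be sketched as a single function. This Python version only models the logic (on Fastly it would live in VCL): it redirects plain-HTTP requests to HTTPS, applies a geolocation policy, and picks an origin by path prefix. The origin hostnames, the `/api/` routing rule, and the placeholder country code `XX` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EdgeRequest:
    scheme: str
    host: str
    path: str
    country: str  # e.g. the result of a geolocation lookup


@dataclass
class EdgeDecision:
    redirect: Optional[str] = None
    origin: Optional[str] = None
    status: int = 200


# Hypothetical origins and policy; these are not real hostnames or rules.
API_ORIGIN = "api-origin.example.internal"
WEB_ORIGIN = "web-origin.example.internal"
BLOCKED_COUNTRIES = {"XX"}


def decide(req: EdgeRequest) -> EdgeDecision:
    # Force TLS: send plain-HTTP clients to the https:// version of the URL.
    if req.scheme != "https":
        return EdgeDecision(redirect=f"https://{req.host}{req.path}", status=301)
    # Geolocation policy: refuse requests from the (hypothetical) blocked regions.
    if req.country.upper() in BLOCKED_COUNTRIES:
        return EdgeDecision(status=403)
    # Origin selection by path prefix.
    origin = API_ORIGIN if req.path.startswith("/api/") else WEB_ORIGIN
    return EdgeDecision(origin=origin)
```

The order of the checks is a design choice: redirecting to TLS first means the geolocation and routing rules only ever run on secure requests.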

When it comes to fully integrating Fastly into your application, the programmatic interfaces provide real-time interaction with the platform, while VCL lets you run complex logic at the edge. Together, these let your application extend to the Fastly edge.

Visibility: real-time logging and stats

After extending the caching infrastructure to the edge, programmatically controlling the behavior of the platform for your application in real time, and running logic on the Fastly edge servers, wouldn't it feel futile if you couldn't instantly see what was going on? This is where visibility into your traffic and what your users are doing comes in. Fastly has always believed in providing full visibility into what's happening with the application you've deployed on our platform and, because of this, we provide multiple mechanisms through which you can keep track of what's going on.

Fastly's real-time logging features are extremely useful and let you stream logs to any number of endpoints. The platform supports standard log delivery protocols like syslog and FTP, cloud storage services like Amazon S3 and Rackspace Cloud Files, and a number of popular logging services like Logentries and Papertrail. Logs are streamed in real time, and the contents of each log entry are fully customizable to include any standard logging field, along with any readable Varnish variables available in VCL. Moreover, because logging is controlled through VCL, it can be conditional: you can pick and choose when you log, what you log, and where you log to, based on logic deployed in VCL. For example, you can log one way on a cache miss and a different way on a cache hit. With all log streaming happening in real time, you have the same visibility into what your users are doing at the edge as you have into your own origin infrastructure. Again, this is a perfect example of how Fastly extends your application to the edge, both in how you do things and how you see things.
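Conditional logging can be modeled as a function that picks both the destination and the format from the request's cache state. In this Python sketch, which stands in for logic that would be written in VCL, hits go to a compact plain-text stream and misses go to a JSON stream that also records origin fetch time. The endpoint names `hits-syslog` and `misses-json`, and the record fields, are hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass
class RequestRecord:
    client_ip: str
    url: str
    status: int
    cache_state: str   # "HIT" or "MISS"
    origin_ms: float   # time spent fetching from origin; 0.0 on a hit


def route_log(rec: RequestRecord) -> tuple:
    """Pick a (hypothetical) endpoint and a log format based on cache state."""
    if rec.cache_state == "HIT":
        # High-volume hits: a compact plain-text line for a syslog endpoint.
        return "hits-syslog", f"{rec.status} {rec.url}"
    # Misses: richer JSON, including origin timing, for a log analytics service.
    line = json.dumps({
        "client_ip": rec.client_ip,
        "url": rec.url,
        "status": rec.status,
        "origin_ms": rec.origin_ms,
    }, sort_keys=True)
    return "misses-json", line
```

Splitting the streams this way keeps the high-volume hit log small while giving the misses, which are the requests that actually reach your origin, enough detail to debug.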

Since programmatic interaction is vital to Fastly being considered a real part of your stack, Fastly also offers APIs for visibility. The real-time stats API allows for current stats to be monitored — in fact, the cool network-wide requests/sec dial and the stats around it on www.fastly.com all use the real-time stats API. And as important as real-time stats are, historical stats are also essential to knowing what's happened with your application. Fastly provides access to these statistics through the historical stats API as well.
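A dashboard built on the historical stats API might turn per-interval counters into a cache hit ratio. This sketch assumes a JSON payload with a `data` list whose entries carry `hits` and `miss` counts, which is our reading of the historical stats response; the field names should be checked against Fastly's API reference, and the sample numbers are made up purely to exercise the function.

```python
def hit_ratio(payload: dict) -> float:
    """Cache hit ratio across all intervals in a stats payload.

    Assumes a shape like {"data": [{"hits": int, "miss": int, ...}, ...]}.
    """
    intervals = payload.get("data", [])
    hits = sum(p.get("hits", 0) for p in intervals)
    misses = sum(p.get("miss", 0) for p in intervals)
    total = hits + misses
    return hits / total if total else 0.0


# Made-up numbers purely to exercise the function.
sample = {"data": [{"hits": 90, "miss": 10}, {"hits": 45, "miss": 5}]}
print(f"hit ratio: {hit_ratio(sample):.2%}")
```

Summing the counters before dividing, rather than averaging per-interval ratios, keeps busy intervals from being underweighted relative to quiet ones.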

Providing this level of visibility is an important component of truly stretching your application to the edge, and Fastly's log streaming and statistics APIs enable this for our customers. In fact, many customers have integrated the real-time statistics Fastly provides directly into their own application dashboards, a very good sign that they've embraced Fastly as an extension of their application and stack. When I sent this post to Patrick to review before we published it, he quickly sent me a screenshot of Next Radiator, an open source application dashboard used at the Financial Times.

This is a great example of how Fastly stats can be a part of your application dashboard and give you insight into your traffic (thanks, Patrick!).

Final thoughts

Treating Fastly as a true extension of your application is a powerful concept. Traditional CDNs that behave like opaque black boxes never let application owners fully leverage those platforms.

As Ross Paul, CTO of 1stdibs, put it, "What’s dramatically different between using Fastly and other CDNs is that Fastly is not a black box we can’t control. Fastly is part of our infrastructure — it’s almost as if we were to spin up data centers all over the world just to be Varnish nodes; that’s Fastly.”